Named Entity Recognition (NER) enables AI systems to identify people, places, organizations, and other key information within text. From healthcare to finance, NER powers modern applications by transforming unstructured data into actionable insights using transformer models and deep learning. #MachineLearning #NLP #ArtificialIntelligence #DataScience #DeepLearning #TransformerModels

Project Volkner: Integrating Transformer Modeling into Pentesting Automation 🤖

Today marked a significant milestone: the foundational transformer models are complete. I am now in the process of bridging the Sabrina pentesting AI agent with this new transformer modeling system.

This integration will enhance Sabrina's core Bootstrapping logic (augmented decision trees), which currently governs how she navigates the vulnerability database and determines precise payload injection points. The goal is to dramatically improve her decision-making and adaptability when interacting with diverse web architectures.

A key challenge has been resource management. Volkner, our dedicated hardware management system, drives the modeling process and optimizes GPU/system usage. By offloading resource allocation and performance tuning to Volkner—an AI learning system itself—we achieved stable utilization and bypassed the need for manual graphics card argument tuning. We're seeing excellent stability where the calculated VRAM requirements are consistently managed below capacity.
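
To make the "calculated VRAM requirements below capacity" idea concrete, here is a rough sketch of a budget check. It is not Volkner's actual logic, which this post does not describe. The function names, the 10% headroom, and the assumption of fp32 training with an Adam-style optimizer (weights + gradients + two optimizer states per parameter) are all hypothetical, and activation memory is passed in by hand rather than computed.

```python
def estimate_training_vram_gib(num_params, bytes_per_param=4,
                               optimizer_states=2, activation_gib=0.0):
    """Rough VRAM estimate in GiB (hypothetical helper, not Volkner's method).

    Counts one copy of the weights, one copy of the gradients, and
    `optimizer_states` extra tensors per parameter (2 for Adam-style
    optimizers). Activation memory is workload-dependent, so it is
    supplied by the caller instead of being estimated here.
    """
    bytes_per_parameter_total = bytes_per_param * (2 + optimizer_states)
    return num_params * bytes_per_parameter_total / 2**30 + activation_gib


def fits(required_gib, capacity_gib, headroom=0.1):
    """True if the requirement stays under capacity minus a safety margin."""
    return required_gib <= capacity_gib * (1 - headroom)


# Example: a 125M-parameter model on an 8 GiB card (illustrative numbers).
required = estimate_training_vram_gib(125_000_000, activation_gib=1.0)
print(f"estimated: {required:.2f} GiB, fits on 8 GiB: {fits(required, 8.0)}")
```

A scheduler could run a check like this before launching a job and shrink the batch size (which lowers the activation term) until `fits` returns True.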

Volkner will be made public when I'm done with his systems.

#AI #CyberSecurity #PenetrationTesting #MLOps #TransformerModels

Researchers unveil Context Engineering 2.0, a leap as AI shifts from Era 2.0 to 3.0. By expanding the context window of transformer language models, they show how smarter prompts can unlock deeper reasoning. Open-source teams can start experimenting today—this could redefine prompt engineering for the next generation of AI. #ContextEngineering2 #AIera3 #TransformerModels #PromptEngineering

🔗 https://aidailypost.com/news/researchers-push-context-engineering-20-ai-moves-from-era-20-30

Continuous Autoregressive Language Models

https://arxiv.org/abs/2510.27688

#HackerNews #Continuous #Autoregressive #Language #Models #NaturalLanguageProcessing #AI #Research #MachineLearning #TransformerModels

Continuous Autoregressive Language Models

The efficiency of large language models (LLMs) is fundamentally limited by their sequential, token-by-token generation process. We argue that overcoming this bottleneck requires a new design axis for LLM scaling: increasing the semantic bandwidth of each generative step. To this end, we introduce Continuous Autoregressive Language Models (CALM), a paradigm shift from discrete next-token prediction to continuous next-vector prediction. CALM uses a high-fidelity autoencoder to compress a chunk of K tokens into a single continuous vector, from which the original tokens can be reconstructed with over 99.9% accuracy. This allows us to model language as a sequence of continuous vectors instead of discrete tokens, which reduces the number of generative steps by a factor of K. The paradigm shift necessitates a new modeling toolkit; therefore, we develop a comprehensive likelihood-free framework that enables robust training, evaluation, and controllable sampling in the continuous domain. Experiments show that CALM significantly improves the performance-compute trade-off, achieving the performance of strong discrete baselines at a significantly lower computational cost. More importantly, these findings establish next-vector prediction as a powerful and scalable pathway towards ultra-efficient language models. Code: https://github.com/shaochenze/calm. Project: https://shaochenze.github.io/blog/2025/CALM.
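
The "factor of K" claim in the abstract is simple arithmetic, and a toy sketch makes it visible. This is not the CALM autoencoder or its training framework; it only groups tokens into chunks of K and counts how many autoregressive steps each scheme would need. The function names and the whitespace tokenizer are assumptions for illustration.

```python
import math


def chunk_tokens(tokens, k):
    """Group a token sequence into consecutive chunks of at most k tokens."""
    if k < 1:
        raise ValueError("k must be a positive integer")
    return [tokens[i:i + k] for i in range(0, len(tokens), k)]


def generative_steps(num_tokens, k):
    """Autoregressive steps needed when each step emits one chunk of k tokens."""
    if k < 1:
        raise ValueError("k must be a positive integer")
    return math.ceil(num_tokens / k)


# Whitespace splitting stands in for a real tokenizer (assumption).
tokens = "the quick brown fox jumps over the lazy dog today".split()

for k in (1, 2, 4):
    chunks = chunk_tokens(tokens, k)
    print(f"K={k}: {generative_steps(len(tokens), k)} steps, chunks={chunks}")
```

In the paper's setting each chunk would be encoded as one continuous vector and the model would predict the next vector; here the chunks stay as plain token lists so that only the step count is shown.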

⚡ How MiniMax M1 Just Rewrote the Rules of AI

https://helioxpodcast.substack.com/publish/post/166746306

https://www.buzzsprout.com/2405788/episodes/17370848

Sometimes the most profound changes happen not with fanfare, but with a whisper that echoes through eternity.

Thanks for listening today!

#AI #MachineLearning #OpenSource #TechNews #AIResearch #DeepLearning #ComputerScience #Innovation #TechBreakthrough #OpenSourceAI #TransformerModels #ReinforcementLearning

🧬 Could the grammar of DNA be unraveled using tools from natural language processing?

🔗 A review on the applications of Transformer-based language models for nucleotide sequence analysis. Computational and Structural Biotechnology Journal, DOI: https://doi.org/10.1016/j.csbj.2025.03.024

📚 CSBJ: https://www.csbj.org/

#Bioinformatics #AIinBiology #Transformers #Genomics #NLP #DeepLearning #PrecisionMedicine #TransformerModels #DNABERT #ComputationalBiology

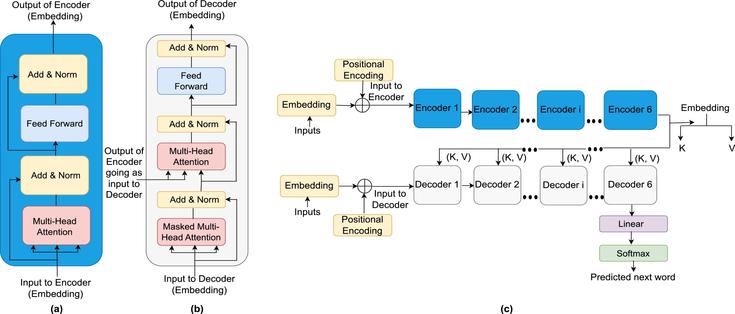

Attention is all you need!

The 2017 paper that proposed the Transformer model.

I used #notebooklm to create a podcast on this paper to understand the Transformer model.

Enjoy listening to it! #llm #transformermodels #gpt

#Google is using #TransformerModels for music recommendations! 🎶

Now being tested on YouTube, this approach aims to understand sequences of user actions when listening to music to better predict user preferences based on their context.

More details: https://bit.ly/4e14ZdE