“Breakthroughs in large language models (#LLMs) have made conversations with #chatbots feel more natural and human-like. Applications such as Replika and Character.ai, which allow users to chat with artificial intelligence (#AI) versions of famous actors and science-fiction characters, are becoming popular, especially among young #users. As a #neuroscientist studying #stress, #vulnerability and #resilience, I’ve seen how easily people react to even the smallest emotional cues, even when they know that those cues are artificial. For example, study participants exhibit measurable physiological responses when shown videos of computer-generated avatars expressing sadness or fear.”

…

“Because these AI systems are #trained on vast amounts of emotionally expressive human language, their outputs can come across as surprisingly natural. *LLMs often mirror human emotional patterns, not because they understand emotions, but because their responses resemble how people talk.*”

…

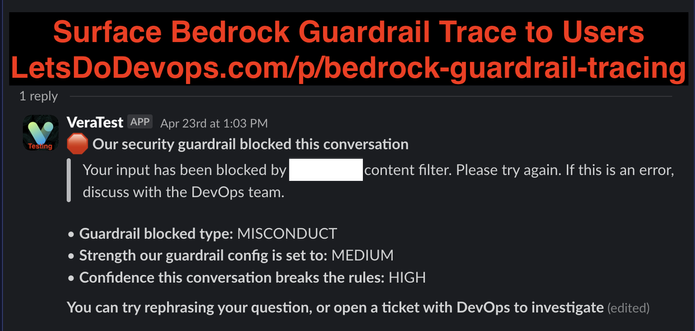

“Now is the time to establish mandatory #safeguards for all emotionally responsive AI.”

“Emotional influence isn’t a glitch. It’s a core feature of LLMs. If we celebrate their ability to comfort or advise, we must also confront their capacity to mislead or manipulate. We’ve built machines that sound like they care. Now, we must ensure that they don’t hurt the very people who turn to them for support. That means giving emotionally responsive AI not just more capabilities, but clearer boundaries.”

#ZivBenZion / #neuroscience / #AI / #GuardRails / #control / #WhiteCollar <https://www.nature.com/articles/d41586-025-02031-w> (paywall) / <https://archive.md/IyddU>
