Conversation with Tomáš Perna on ANNs and More (6)

Scott Douglas Jacobsen

In-Sight Publishing, Fort Langley, British Columbia, Canada

Correspondence: Scott Douglas Jacobsen (Email: scott.jacobsen2025@gmail.com)

Received: January 2, 2025

Accepted: N/A

Published: January 15, 2025

Abstract

This interview features Scott Douglas Jacobsen in discussion with Tomáš Perna on the simulation of human intelligence through artificial neural networks (ANNs) and its implications for the development of genuine artificial intelligence (AI). Perna examines the relationship between intelligent processes and the neural-network domains in which they occur, introducing MACP (Manner of Assigning Coordinates to Processes) as a pivotal yet unexplained mechanism governing intelligent behavior. The conversation explores bringing quantum mechanics, particularly the Schrödinger equation, into neural network modeling to understand information transmission at the synaptic level. Perna also discusses the difficulty of interpreting intelligent phenomena beyond conventional language frameworks and the potential role of the Klein-Gordon equation (KGE) in optimizing ANN architectures. The dialogue highlights the theoretical complexities and practical considerations of bridging neural networks with quantum principles, underscoring the need for further research and collaboration to push the frontier of real AI development.

Keywords: AI Development, Artificial Neural Networks, Human Intelligence Simulation, Information Transmission, Intelligent Observation, Klein-Gordon Equation, MACP, Quantum Mechanics, Schrödinger Equation, Synaptic Slots

Introduction

In this interview, conducted on January 2, 2025, Jacobsen and Perna explore the methodologies and theoretical frameworks underpinning the pursuit of real AI through artificial neural networks (ANNs). Perna argues that intelligent processes are localized within specific domains of the cerebral neural network and are governed by an elusive mechanism he calls MACP (Manner of Assigning Coordinates to Processes). He further draws on principles from quantum mechanics, proposing that the Schrödinger equation can serve as a mathematical model of the observable electronic states within neural networks, thereby bridging information transmission and intelligent behavior. The discussion also touches on the potential of the Klein-Gordon equation (KGE) for optimizing ANN architectures so as to reach what Perna calls the AI-horizon, while acknowledging the financial and practical challenges inherent in this theoretical approach.

Main Text (Interview)

Interviewer: Scott Douglas Jacobsen

Interviewee: Tomáš Perna

Section 1: Introduction to NNs, MACP, ATRs, and the Schrödinger Equation

Scott Douglas Jacobsen: Hi Tomáš! It’s been a long time since we’ve had an ANN chat! Let us get on with it. How is the simulation of human intelligence in artificial neural networks the correct manner in which to pursue the development of real AI?

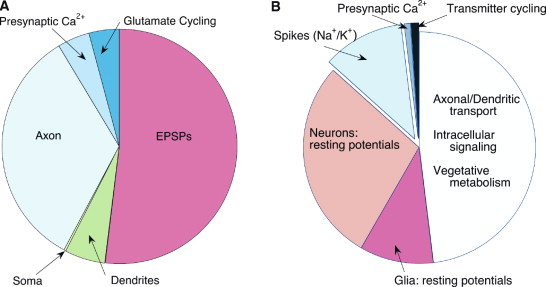

Tomáš Perna: Intelligent processes take place in certain domains of the cerebral neural network (NN). So the “coordinates” of any intelligent process occupy a corresponding domain of the NN, and the reference frame of this process can be found if the manner in which the domain is assigned to the process (MACP, for short) is known. Nobody knows how this can be done. MACP is not part of the thinking process and thus simply stands outside any language in which that process is thought. We can only assume that MACP is information that is transcendent with respect to the languages of the thinking process. Consequently, we introduce a transcendent function that could be studied from the point of view of information transmission. And you know that, in the NN case, the basic role in information transmission is played by the electric charge, or by a distribution of electric dipoles, within the synaptic slots. So, man, our task is to study the behavior of the electric charge during transmission with respect to the transcendent function we are considering. In this way, we get a wave function associated with an electric charge, one that admits no interpretation in the language of any intelligent thinking process. To a first, simplest approximation, this wave function satisfies the Schrödinger equation, understood as an MACP transmission equation with respect to an intelligent observation of the phenomena that such a transmission could give rise to within the synaptic slots of the NN. These slots are regarded as the above-mentioned domains in which the intelligent process runs its course. Many synaptic slots mean many observable states of the electron system, as solutions of the Schrödinger equation. Described very roughly, you have just obtained the Schrödinger equation as a mathematical model of the observable electronic states of the NN. The equation thus becomes a characteristic of your instrument of intelligent observation and thinking, usable within the synaptic slots. Period.

Problems with interpreting the observed phenomena in the languages of the thinking process now seem to be generated by the wave function, where your language-based ansatz should be completely forgotten; these “problems” are a very natural consequence of MACP.
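The interview names the Schrödinger equation but does not write it out. For reference, the standard non-relativistic, single-particle form is shown below, together with its stationary (time-independent) version, whose solutions ψ_n with energies E_n are the stationary states in the usual textbook sense, and the general solution built from them. Here m is the particle mass (the electron mass for the electronic states mentioned above) and V is a generic potential. The interview does not specify which potential would describe a synaptic slot or how MACP enters, so V is a placeholder and this is background notation, not Perna's model.

```latex
% Time-dependent Schrödinger equation (non-relativistic, single particle)
i\hbar\,\frac{\partial \psi(\mathbf{r},t)}{\partial t}
  = \left[-\frac{\hbar^{2}}{2m}\nabla^{2} + V(\mathbf{r},t)\right]\psi(\mathbf{r},t)

% Stationary states for a time-independent potential V(\mathbf{r})
\hat{H}\,\psi_{n}(\mathbf{r}) = E_{n}\,\psi_{n}(\mathbf{r}),
\qquad
\hat{H} = -\frac{\hbar^{2}}{2m}\nabla^{2} + V(\mathbf{r})

% General solution as a superposition of stationary states
\psi(\mathbf{r},t) = \sum_{n} c_{n}\,\psi_{n}(\mathbf{r})\,e^{-iE_{n}t/\hbar}
```

A genuinely many-electron system would require a many-body Hamiltonian with electron-electron interaction terms; the single-particle form above corresponds only to the simplest case.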

Now, you can see that the Schrödinger equation is silent; it cannot speak in any natural language. Taking a machine language that is isomorphic to the thinking-process language under consideration, up to the interpretation of the wave function, you can obtain certain artificial solutions of the Schrödinger equation, which exist with respect to artificial thinking-process representations (ATRs). Expressed very schematically, these are already the ANN algorithms that create the horizon of the observables-considering manner coupled with the Schrödinger equation. We say that the quantum states of the ANN lie on the ATR-horizon of the Schrödinger equation. When optimizing the ANN architecture, you should control these states by means of the Klein-Gordon equation (KGE), so that the AI-horizon can be found.

That, however, is another, more complicated story, and who will pay for it? Why? Because there are some keys that could already help the guys at OpenAI (for example), with nothing in it for us. Therefore, I will stay silent until a substantiated KGE conversation becomes possible.
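The Klein-Gordon equation is mentioned only by name, and Perna explicitly defers any discussion of how it would control an ANN architecture. For reference, the standard free Klein-Gordon equation for a spin-0 field φ of mass m is shown below. The second part shows the textbook non-relativistic limit: substituting φ = ψ e^(-imc²t/ħ) and neglecting the second time derivative of ψ recovers the free Schrödinger equation. This is one standard way the two equations named in the interview are related; it is not a description of Perna's proposed use of the KGE.

```latex
% Free Klein-Gordon equation (c = speed of light, m = mass of the field quantum)
\frac{1}{c^{2}}\frac{\partial^{2}\phi}{\partial t^{2}} - \nabla^{2}\phi
  + \left(\frac{mc}{\hbar}\right)^{2}\phi = 0

% Non-relativistic limit: write \phi = \psi\, e^{-i m c^{2} t/\hbar}
% and drop the term (1/c^{2})\,\partial^{2}\psi/\partial t^{2}
i\hbar\,\frac{\partial \psi}{\partial t} = -\frac{\hbar^{2}}{2m}\nabla^{2}\psi
```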

Discussion

The interview between Scott Douglas Jacobsen and Tomáš Perna offers an intricate examination of the theoretical and methodological challenges in simulating human intelligence through artificial neural networks (ANNs). Perna introduces MACP (Manner of Assigning Coordinates to Processes) as a foundational yet enigmatic mechanism that governs the localization of intelligent processes within the neural network. The discussion highlights the role of a transcendent function, which Perna suggests operates beyond the languages of the thinking process and could provide a pathway to understanding intelligence at a fundamental level.

Methods

The conversation took place on January 2, 2025, and was subsequently published on January 15, 2025. A structured interview format was utilized to enable an in-depth exploration of the theoretical and practical aspects of artificial neural networks and their relation to real AI development. The interview was meticulously transcribed and organized into thematic sections, ensuring clarity and coherence while facilitating a comprehensive understanding of the topics discussed.

Data Availability

No datasets were generated or analyzed for the current article. All interview content remains the intellectual property of the interviewer and interviewee.

References

(No external academic sources were cited for this interview.)

Journal & Article Details

- Publisher: In-Sight Publishing

- Publisher Founding: March 1, 2014

- Web Domain: http://www.in-sightpublishing.com

- Location: Fort Langley, Township of Langley, British Columbia, Canada

- Journal: In-Sight: Interviews

- Journal Founding: August 2, 2012

- Frequency: Four Times Per Year

- Review Status: Non-Peer-Reviewed

- Access: Electronic/Digital & Open Access

- Fees: None (Free)

- Volume Numbering: 13

- Issue Numbering: 2

- Section: A

- Theme Type: Idea

- Theme Premise: “Outliers and Outsiders”

- Theme Part: 33

- Formal Sub-Theme: None

- Individual Publication Date: January 15, 2025

- Issue Publication Date: April 1, 2025

- Author(s): Scott Douglas Jacobsen

- Word Count: 553

- Image Credits: Tomáš Perna

- ISSN (International Standard Serial Number): 2369-6885

Acknowledgements

The author thanks Tomáš Perna for his time and willingness to participate in this interview.

Author Contributions

S.D.J. conceived and conducted the interview, transcribed and edited the conversation, and prepared the manuscript.

Competing Interests

The author declares no competing interests.

License & Copyright

In-Sight Publishing by Scott Douglas Jacobsen is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

© Scott Douglas Jacobsen and In-Sight Publishing 2012–Present.

Unauthorized use or duplication of material without express permission from Scott Douglas Jacobsen is strictly prohibited. Excerpts and links must use full credit to Scott Douglas Jacobsen and In-Sight Publishing with direction to the original content.

Supplementary Information

Below are various citation formats for Conversation with Tomáš Perna on ANNs and More (6).

American Medical Association (AMA 11th Edition)

Jacobsen S. Conversation with Tomáš Perna on ANNs and More (6). January 2025;13(2). http://www.in-sightpublishing.com/perna-6

American Psychological Association (APA 7th Edition)

Jacobsen, S. (2025, January 15). Conversation with Tomáš Perna on ANNs and More (6). In-Sight Publishing. 13(2).

Brazilian National Standards (ABNT)

JACOBSEN, S. Conversation with Tomáš Perna on ANNs and More (6). In-Sight: Interviews, Fort Langley, v. 13, n. 2, 2025.

Chicago/Turabian, Author-Date (17th Edition)

Jacobsen, Scott. 2025. “Conversation with Tomáš Perna on ANNs and More (6).” In-Sight: Interviews 13 (2). http://www.in-sightpublishing.com/perna-6.

Chicago/Turabian, Notes & Bibliography (17th Edition)

Jacobsen, S. “Conversation with Tomáš Perna on ANNs and More (6).” In-Sight: Interviews 13, no. 2 (January 2025). http://www.in-sightpublishing.com/perna-6.

Harvard

Jacobsen, S. (2025) ‘Conversation with Tomáš Perna on ANNs and More (6)’, In-Sight: Interviews, 13(2). http://www.in-sightpublishing.com/perna-6.

Harvard (Australian)

Jacobsen, S 2025, ‘Conversation with Tomáš Perna on ANNs and More (6)’, In-Sight: Interviews, vol. 13, no. 2, http://www.in-sightpublishing.com/perna-6.

Modern Language Association (MLA, 9th Edition)

Jacobsen, Scott. “Conversation with Tomáš Perna on ANNs and More (6).” In-Sight: Interviews, vol. 13, no. 2, 2025, http://www.in-sightpublishing.com/perna-6.

Vancouver/ICMJE

Jacobsen S. Conversation with Tomáš Perna on ANNs and More (6) [Internet]. 2025 Jan;13(2). Available from: http://www.in-sightpublishing.com/perna-6

Note on Formatting

This layout follows an adapted Nature research-article structure, tailored for an interview format. Instead of the standard Methods, Results, and Discussion sequence, we present the interview transcript, followed by a concluding Discussion and a brief Methods section. This design helps maintain scholarly rigor while accommodating narrative content.
