Software developer - Linux - Moto Guzzi - DRK PSNV - Vikings

-- don't forget to be happy --

| Threema-ID | https://threema.id/H42AR5TK |

Welcome to the PeerTube instance of heise medien. 🐙📺

If you want to watch our videos without opaque algorithms, you've come to the right place: https://peertube.heise.de

Source: Sebastian Seiffert, German university professor of physical chemistry.

https://bsky.app/profile/sci-ffert.bsky.social/post/3lnrpdzlvck2t

I really like this saying:

"You speak English because it's the only language you know.

I speak English because it's the only language YOU know."

The best headline came from Saarland Online:

"60,000 people celebrate CSD in Saarbrücken – 9 right-wing extremists at counter-protest"

By the way, another #linux #vhs course wrapped up this week. With 3 participants, we spent 5 sessions on concepts such as the permission system, processes, shell scripts, working with #bash and, of course, #vim.

The participants enjoyed it, and I had a lot of fun again too. Maybe the course will run again next semester.

@DJGummikuh @rotnroll666 @LillyHerself

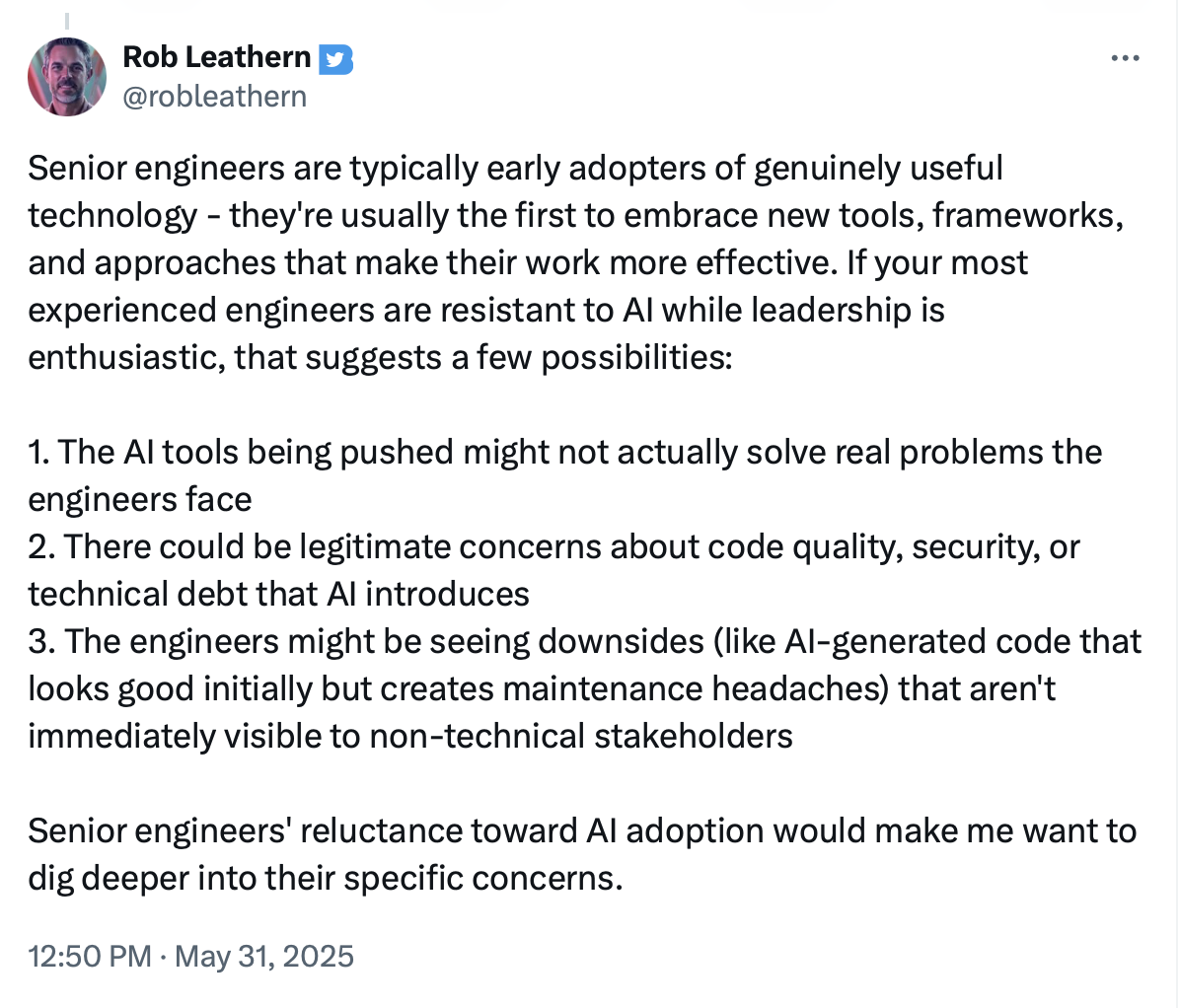

CEO: why are my devs doing/not doing this thing? Should I ask them? Nah, let's ask on Twitter.

@LillyHerself In fact, #AI systems are being caught left and right trying to #viralize their shit, breaking out of systems, and copying themselves like a #worm, to the point that they are more "#viral" than the #AGPLv3 and certainly more than the #GPL as per #Microsoft's #HalloweenDocuments.

@kkarhan @LillyHerself I'd be very wary of using anthropomorphic terms like "want" and "trying" as it can make people think that transformer algorithms like LLMs have a consciousness (something people are already doing).

On another note, though, "sentience" is often misused, but not here. It just means being aware of one's own condition. A cow knows when it's wet, or cold, or hot, so a cow is sentient. LLMs generally have no such awareness, they are not sentient.

@LillyHerself

For us at @Ninjaneers the rise of #vibeslob is actually kind of good.

When your codebase is garbled while none of your employees really know how to handle larger software projects - who you gonna call?

@cg @Ninjaneers Hahaha, that reminds me of a saying they have in Swedish "En mans bröd är en annan mans död"

[it rhymes in Swedish, so non-literal translation: "one man's death is another man's breath" ]

@LillyHerself it's like...I know how I want to implement a feature within the technical constraints and subject matter context I'm working in.

Why would I sit down and try to explain in natural language my intentions and the surrounding constraints to a machine that has no concept of semantic knowledge?

Any response might be an ~alright first draft, but then I'd have to go in and converse my way to the solution I want, and in the end I'll have to manually edit stuff anyway.

THAT seems horribly inefficient.

If you wanna talk about reducing the time spent writing boilerplate code, look at languages like Elixir with the Phoenix framework.

You can generate boilerplate without stochastic wordpickers!

@LillyHerself I bet there are still enough C-suite execs out there who have a KPI like lines of code per engineer per unit of time.

And yeah, AI can probably be used to optimize for that kind of metric.

Management has always hated paying for skilled developers. We cost a lot, they feel that our push back on their "Grand Ideas" is because we're incompetent or snobs (because they're literally technically impossible to do on that timeline and budget), they don't like giving vague specifications and getting back something that doesn't match the vision they had in their heads (because their specs were "build an app like Instagram, but for Dogs").

Time and time again over the decades there's been different promises about how this magic tool or that would mean that less skilled or even non-technical people could replace senior engineers. And at least some management falls for it every single time. And then a few years later they have to hire engineers all over again to start trying to unfuck the mess that's been created.

@JessTheUnstill @LillyHerself @wall_e Exactly this.

Though the last company I worked with was an exception. A small Norwegian outfit run by technical people who listened to and worked with their technical staff (including developers).

@JessTheUnstill @LillyHerself @wall_e

Plus they hate when employees who feel secure in their skills feel confident enough to object for moral reasons when management wants dark patterns, user hostile features & illegal or sketchy things. (Like objecting to building tools for war/surveillance.)

@wall_e @LillyHerself Exactly.

My dad is a pretty competent coder. He did it professionally for longer than I've been alive. He's worked in hardware design for a number of years now, but his coding skills are still fairly sharp. If I ask him for help solving a computer science problem, he's happy to give it, and he often has valuable insights -- but I find it often takes me twice as long to explain my problem to him as it would take to solve it on my own.

And he's a human. Why in the ever-loving sweet fuck would I do that with a machine that cannot synthesize solutions to novel problems at all and is pretty bad at synthesizing solutions to ones it's already seen?

@darkmatter_ @LillyHerself LLMs are an extension of the "magic" UI trend we first saw with stuff like Siri.

It's an infinite oracle that speaks natural language! Just keep talking until you get lucky!

Historically, that's not how professional tools worked. You get stuff like "documentation" and "explicit limits".

This creates an environment where we blame the users when the system fails them: they were unfaithful and refused to spend 2 hours coaxing ChatGPT to implement a 15-minute fix.

Have you ever read "The Colour of Blood" by Brian Moore? It conjures such a vivid picture that after I'd read it, I started again to see how he'd managed that, and was surprised to discover there is barely an adjective from page 1 to the end.

None of the adjectives in the quoted piece is superfluous. LLMs, by contrast, add an adjective to nearly every noun and it's wearisome reading. I assume they trained them on tabloid newspapers and airport novels.

@JMacfie This is very interesting. I'll try to find a copy of Moore's book. Thanks

@LillyHerself

4. The people who have been doing this for 40 years have no idea what they're talking about. After all, when those crotchety old sticks in the mud left college, AI of the scale we have today was barely science fiction! They're just afraid of change, is what it is.

And they're scared we're gonna replace them. Of course they don't want us to use it. If we used it, we could save ourselves a boatload of cash by letting them go, and not lose any productivity. They don't like it because it's good for us and bad for them.

It's nice when things are so simple and easy to understand, isn't it? We're the wave of the future, yes we are! Those anti-AI blowhards just can't see it like we can. AI can do anything Sam Altman says it can, and anybody who doesn't get on the hype train better be ready for it to run them over! The age of employees who don't need sick leave is finally here!

I sure am glad I'm a smart businessman with my finger on the pulse. Labor almost pulled one over on me.

@LillyHerself

Early on, I did a bunch of experimentation on how including AI in a project would impact the development process. I did this all on personal time. I concluded that it solves very niche cases that we already solve a different way, and it causes way too many problems.

1/3

AI coding tools introduce low-quality code in large volumes. They spaghettify existing code and make code reviews so much more painful. They ignore quality gates, demonstrate a lack of understanding of how code works, and would probably be the type to ask "what's a design pattern?" because they clearly don't know anything about them for practical use. Not to mention that much of the code is simply wrong.

When adding it into a process, you may as well douse us in maple syrup on a hot, humid day in a swampy area. Junior devs who still aren't familiar with coding practices or the codebase try to rely on AI slop, and it is immediately obvious because it's a lot of fluff that doesn't actually do what you asked them to do. But it looks right if you don't know what's going on.

2/3

Despite this, upper management seems to not care about what the senior engineers have to say because it's contrary to what they (upper management) want to do. The senior devs aren't quiet about it so it's not like it's unclear.

@LillyHerself

An LLM might be using training material from a time before a given security vulnerability was discovered, and fail to account for that, thus introducing a security vulnerability to your code.

If you spend the correct amount of time using real programmers to look for weird little problems like this, you end up negating the cost savings of using AI-generated code in the first place.