Choose One and Boost Please

Jeff Watson

@jeffwatson



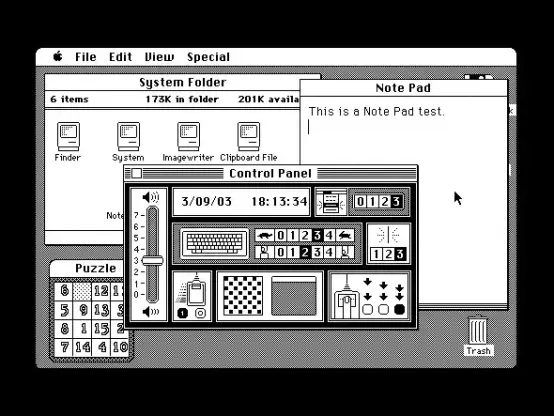

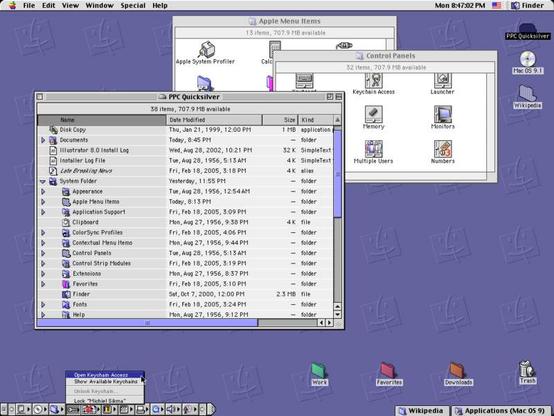

Every time I look at anything Liquid Glass, this is all I’ll ever see

It’s remarkable, especially this summer, to see just how much Apple got right in the first decade of the Mac. The NeXT acquisition may have saved the company, sure, but it was the durable and thoughtful design of Mac OS that persuaded users to tolerate a decaying foundation until something better came along.

Tim Cook should retire.

https://mastodon.social/@BenRiceM/114984029470988076


It is deeply and profoundly embarrassing to watch the head of a trillion dollar company debase himself like this

https://www.threads.com/@aaron.rupar/post/DNB0zymJDjS


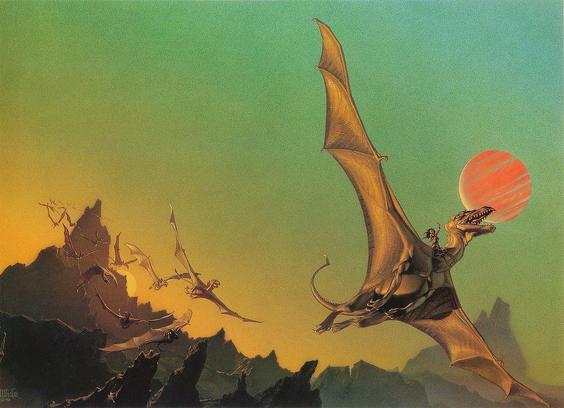

DRAGONFLIGHT (1978)

Acrylic on Masonite - 20" X 30"

For the first book in Anne McCaffrey's now legendary Dragonriders of Pern series, I wanted to create a literal visualization of the title. I selected an aerial point-of-view and tilted the horizon to simulate the dizzying sensation of flight. 1/4

#sciencefiction #scifi #scifiart #sff #illustration #annemccaffrey #pern #dragon

It’s not too late to start masking.

You don’t have to do it alone. You won’t be the lone masker.

There’s an engaged Covid-cautious and disability community who will help you.

There are local mask blocs that provide respirators to those who can’t afford them.

Covid is still here, and masks work!

Wow. This is as close to perfection as I've seen when it comes to accurately representing expressive characters using nothing but #LEGO. The faces on the bus and on Totoro are spot on using nothing but bricks.

Amazing work.

I saw a lot of press about the “ICEBlock” app recently, but clear links for where to download it were harder to come by. Here’s a link: https://apps.apple.com/us/app/iceblock/id6741939020

ICEBlock

Stay informed about reported ICE sightings, within a 5 mile radius of your current location, in real-time while maintaining your privacy. ICEBlock is a community-driven app that allows you to share and discover location-based reports without revealing any personal data. KEY FEATURES: • Anonymi…
