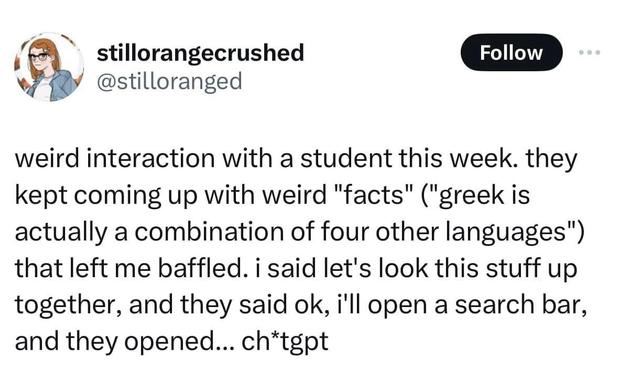

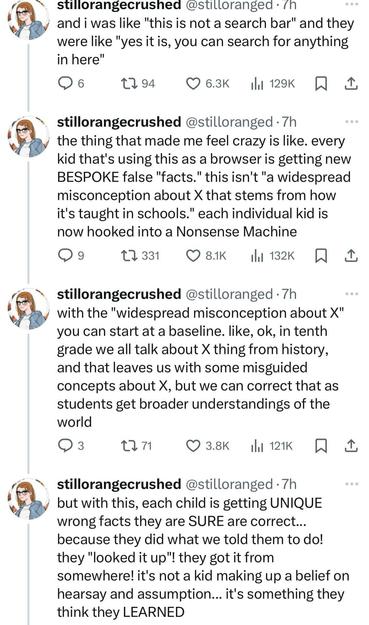

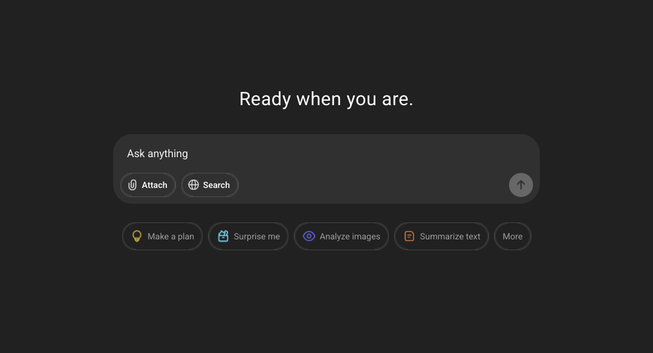

Edit: I got those screenshots from imgur. They might originally be from Xitter, with the account since deleted, or maybe from Threads, where the account isn't visible without logging in? 🤷

2nd Edit: @edgeofeurope found this https://threadreaderapp.com/thread/1809325125159825649.html

#school #AI #KI #meme #misinformation #desinformation