Jeff Watson

@jeffwatson

- 6 Followers

- 64 Following

- 423 Posts

I like how any Mr. Beast contest can be described as "What if a bad person was forced to give away large amounts of money but found a way to still be a total dick while doing so?"

Tesla dealerships don't want you to know this one weird trick of using saltwater and lemon juice to preserve and maintain the finish on their cybertrucks.

Woz: ‘I Am the Happiest Person Ever’

https://daringfireball.net/linked/2025/08/15/woz-on-slashdot

We have all worshipped at the feet of the wrong Steve…

https://mastodon.social/@daringfireball/115033598998035149

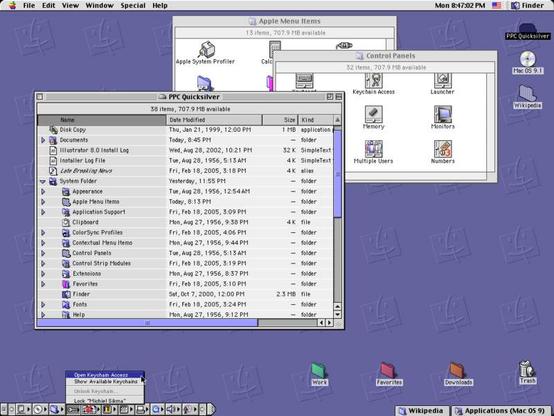

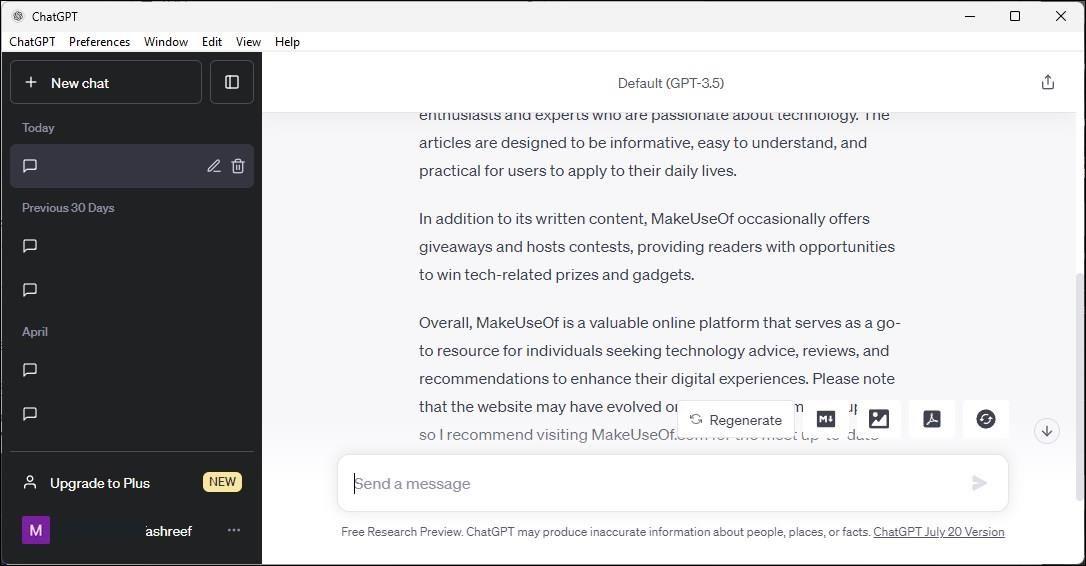

macOS UI design had a good run, and macOS 26 will be remembered and studied in books as a case study in how not to design a user interface.

It is impossible to conceive that anyone at Apple could think this was an improvement (either aesthetically or functionally) and so the only conclusion is that they simply don't care.

Whatever requirements drove this icon change, whatever process led to its approval, it is clear that at no point did the question "is this good?" factor in.

Once respected as design leaders, Apple has now transcended the very notion of design quality as a concept.

From: @BasicAppleGuy

https://mastodon.social/@BasicAppleGuy/115016185421357323

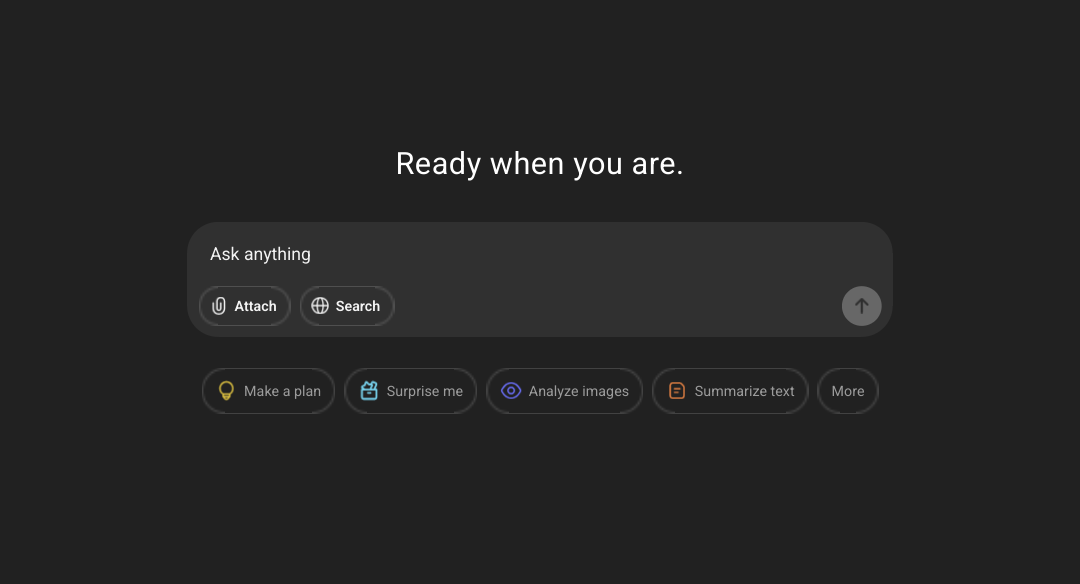

Choose One and Boost Please

Dilly-Dally

Lollygag

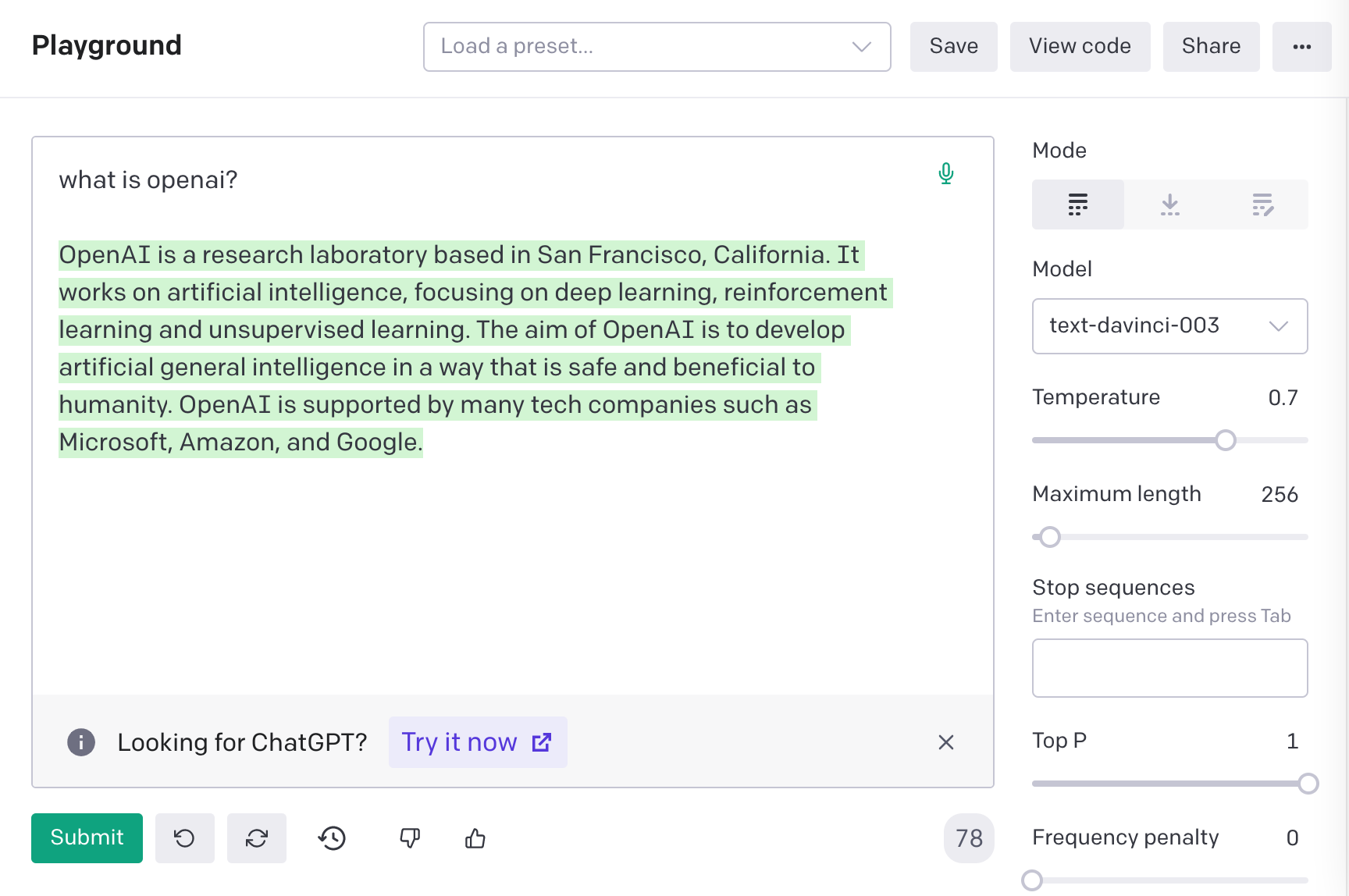

Every time I look at anything Liquid Glass, this is all I’ll ever see

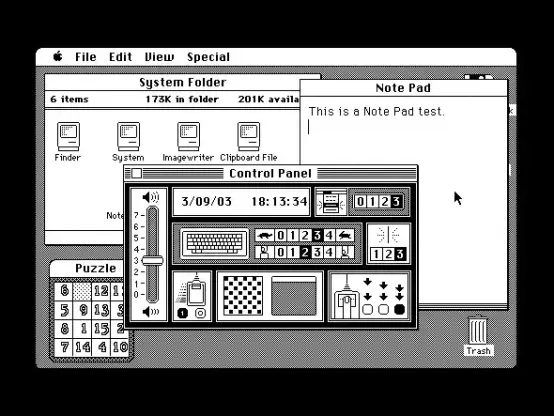

It’s remarkable, especially this summer, to see just how much Apple got right in the first decade of the Mac. The NeXT acquisition may have saved the company, sure, but it was the durable and thoughtful design of Mac OS that persuaded users to tolerate a decaying foundation until something better came along.

Tim Cook should retire.

https://mastodon.social/@BenRiceM/114984029470988076
