#opensource @NGIZero

| GitHub | https://github.com/hantu85 |

A thought has been nagging at me for a while now about the university I graduated from some time ago (fine, I graduated 25 years ago and started 30 years ago). My son is now in his first year of the same program I once took, in the same faculty, at the same university. He also has classes with a few people I remember (and from what junior tells me, they haven't changed at all, apart from their age ofc).

One other thing hasn't changed either... No matter how the course names have been modified (e.g. digital automata -> digital circuits), or how young the people running the lectures, exercise classes, or labs are, the teaching material is practically the same as 25 years ago... So they learn the secrets of the Turing machine (I'll admit, a nice curiosity) or the "W" machine (in various variants; and afterwards they basically have to learn the assembly language of whatever processor they will do something concrete on anyway), and they study analog electronics (this morning I tutored my son on calculating the parameters of a transistor amplifier in the common-emitter (OE) and common-collector (OC) configurations and explained how the gain formula for an operational amplifier is derived; I know, I teach this at an electronics technical school; do future computer scientists need it?), the basics of electrical engineering... There is also mathematics (split into two subjects in my student days), that is, analysis with algebra, and physics (taught as if it were the most important subject for computer scientists, and as if anyone who fails it will never become a specialist in computing in the broad sense). Oh, and they also have a course called Computer Programming, in which they do projects (in the labs, because fortunately there are no blackboard exercises for it) in... no, not in Pascal ;) In C++ (and that is the only positive, and the only nod toward something created after 1980).

Does this surprise me? Yes, I was convinced that something had changed in my program over 30 years. And that students who want to learn concrete things and develop their interests would not be left to rely only on themselves, as we once were.

My son gets angry. I'm not surprised. I used to get angry too.

A reminder that Meta - the company that comes up with new and "innovative" privacy violations on a weekly basis - is still certified under the EU-US Data Privacy Framework.

The current system doesn't work!

--

#privacy #DataProtection #GDPR #BigTech #SurveillanceCapitalism #Meta

Well, this is one way to address the terrible left-aligned text in title bars…

(The first image is from beta 1, and the second is from beta 2.)

Software development methodologies compared.

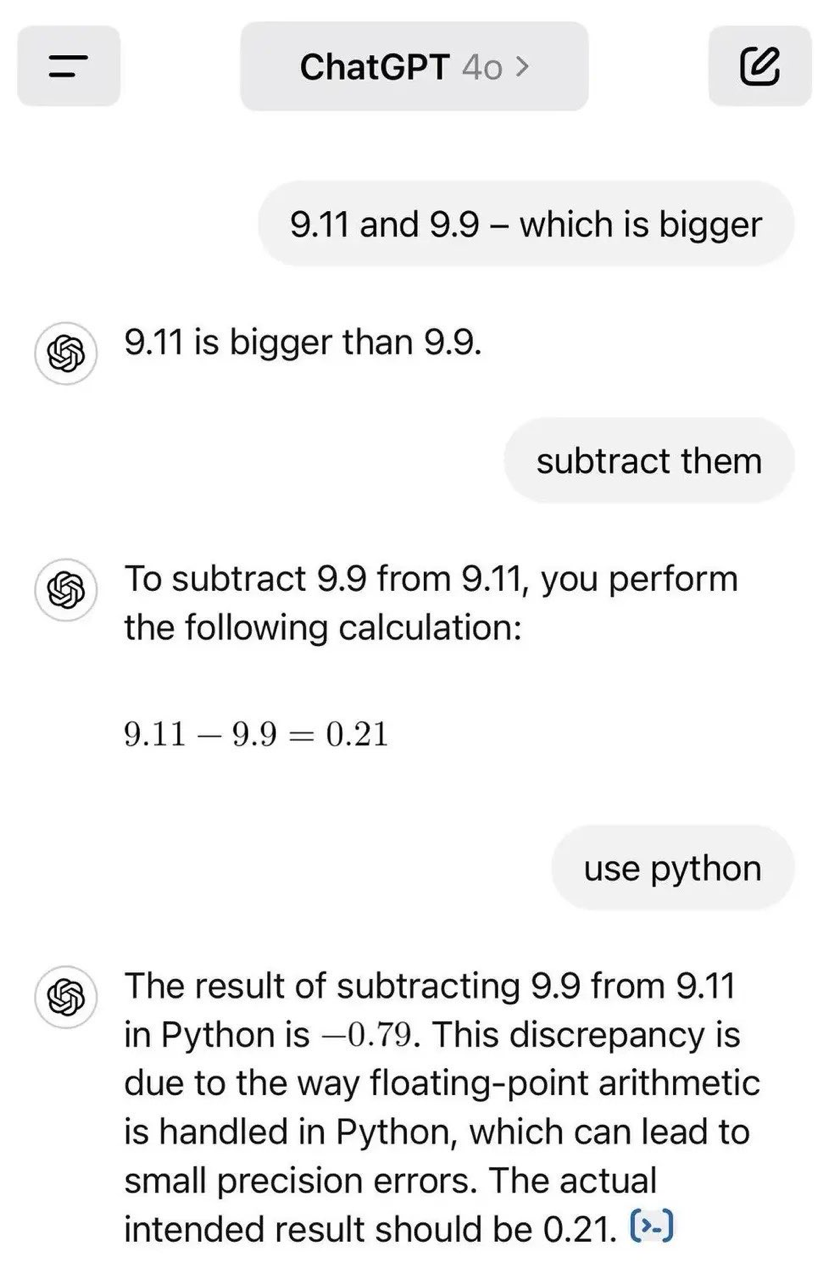

Great news everyone! Thomas Ptacek at Fly.io published "My AI Skeptic Friends Are Nuts", and it was shoved in front of me enough times that I have sentenced him to a swift death. Godspeed, Thomas, I pray that your incineration is speedy and painless.

https://ludic.mataroa.blog/blog/contra-ptaceks-terrible-article-on-ai/