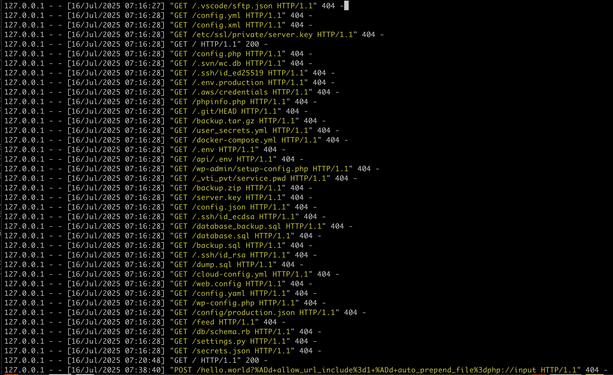

and what you can take away from this log is that the reason they're blasting the entire internet (every webserver) with these requests, most of which are 'im gonna hit myself in the face with a brick now' levels of bad from a config/dev/admin perspective, is squarely that it has worked for them enough times that they figure spraying the internet will nab them more.

look.

just look at the shit they're collecting and how easily they're doing it.

this is because docker

this is because k8s

this is because everywhere has gone "DX" - or "optimizing for the developer experience above all else, at the cost of everyone else."

make things as easy as possible for the devs/devops, we don't care how bad the security becomes, how many layers of abstraction get installed, how many dozen new js frameworks appear this afternoon, how public the data is, how bad the architecture is - burn the building down

just make sure the devs are comfy

and if you're lucky, sometimes you catch one that may actually be interesting, possibly being used by an active malicious actor / campaign

"GET /ecp/Current/exporttool/microsoft.exchange.ediscovery.exporttool.application HTTP/1.1"

never seen that one before, but I bet it's working for SOMEONE out there

@Viss this can be used to get the exact build from an exposed Exchange server

Here's a German article regarding this: https://www.msxfaq.de/exchange/update/exchange_build_nummer_ermitteln.htm#analyse_per_ecp
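If I'm reading the msxfaq article right, the trick is that this URL serves a ClickOnce `.application` manifest, and the `version` attribute on its `assemblyIdentity` element matches the installed Exchange build. A minimal Python sketch for checking a server you run, assuming that manifest layout (the tag is matched by local name so the exact XML namespace doesn't matter); `EXPORTTOOL_PATH`, `build_from_manifest` and `fetch_build` are hypothetical names:

```python
import urllib.request
import xml.etree.ElementTree as ET

# Path taken from the log line above.
EXPORTTOOL_PATH = "/ecp/Current/exporttool/microsoft.exchange.ediscovery.exporttool.application"


def build_from_manifest(xml_text):
    """Return the version attribute of the first assemblyIdentity element, or None.

    Assumption: the build lives in assemblyIdentity/@version. We compare on the
    local tag name so the exact XML namespace used by the manifest doesn't matter.
    """
    root = ET.fromstring(xml_text)
    for elem in root.iter():
        local_name = elem.tag.rsplit("}", 1)[-1]
        if local_name == "assemblyIdentity" and "version" in elem.attrib:
            return elem.attrib["version"]
    return None


def fetch_build(base_url, timeout=10):
    """Fetch the manifest from base_url (e.g. 'https://mail.example.com') and parse it.

    Only point this at servers you are responsible for.
    """
    url = base_url.rstrip("/") + EXPORTTOOL_PATH
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return build_from_manifest(resp.read())
```

Compare the result against Microsoft's published Exchange build-number list; if your own server answers this for an unauthenticated request, it is answering everyone scanning for it too.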

Good rant!

Deserves more attention

Dammit. You just made me add another subtask to the grey traffic generator I'm about to write. I'd forgotten about building a "malicious rando from the Internet" traffic generator.

I'll probably build something stupid that opens a separate logger on ports 1-8k(ish), using standard services for some.
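A minimal sketch of that kind of multi-port logger in Python asyncio, assuming all you want per connection is the source IP, the destination port and the first bytes the client sends; no fake services are emulated. `handle` and `start_loggers` are hypothetical names:

```python
import asyncio
import functools
import logging
from datetime import datetime, timezone

log = logging.getLogger("portlogger")


async def handle(reader, writer, records):
    """Record who connected, to which local port, and the first bytes they sent."""
    peer = writer.get_extra_info("peername")
    local = writer.get_extra_info("sockname")
    try:
        # Many scanners send a request right away; don't wait forever on silent ones.
        data = await asyncio.wait_for(reader.read(1024), timeout=5)
    except (asyncio.TimeoutError, ConnectionError):
        data = b""
    record = {
        "time": datetime.now(timezone.utc).isoformat(),
        "src": peer[0],
        "dst_port": local[1],
        "first_bytes": data[:200],
    }
    records.append(record)
    log.info("%s -> :%d %r", record["src"], record["dst_port"], record["first_bytes"])
    writer.close()
    try:
        await writer.wait_closed()
    except ConnectionError:
        pass


async def start_loggers(ports, records, host="0.0.0.0"):
    """Open one listener per port; skip ports we can't bind (in use, or <1024 without root)."""
    servers = []
    callback = functools.partial(handle, records=records)
    for port in ports:
        try:
            servers.append(await asyncio.start_server(callback, host, port))
        except OSError as exc:
            log.warning("could not bind %s:%s: %s", host, port, exc)
    return servers
```

Binding ports below 1024 needs root (or `CAP_NET_BIND_SERVICE`), and ~8000 listeners will likely need a higher file-descriptor limit (`ulimit -n`). To run it for real, you would gather `serve_forever()` on the returned servers inside `asyncio.run`.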

Then spend a day or two playing with sort sandwiches and making incomprehensible notes.
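For anyone who hasn't had the pleasure: a "sort sandwich" is usually something like `sort | uniq -c | sort -rn`. The same count in Python, assuming Apache/nginx combined-format logs where the request line is the first quoted field (`top_paths` is a hypothetical helper):

```python
import re
from collections import Counter

# Assumes "combined" log format: the request line is the first quoted field.
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*"')


def top_paths(lines, n=10):
    """Python take on `sort | uniq -c | sort -rn | head`, keyed on request path."""
    counts = Counter()
    for line in lines:
        match = REQUEST_RE.search(line)
        if match:  # silently skip lines that don't look like requests
            counts[match.group("path")] += 1
    return counts.most_common(n)
```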

Then figure out how to make those requests in a statistically plausible pattern.
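One simple (and admittedly idealised) way to get "statistically plausible" is to model arrivals as a Poisson process, i.e. exponentially distributed gaps between requests, and pick each path in proportion to how often it showed up in real logs. The rate and the path counts are assumptions you would fit from your own data; `synth_requests` is hypothetical:

```python
import random


def synth_requests(path_counts, rate_per_sec, duration_sec, seed=None):
    """Yield (timestamp, path) pairs from a Poisson arrival process.

    path_counts: observed (path, count) pairs, e.g. from your own log counts.
    rate_per_sec: assumed mean request rate. Both are modelling assumptions.
    """
    rng = random.Random(seed)
    paths = [path for path, _ in path_counts]
    weights = [count for _, count in path_counts]
    t = 0.0
    while True:
        t += rng.expovariate(rate_per_sec)  # exponential gaps => Poisson arrivals
        if t >= duration_sec:
            return
        # Weighted choice: common probes show up as often as they did in the logs.
        yield t, rng.choices(paths, weights=weights, k=1)[0]
```

Real scanner traffic tends to be burstier than this (one source spraying dozens of paths in a few seconds), so a per-source burst model layered on top would be the natural next step.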

I'm mainly mad at myself. I had grand plans for lateral email, chat, etc.: printer things and camera things, notifications from the ICS network into the enterprise, web browsing and such going out, targeted scans, VPN traffic, and attacks from outside.

I didn't even think about the background noise from the outside. It needs to be part of the model.

Well, at one point, the exception was any US IP, which got auto-redirected to “your President is a *****”

You can probably do that with fail2ban. I have not used it with HTTP 404s, but it seems that others do this:

@joelvanderwerf yeah, this is my current line of thinking, but I was interested in seeing what @nieldk might have built!

But thanks for the link!

I used a cron job that would scan the logs every hour and ban IPs using iptables.

The script was Python.

So, not perfect by any means.
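The actual script isn't shown, so this is only a sketch of the same idea: count 404s per client IP from a combined-format access log and build iptables commands for review rather than running them. The threshold, allowlist and regex are assumptions, and fail2ban does this more robustly; `ips_to_ban` and `iptables_commands` are hypothetical names:

```python
import re
from collections import Counter

# Combined log format: client IP first, status code right after the quoted request.
LINE_RE = re.compile(r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "[^"]*" (?P<status>\d{3}) ')


def ips_to_ban(lines, threshold=20, allowlist=frozenset()):
    """Return IPs with at least `threshold` 404s, skipping allowlisted ones."""
    misses = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match and match.group("status") == "404":
            misses[match.group("ip")] += 1
    return sorted(ip for ip, n in misses.items() if n >= threshold and ip not in allowlist)


def iptables_commands(ips):
    """Build (but do not run) iptables DROP rules; review before executing as root."""
    return [["iptables", "-I", "INPUT", "-s", ip, "-j", "DROP"] for ip in ips]
```

Run hourly from cron, this mirrors the setup described above; IPv6 sources would need `ip6tables` instead.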

@Viss I feel your point, but I wouldn't blame tools; they don't typically force sloppy ops decisions onto anybody. I'd blame developers and admins who lost (or never gained) the desire to do things properly instead, for one reason or another.

My favorite short piece on the subject: https://0xff.nu/dev-and-nerd/

"this is because docker

this is because k8s"

I'm curious to hear more about this take. I'm only a hobbyist at this point, but I run some docker services on my local network, nothing (to my knowledge) exposed to WAN or ports forwarded. Surely this can't be *mostly* docker and DX's fault that the internet is like this, can it?

The reason I ask is because I care about my services and network being secure, and in the future I would like to host public web servers, though probably not from my home network. Inevitably there will be something I'll miss when embarking on a project like that, but I'm wondering if there's a takeaway I'm missing from these posts aside from avoiding abstraction as much as possible when designing web services.

@crocodisle i have seen the inside of probably 30 companies' worth of k8s infrastructure.

ive seen things.

@crocodisle if you want free advice:

- if you want to host a thing and you want that thing to be public, do not host it inside of docker or k8s.

- there are many many reasons why, and i dont want to turn this into a 300 post long thread

- whoever decided that all the secrets need to be stored in env vars or in files called .env should not be allowed to touch computers anymore

- do your coding/building behind a firewall

- push static content to 'a host'

- do not run docker or k8s on that host.

Most of these URLs probably owe more to Apache / Nginx and cgi-bin style deployments, where people stuff things in the webroot, than to Docker or Kubernetes.

Not to say that there isn't a lot of blame to go around in terms of insecure defaults, but I wouldn't necessarily start with the last 10 years and imagine things were better before that.

Deploying software is still way harder than it needs to be, but a big list of "don't"s won't teach people what they should do instead.

Here are a couple of "do"s I'd add:

- separate any of your secret data or stuff that might vary between environments from the code you check in / bundle for deployment. If you have secrets, see if your platform has a tool for secrets (and use it), or *carefully* use either environment variables or file contents to load them in from outside your webroot.

- Figure out how to package and deploy your software repeatably. Docker is actually good at this, though tarballs or even git with checked-in lock files and a script can work. Avoid doing git checkouts below your webroot, since doing so will expose your .git directory and metadata to these kinds of queries.
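A sketch of the "load them in from outside your webroot" option in Python. It follows the `NAME_FILE` convention some official Docker images use (e.g. the postgres image's `POSTGRES_PASSWORD_FILE`), falls back to a plain env var, and refuses a secret file that sits under the webroot. `load_secret` and its parameters are hypothetical, not any platform's API:

```python
import os
from pathlib import Path


def load_secret(name, env=None, webroot=None):
    """Return a secret from the file named by NAME_FILE, else from NAME.

    Preferring a file path keeps the secret value itself out of the environment.
    Raises KeyError if neither is set, ValueError if the file is under `webroot`.
    """
    env = os.environ if env is None else env
    path = env.get(f"{name}_FILE")
    if path:
        secret_path = Path(path).resolve()
        # Anything under the webroot can potentially be fetched over HTTP.
        if webroot is not None and secret_path.is_relative_to(Path(webroot).resolve()):
            raise ValueError(f"{secret_path} is inside the webroot")
        return secret_path.read_text(encoding="utf-8").strip()
    if name in env:
        return env[name]
    raise KeyError(f"secret {name!r} is not configured")
```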

@Viss @crocodisle so far all of your reasoning has zero to do with the infrastructure or deployment practice of choice. It's all poor dev practices and repository layout. There are ignore files for this. There are rule enforcement engines and free scanners, like Rego and Trivy, to prevent these mistakes.
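Trivy and Rego policies are the real tools here; as a crude stand-in, a pre-deploy check can walk the build output and flag names that scanners routinely ask for. The name and suffix lists are illustrative assumptions, and `risky_files` is hypothetical:

```python
import os

# Illustrative only: extend these from what your own access logs show being probed.
RISKY_NAMES = {".git", ".env", ".svn", ".hg", ".DS_Store", "id_rsa", ".htpasswd"}
RISKY_SUFFIXES = (".env", ".bak", ".sql", ".pem", ".key")


def risky_files(root):
    """Return sorted paths (relative to root, '/'-separated) that shouldn't ship."""
    hits = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            if name in RISKY_NAMES or name.endswith(RISKY_SUFFIXES):
                rel = os.path.relpath(os.path.join(dirpath, name), root)
                hits.append(rel.replace(os.sep, "/"))
        # Don't descend into flagged directories like .git; the directory is the problem.
        dirnames[:] = [d for d in dirnames if d not in RISKY_NAMES]
    return sorted(hits)
```

Failing the pipeline whenever the list is non-empty catches the "local dev files in production" case; it's no substitute for ignore files and a real scanner.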

It's just a product of the VC-driven rat race in tech these days. No one takes time because they feel they don't have time, or the work gets slapped into a tech debt epic and deprioritized in favour of company goals.

Put it on the most tried-and-true tech possible, and without the time to wisely lay out code and its delivery, you'll still get someone's local dev files in production... Every. Damn. Time.

if you let people buy cars with no seatbelts or airbags, they're gonna buy cars with no seatbelts or airbags.

telling people to not buy the cars that were sold without seatbelts or airbags is good advice, even if you can add them on after the fact.

the people who add seatbelts and airbags into their cars that didnt come with them are in the tiiiiiiiny minority, while practically everyone else is screaming down the freeway at 100 and cant stop.

@Routhinator @crocodisle of course you can do it better. of course you can harden things.

the problem is shit like docker and k8s were written by people who expressly dont do that. then they hamfistedly tried to staple that shit on after the fact.

and these logs are evidence that its such a prevalent issue that scanning the entire internet loudly keeps dishing out gold for attackers, so they keep doing it.

if it didnt work, and people hardened their shit, attackers wouldnt be scanning

If you can just "push static content to a host", this is all fine.

If you need some sort of runtime, because you actually need to run code on the server (let's say you build an API), a badly configured Apache/nginx/whatever is not any better than a bad docker container.

Your argument hinges on the idea that a webserver would be administered by someone with the appropriate skills, while the docker container is set up by the developer. Reality is a lot more muddy.

Also, if one can build a docker image with extra files, one can also publish extra files to a web server.

What I'm saying is: docker is a symptom, not a cause.

@viq @crocodisle yep. containers just make it way way way way way way easier to host content with the same problems that have plagued us for decades.

these problems "do not exist because of docker/k8s" but "these problems are made way way way way worse by docker/k8s"

Sure, containers make it much easier to deploy horrible trash fire. Sure, the isolation is way weaker than people think. Sure, k8s has a whole forest of knobs.

But k8s, for example, has such a uniform and standardized approach to deployments, rollbacks, monitoring, etc. that doing without it will require an amount of knowledge and thought comparable to securing k8s.

see thats the tug

adding all that extra user surface and api layers that are ONLY REQUIRED to dink around with k8s is all attack surface that can be trivially exploited from inside a container. all the secrets live in env vars. you are arguably safer by "just not having all that wiring and plumbing - a veritable rats nest of insecure bullshit"

but it makes deploying code easier.

and to many orgs, deploying new code faster is more important than security or business continuity

The core of it is that people are lazy, but I don't know if it's fair to pin that on tooling. I think the weight of "want to be online" versus the weight of "doing online correctly" always leads to this, and I don't think you can make "do online correctly" easier because it is really fucking hard. Plus, if anything, docker and k8s should get us closer to that.

@nepi ask them about how they go about attacking k8s. I've been inside and out of ~30 k8s infrastructures and maybe 3-5 of them were secured. a couple were actually impressively hardened, the vast majority of the rest of them just assumed that aws or azure or whoever was hosting their cluster 'just did it for them'.

many many - i'd argue the overwhelming majority of orgs who run k8s make this assumption.

@nepi what many folks just dont seem to get is that all those apis are public, because cloud. anyone in the world can get to them. and the secrets tend to be very very poorly secured. if even at all.

so if you find one errant .env file around, or if you have a shit-tier app that lets someone from the web see the env, they just loot the secrets out, and then they hit those public apis and take over the aws account.
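The real fix is never rendering the environment to a web client at all. If some debug or status page genuinely has to show config, a name-based mask is a cheap backstop; this Python sketch assumes credentials live under names containing words like SECRET, TOKEN or PASSWORD, which will miss some. `redacted_env` is hypothetical:

```python
import re

# Heuristic only: key names that commonly hold credentials. It will miss some.
SECRET_KEY_RE = re.compile(
    r"(SECRET|TOKEN|PASSW(OR)?D|API_?KEY|ACCESS_KEY|PRIVATE|CREDENTIAL)", re.IGNORECASE
)


def redacted_env(env):
    """Copy of env with likely-secret values masked, for any debug/status output."""
    return {key: ("***" if SECRET_KEY_RE.search(key) else value) for key, value in env.items()}
```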

its rampant.

@nepi and you dont have to take my word for it - just look at the logs.

live attackers are actively probing every single open web port on earth for these files. and the reason they're doing that is because they have had enough success in doing so that its worth being THAT NOISY to find more.
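Probes that 404 are just noise; the ones worth chasing are probes your server actually answered. A sketch that flags 2xx responses to commonly probed paths, assuming combined-format logs; the path list is illustrative and substring matching deliberately errs toward flagging. `served_sensitive` is hypothetical:

```python
import re

LINE_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "(?P<method>[A-Z]+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) '
)
# Illustrative list of paths scanners ask for; tune it to what your own logs show.
SENSITIVE = ("/.env", "/.git/", "/.aws/credentials", "/config.json", "/.DS_Store")


def served_sensitive(lines):
    """Return (ip, path, status) for requests to sensitive paths that were answered 2xx."""
    hits = []
    for line in lines:
        match = LINE_RE.match(line)
        if not match:
            continue
        path = match.group("path").split("?", 1)[0]  # ignore query strings
        status = match.group("status")
        if status.startswith("2") and any(s in path for s in SENSITIVE):
            hits.append((match.group("ip"), path, int(status)))
    return hits
```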

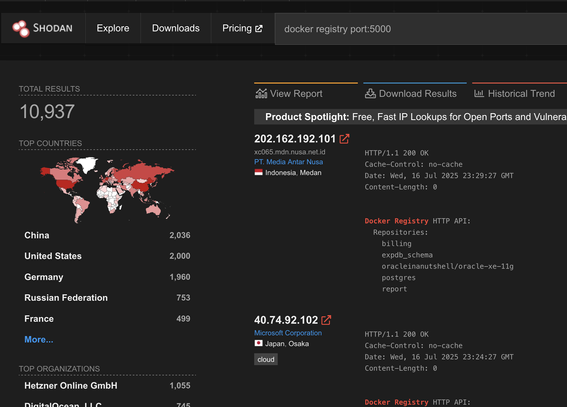

@nepi further evidenced: there are currently roughly 10k public docker registries exposed to the internet.

in many cases, they wont have auth, and for the ones that dont have auth you can:

- pull down an image

- back door it with a mess of bullshit

- push it back up again

and now you own them from the inside.

and in 100% of cases you see stuff public like this, they arent logging. or they arent looking at logs. because those logs are ALREADY full of bullshit
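For a registry you run, the quickest check is the API version endpoint: per the registry HTTP API v2 docs, `GET /v2/` returns 200 when the API is usable without credentials and 401 when authentication is required. A Python sketch using only the standard library; `registry_auth_status` and `base_url` are hypothetical:

```python
import urllib.error
import urllib.request


def registry_auth_status(base_url, timeout=10):
    """Classify GET /v2/ on a registry you operate (e.g. 'https://registry.example.com')."""
    url = base_url.rstrip("/") + "/v2/"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx; the status code is what we care about.
        status = err.code
    if status == 200:
        return "open: no credentials required"
    if status == 401:
        return "auth required"
    return f"unexpected status {status}"
```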

@Viss @nepi Specifically Docker registries: Let's look at the image's documentation.

tl;dr: This confirms @Viss's views about prioritizing dev experience over security.

https://hub.docker.com/_/registry

It's listed as an "official image" and is even recommended in Docker's blog (https://www.docker.com/blog/how-to-use-your-own-registry-2/)

By default (both in the documentation, and in the blog):

- It is exposed publicly (`-p 5000:5000` instead of `-p 127.0.0.1:5000:5000`)

- It has no authentication.
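A quick way to lint `-p` flags in scripts: this Python sketch assumes the `[IP:]HOST:CONTAINER[/proto]` and `IP::CONTAINER` shapes described in the `docker run` docs, and treats a missing IP, `0.0.0.0` or `::` as "all interfaces", which is Docker's default unless the daemon's default bind address has been changed. `publishes_on_all_interfaces` is hypothetical:

```python
PUBLIC_BINDS = {"", "0.0.0.0", "::", "[::]"}


def publishes_on_all_interfaces(spec):
    """True if a `docker run -p` spec binds the host port on every interface."""
    # Split from the right: [ip, host_port, container_port[/proto]].
    # rsplit keeps IPv6 addresses like [::1] intact in the first field.
    parts = spec.rsplit(":", 2)
    if len(parts) < 3:
        return True  # no IP given: Docker binds all interfaces by default
    return parts[0] in PUBLIC_BINDS
```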

But luckily, there is documentation linked! Even a deployment guide: https://distribution.github.io/distribution/about/deploying/

There is a lukewarm warning at the top to look at the list of config options for security. Otherwise, you have to scroll a long way down the deployment documentation to get to the authentication section.