🚀 New blog post: How to implement local embeddings using ONNX with Semantic Kernel in .NET for RAG solutions!

💡 Real-world use cases, full code, model comparisons & more.

I was testing out some real-time video matting in #maxmsp that we developed for an ongoing project, and realized that the “sunset.jpg” demo file was a nice match for this memorable dialogue in Romero’s “Night of the Living Dead”… If it weren’t for all those zombies outside (and some inside the video), it could have been such a lovely campfire scene!

https://www.youtube.com/watch?v=vKvdrXMDPPE

More info: https://vincentgoudard.com/real-time-video-matting-in-max/

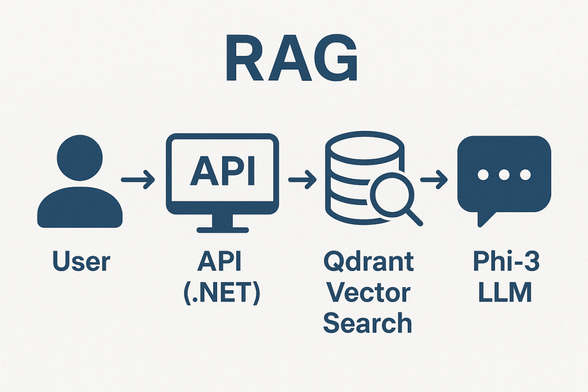

🚀 Build a real RAG API with .NET 8, Semantic Kernel, Phi-3, and Qdrant!

🎯 Enrich LLMs with real product data

⚙️ Local ONNX inference with Phi-3

🔥 Full API endpoints ready!

Dive into the architecture 👉 https://elguerre.com/2025/04/22/%f0%9f%9a%80-building-a-rag-api-with-net-semantic-kernel-phi-3-and-qdrant-enrich-your-e-commerce-experience/

#DotNet #SemanticKernel #RAG #Qdrant #Phi3 #AI #MachineLearning #ONNX

Learn how to build a powerful RAG (Retrieval-Augmented Generation) API using .NET, Microsoft Semantic Kernel, Phi-3, and Qdrant. Combine your private e-commerce data with LLMs to create smarter, gr…

🚀 Boost Your AI Career with ONNX Framework AI Certification from EdChart!

🔗 Take the Free Certification Test : https://www.edchart.com/certificate/onnx-framework-ai-certification-exam-free-test

🏅 Earn a Credly-Verified Badge : https://www.credly.com/org/edchart-technologies/badge/edchart-certified-onnx-framework-ai-subject-matter-

The ONNX Framework AI Certification is your gateway to mastering AI model interoperability across platforms like PyTorch, TensorFlow, and Caffe2.

#ONNX #AIcertification #MachineLearning #DeepLearning #ArtificialIntelligence #ONNXRuntime #EdChart #Credly #OpenSourceAI #MastodonTech

Just pushed another batch of 224 NuGet packages totalling ~6 GB to nuget.org, with native libraries for ONNX Runtime 1.21.0, CUDA 12.8.1, cuDNN, TensorRT, etc. They are part of my "NtvLibs" project, which aims for finer-grained distribution of these libraries and splits large DLLs across multiple packages so they can be hosted on nuget.org.

NVIDIA downloads are getting crazy: with every new GPU architecture the size increases ~1.5x. That's the true exponential development of NVIDIA. 😅

#Intel should figure out their #ml strategy because they have:

1. #OpenVino plugins for GPU and NPU,

2. #OpenXLA plugin for GPU

3. #ipex for PyTorch

4. intel-npu-acceleration-library for PyTorch

5. oneDNN neural network math kernels

And for #ONNX they have both OpenVino and oneDNN runtimes.

Best of all, I haven't reliably gotten the NPU to work using any permutation of them, lol.

Released v1.0 of TinyONNXViewer, an app I developed as a personal project

https://qiita.com/guardman/items/90584e0792ca13b4d325?utm_campaign=popular_items&utm_medium=feed&utm_source=popular_items

Turns out, rewriting #ONNX-based inference that ran inside a #nodejs process in #rust can speed things up *a lot*. Like, on my Ryzen laptop, without any real optimization effort, by a factor of 4-5. I'm really surprised; I figured the ONNX runtime would be the limiting factor and that it'd be similarly efficient both ways. 🤷

Also if you haven't, give #napiRs a try, it is ✨🫶✨

ONNX GenAI Connector for Python (Experimental).

https://buff.ly/4gupKPS

#python #ai #generativeai #onnx #genai #semantickernel

ONNX GenAI Connector for Python (Experimental): With the latest update we added support for running models locally with onnxruntime-genai. The onnxruntime-genai package is powered by the ONNX Runtime in the background, but first let’s clarify what ONNX, ONNX Runtime and ONNX Runtime-GenAI are. ONNX: ONNX is an open-source format for AI models, both for […]