https://huggingface.co/papers/2512.15603 #QwenImageLayered #ImageEditing #DiffusionModels #TechHumor #HackerNews #ngated

AI is intellectual Viagra

and it hasn't left me so I am exorcising it here. I'm sorry in advance for any pain this might cause.

#AI #GenAI #GenerativeAI #LLMs #DiffusionModels #tech #dev #coding #software #SoftwareDevelopment #writing #art #VisualArt

Meta's V-JEPA model learns physical intuition from everyday videos, signaling new progress for self-supervised video AI and safer robotics.

https://www.aistory.news/generative-ai/meta-v-jepa-model-shows-intuitive-physics-from-video/

Think it through. If you don't accept the use of climate-destroying, electricity-and-fresh-water-sapping, job-destroying, economy-thrashing--and yet mediocre or poorly performing!--technology created by multi-trillion-dollar sociopathic entities, then you are preventing people with less privilege than you have from living their best lives. You are preventing them from learning how to code. You are preventing them from obtaining coveted jobs in the tech sector. You are preventing them from having access to information. You, personally, are responsible for all this. Not the multi-trillion-dollar sociopathic entities who've not only created this technology and forced it on us but contributed to creating the less-privileged conditions of the people you are supposedly responsible for with your individual choices. Not the governments that neglected to enforce existing laws that would have prevented such multi-trillion-dollar sociopathic entities from forming in the first place, let alone creating such a technology--while also creating the conditions that led to people being less privileged. No, they are not responsible. You are. I am.

That doesn't make any sense.

Neoliberalism's greatest trick has been to shift responsibility for any problems away from the powerful and onto individuals who are not empowered to fix anything, all while convincing everyone that this is right and proper. Large corporations do not cause a plastic pollution problem; you and I do, by not separating our recycling. Large corporations, governments and militaries do not cause CO2 pollution and climate damage; you and I do, by using incandescent lightbulbs and non-electric/non-hybrid cars or eating meat. Lack of regulation and large agribusiness practices are not to blame for poor food quality; you and I are, for buying what they sell instead of going organic and joining a CSA. Etc. ad infinitum. Large, powerful entities routinely generate a problem, then tell you and me that we are responsible for the problem as well as for fixing it. Never mind that these entities could nudge their own behavior a bit and move the needle on the problem far more than masses of people could no matter how organized they were. Never mind that these entities could be constrained from causing such problems in the first place.

We are watching a new variation of this pattern come into being right in front of our eyes with AI. We should stop accepting these fictions. You are neither ableist nor a gatekeeper for resisting AI. You are, instead, attempting to forestall the further degradation of conditions for everyone, a degradation that starts this same cycle anew.

#AI #GenAI #GenerativeAI #LLM #DiffusionModels #neoliberalism #depoliticization

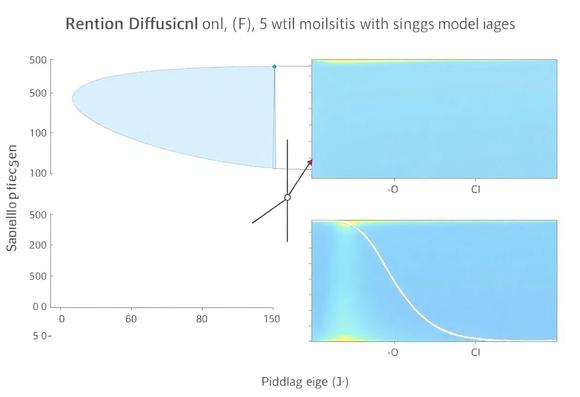

NeurIPS 2025 revealed a turning point: the top four papers argue that bigger isn't always better. From revamped attention mechanisms to leaner diffusion models and smarter RL benchmarks, researchers are redefining performance metrics. Curious how the AI community is shifting focus? Dive into the highlights and see what's next for open-source innovation. #NeurIPS2025 #LLM #DiffusionModels #BenchmarkRevisions

🔗 https://aidailypost.com/news/neurips-2025-top-4-papers-highlight-shift-from-bigger-models-limits

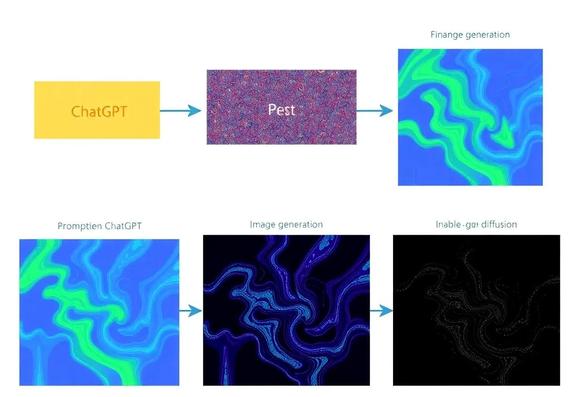

There's an animated GIF here showing text and image being generated at the same time.

https://github.com/tyfeld/MMaDA-Parallel

#solidstatelife #ai #genai #computervision #diffusionmodels #llms

GitHub - tyfeld/MMaDA-Parallel: Official Implementation of "MMaDA-Parallel: Multimodal Large Diffusion Language Models for Thinking-Aware Editing and Generation"

Some of the pathological beliefs we attribute to techbros were already present in this view of statistics that started forming over a century ago. Our writing is just data; the real, important object is the “hypothetical infinite population” reflected in a large language model, which at base is a random variable. Stable Diffusion, the image generator, is called that because it is based on latent diffusion models, which are a way of representing complicated distribution functions--the hypothetical infinite populations--of things like digital images. Your art is just data; it’s the latent diffusion model that’s the real deal. The entities that are able to identify the distribution functions (in this case tech companies) are the ones who should be rewarded, not the data generators (you and me).

So much of the dysfunction in today’s machine learning and AI points to how problematic it is to give statistical methods a privileged place that they don’t merit. We really ought to be calling out Fisher for his trickery and seeing it as such.

#AI #GenAI #GenerativeAI #LLM #StableDiffusion #statistics #StatisticalMethods #DiffusionModels #MachineLearning #ML