A dataset is typically a collection of related data compiled from sources such as public records, research studies, or crowd-sourced information. ML practitioners use datasets to train algorithms to recognize specific patterns or make predictions, which improves the overall performance of their models.

Below is a list of the top websites that provide datasets...

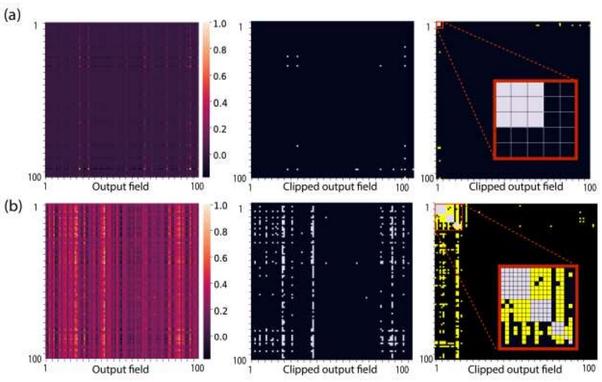

📝 Did you know that pruning can revolutionize your neural networks? In this post, we explore how pruning models can optimize memory usage and improve efficiency. Discover practical strategies and see how to save resources without compromising performance! Click to learn more!

#MachineLearning #AI #NeuralNetworks

https://inkdesign.com.br/pruning-eficiente-reduz-custo-de-memoria-em-redes-neurais/?fsp_sid=57772
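As a rough illustration of the idea in the post above, here is a minimal sketch of magnitude pruning, one common pruning technique. It is not taken from the linked article: the function names `magnitude_prune` and `to_sparse`, the flat list of weights, and the `sparsity` parameter are all assumptions made for this example. It zeroes the smallest-magnitude weights and then stores only the survivors, which is where the memory saving would come from.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction zeroed.

    Hypothetical helper for illustration; `sparsity` is the fraction
    of weights to remove, between 0.0 and 1.0.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0.0 and 1.0")
    n_prune = int(len(weights) * sparsity)
    # Rank weight indices from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def to_sparse(weights):
    """Keep only non-zero weights as an {index: value} mapping."""
    return {i: w for i, w in enumerate(weights) if w != 0.0}


if __name__ == "__main__":
    dense = [0.5, -0.01, 0.2, 0.03, -0.9, 0.002]
    pruned = magnitude_prune(dense, sparsity=0.5)
    print(pruned)
    print(to_sparse(pruned))
```

In practice, a pruned network is usually fine-tuned afterwards to recover accuracy, and the real memory benefit depends on how the sparse weights are stored.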

#NeuralNetworks pre-trained with #RetinalWaves, spontaneous activity in the early #VisualSystem, predict motion faster and more accurately. Julijana Gjorgjieva and her team showed this phenomenon in simulations and real-world scenes: http://go.tum.de/361879

@ERC_Research

📷A.Eckert

Stress and Resilience: How Your Brain Responds to Challenges and Restores Balance

#BrainScience #Neuroscience #Neurotransmitters #StressManagement #Memory #BrainHealth #Neuroplasticity #MindAndBody #MentalHealth #BrainFunctions #NeuralNetworks #CognitiveScience

All our articles posted here:

https://ml-nn.eu/articles.html

Horovod is an open-source library designed for distributed training of deep learning models across multiple GPUs and nodes. It is framework-agnostic and integrates seamlessly with popular machine learning libraries such as TensorFlow, PyTorch, Keras...

Weekly Update from the Open Journal of Astrophysics – 07/06/2025

It’s Saturday so once again it’s time for the weekly update of papers published at the Open Journal of Astrophysics. Since the last update we have published two new papers, which brings the number in Volume 8 (2025) up to 69 and the total so far published by OJAp is now up to 304.

The two papers published this week, with their overlays, are as follows. You can click on the images of the overlays to make them larger should you wish to do so.

The first paper to report is “Chemical Abundances in the Leiptr Stellar Stream: A Disrupted Ultra-faint Dwarf Galaxy?” by Kaia R. Atzberger (Ohio State University) and 13 others based in the USA, Germany, the UK, Sweden, Australia, Canada and Brazil. This one was published on 2nd June 2025 and is in the folder marked Astrophysics of Galaxies. It presents a spectroscopic study of stars in a stellar stream, suggesting that the stream originated from the accretion of a dwarf galaxy by the Milky Way.

The overlay is here:

You can read the final accepted version on arXiv here.

The second paper is “Scaling Laws for Emulation of Stellar Spectra” by Tomasz Różański (Australian National University) and Yuan-Sen Ting (Ohio State University, USA). This was published yesterday, i.e. on 6th June 2025, and is in the folder Instrumentation and Methods for Astrophysics. The paper discusses certain scaling models and their use to achieve optimal performance for neural network emulators in the inference of stellar parameters and element abundances from spectroscopic data.

The overlay is here:

You can find the officially-accepted version of the paper on arXiv here.

That’s the papers for this week. I’ll post another update next weekend.

As a postscript I have a small announcement about our social media. Owing to the imminent demise of Astrodon, we have moved the Mastodon profile of the Open Journal of Astrophysics to a new instance, Fediscience. You can find us here. The old profile currently redirects to the new one, but you might want to update your links as the old server will eventually go offline.

#arXiv241017312v2 #arXiv250318617v2 #AstrophysicsOfGalaxies #DiamondOpenAcccess #InstrumentationAndMethodsForAstrophysics #MilkyWay #neuralNetworks #OpenJournalOfAstrophysics #spectroscopy #stellarSpectra #StellarStreams #TheOpenJournalOfAstrophysics

From the https://freenet.org/ Matrix room.

Machine Learning Libraries: TensorFlow, PyTorch & scikit-learn

[..]behind every successful machine learning model lies a robust library or framework that simplifies the process of building, training, and deploying[..]