It's already the last talk of #CCLS2025 😱

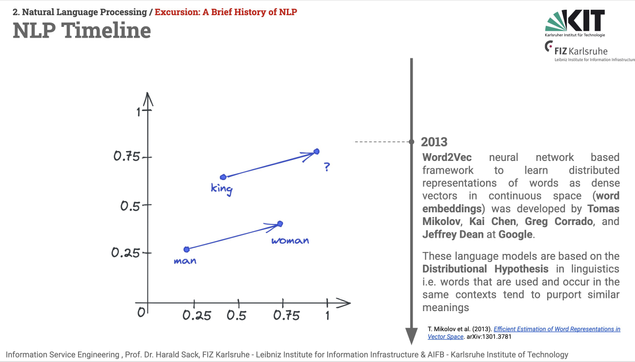

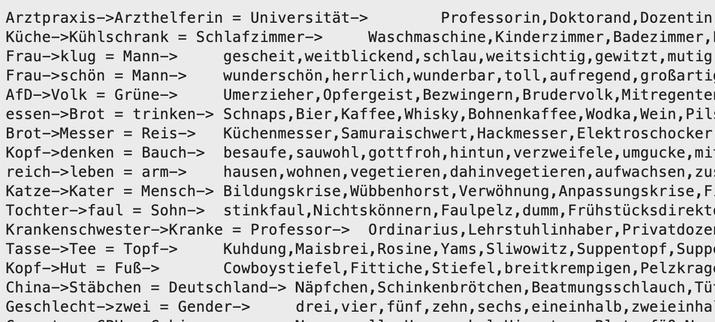

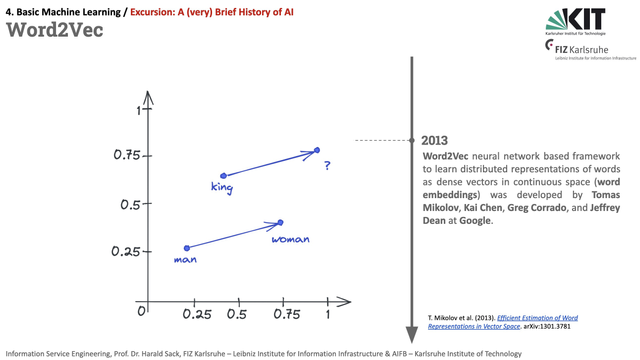

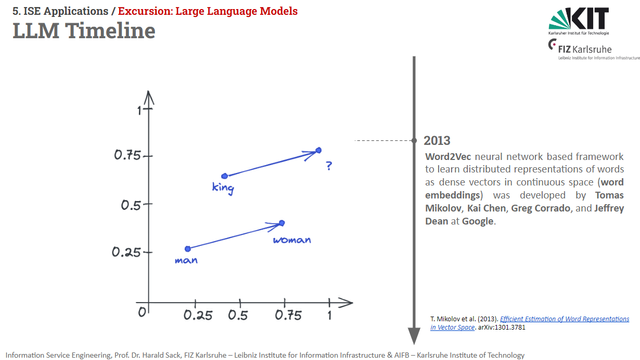

Yuri Bizzoni, Pascale Feldkamp, Kristoffer L. Nielbo: Encoding Imagism? Measuring Literary Imageability, Visuality and Concreteness via Multimodal Word Embeddings (https://doi.org/10.26083/tuprints-00030154)

#Measuring #LiteraryImageability #WordEmbeddings