New preprint on our "collaborative modelling of the brain" (COMOB) project. Over the last two years, a group of us (led by @marcusghosh) have been working together on a computational neuroscience research project, openly and online, with anyone free to join.

https://www.biorxiv.org/content/10.1101/2024.07.19.604252v1

This was an experiment in a more bottom-up, collaborative way of doing science, rather than the hierarchical PI-led model. So how did we do it?

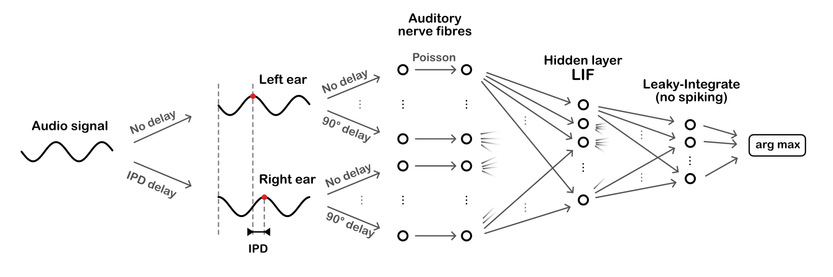

We started from the tutorial on spiking neural networks that I gave at @CosyneMeeting 2022, which included a starter Jupyter notebook for training a spiking neural network model on a sound localisation task.

https://neural-reckoning.github.io/cosyne-tutorial-2022/

https://www.youtube.com/watch?v=GTXTQ_sOxak&list=PL09WqqDbQWHGJd7Il3yVxiBts5nRSxvJ4&index=1

Participants were free to use and adapt this to any question they were interested in (we gave some ideas for starting points, but there was no constraint). Participants worked in groups or individually, sharing their work on our repository and joining us for monthly meetings.

The repository was set up to automatically build a website using @mystmarkdown showing the current work in progress of all projects, and (later in the project) the paper as we wrote it. This kept everyone up to date with what was going on.

https://comob-project.github.io/snn-sound-localization/

We started from a simple feedforward network of leaky integrate-and-fire neurons, but others adapted it to include learnable delays, alternative neuron models, biophysically detailed models, Dale's law, etc.
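For readers who haven't met a leaky integrate-and-fire neuron before, here is a minimal sketch of a single one. This is not the project's code: the function name `simulate_lif`, the parameter values and the simple reset rule are all illustrative assumptions. The membrane potential decays with time constant `tau`, adds up its input, and emits a spike and resets when it crosses a threshold.

```python
import numpy as np

def simulate_lif(input_current, tau=20.0, dt=1.0, threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron (illustrative sketch).

    input_current: 1D array, input added to the membrane at each time step.
    tau, dt: membrane time constant and step size in the same (assumed) units.
    Returns the membrane trace (after any reset) and a boolean spike train.
    """
    alpha = np.exp(-dt / tau)  # fraction of the potential kept per step
    v = 0.0
    voltages = np.zeros(len(input_current))
    spikes = np.zeros(len(input_current), dtype=bool)
    for t, current in enumerate(input_current):
        v = alpha * v + current   # leak, then integrate the input
        if v >= threshold:        # fire and reset
            spikes[t] = True
            v = v_reset
        voltages[t] = v
    return voltages, spikes

# Same constant input, two time constants: the slower neuron accumulates
# enough input to fire, the faster one leaks too quickly to reach threshold.
drive = np.full(200, 0.1)
_, slow_spikes = simulate_lif(drive, tau=20.0)
_, fast_spikes = simulate_lif(drive, tau=2.0)
print(slow_spikes.sum(), fast_spikes.sum())
```

The time constant sets how long the neuron "remembers" past input, which is the knob varied in the time constant results mentioned in this thread.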

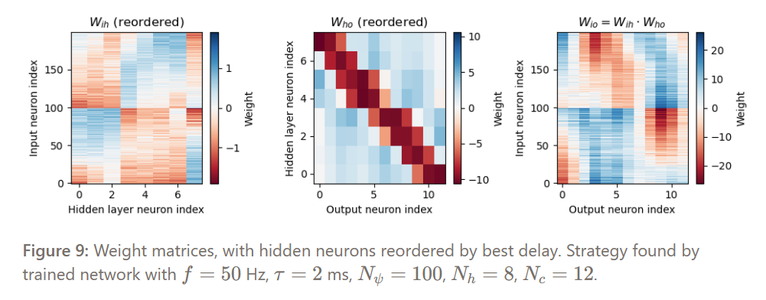

We found some interesting results, including that shorter time constants improved performance (consistent with what we see in the auditory system). Surprisingly, the network seemed to be using an "equalisation cancellation" strategy rather than the expected coincidence detection.

Ultimately, our scientific results were not incredibly strong, but we think this was a valuable experiment for a number of reasons. Firstly, it shows that there are other ways of doing science. Secondly, many people got to engage in a research experience they otherwise wouldn't. Several participants have been motivated to continue their work beyond this project. It also proved useful for generating teaching material, and a number of MSc projects were based on it.

With that said, we learned some lessons about how to do this better, and yes, we will be doing this again (call for participation in September/October hopefully). The main challenge will be to keep the project more focussed without making it top-down / hierarchical.

We believe this is possible, and we are inspired by the recent success of the Busy Beaver challenge, a bottom-up project of amateur mathematicians that found a proof of a 40-year-old conjecture.

https://www.quantamagazine.org/amateur-mathematicians-find-fifth-busy-beaver-turing-machine-20240702/

We will be calling for proposals for the next project, engaging in an open discussion with all participants to refine the ideas before starting, and then inviting the proposer of the most popular project to act as a 'project lead', keeping it focussed without being hierarchical.

If you're interested in being involved in that, please join our (currently fairly quiet) new Discord server, or follow me or @marcusghosh for announcements.

https://discord.gg/kUzh5MHjVE

I'm excited for a future where scientists work more collaboratively, and where everyone can participate. Diversity will lead to exciting new ideas and progress. Computational science has huge potential here, something we're also pursuing at @neuromatch.

Let's make it happen!

#neuroscience #computationalscience #computationalneuroscience #compneuro #science #metascience #SpikingNeuralNetworks #auditory