If you want to be part of The Resistance,

it will cost you your smart speaker, your Ring doorbell, your smart glasses, and your AI assistants.

Mass surveillance is incompatible with revolution. Get rid of it.

The charger for my wife's hearing aids has a weird accessibility fail.

The charger is a little black plastic box that plugs into the wall. It has a slot for each hearing aid and a lid that snaps shut. Getting the hearing aids into position so that they will charge is rather fiddly - if they're slightly out of place, they won't charge. They each have a light that turns on when they're charging, but closing the lid of the case will often dislodge one sufficiently to break the connection.

Someone involved with the design of the charger or the hearing aids clearly recognized this problem, so there's a backup mechanism. It seems that if one hearing aid is charging but the other isn't, after a few minutes it starts making a sound.

A very quiet sound.

It sounds, roughly, like a single lonely cricket somewhere outside.

A sound which no one who needed the hearing aids could possibly perceive while not wearing the hearing aids.

This creates a weird dynamic where I occasionally have to communicate to my wife "crickets" so that she knows to fix the issue.

And all of this could be much more easily solved by just making the lid of the box out of clear plastic. Or adding a light on the outside of the box to indicate that the connection is bad.

(And writing this, I'm realizing that this was probably a heroic attempt at a software fix to a hardware problem. A fix which even sort of works for us. It's still a definite fail though.)

In honor of the late Robert Redford, "Sneakers", in high def ANSI with full subtitles:

ssh sneakers@ansi.rya.nc

(needs a terminal with 24 bit color support)

When giving #security guidance to developers, be sure to impart an understanding of the underlying problem, not just which APIs to use or avoid. If you don't do this, enterprising developers will often reintroduce the problem by adding the problematic capabilities to an otherwise safe API.

To give a specific example, and to try to atone for some of my past sins:

The underlying problem in unsafe deserialization that leads to remote code execution is user-provided data telling your application what type it wants to be. When data can choose what type it is, it can choose types that have exploitable side effects in their constructors, setters, or destructors. Polymorphic deserializers are inherently unsafe.

In the past I've told people "use this API, it's safe". But when that API is safe because it doesn't allow polymorphism, developers inevitably modify the API to add polymorphism when it makes the overall design of the application simpler.
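To make the difference concrete, here is a minimal sketch in Python (not .NET, and not any real library's API). Every name in it is hypothetical and chosen for illustration: the `$type` key, the `REGISTRY` table, `load_polymorphic`, `load_as`, and the `TempFile` class, which stands in for a type with a side effect in its constructor and only records the path it was given.

```python
import json
from dataclasses import dataclass


@dataclass
class Profile:
    """The type the application actually expects to receive."""
    name: str
    email: str


class TempFile:
    """Hypothetical 'gadget': a type whose constructor has a side effect.

    It only records the path here; a real gadget might delete or open it.
    """
    touched = []

    def __init__(self, path):
        TempFile.touched.append(path)


# Hypothetical table of every type the deserializer knows how to build.
REGISTRY = {"Profile": Profile, "TempFile": TempFile}


def load_polymorphic(raw):
    """Unsafe pattern: the data names its own type via a '$type' key."""
    doc = json.loads(raw)
    cls = REGISTRY[doc.pop("$type")]
    return cls(**doc)


def load_as(cls, raw):
    """Safer pattern: the caller fixes the type; type tags are rejected."""
    doc = json.loads(raw)
    if "$type" in doc:
        raise ValueError("type tags are not accepted")
    return cls(**doc)


if __name__ == "__main__":
    attack = '{"$type": "TempFile", "path": "/some/important/file"}'
    load_polymorphic(attack)   # the attacker chose TempFile, so its constructor ran
    print(TempFile.touched)
    try:
        load_as(Profile, attack)
    except ValueError as err:
        print("rejected:", err)
```

The point of the sketch is that `load_as` is only safe because it has no way for the data to pick a type. Adding a "small" type-tag feature to it turns it back into `load_polymorphic`.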

The portion of the #OWASP #serialization cheat-sheet on .NET is based on a talk I gave in 2017 before I understood this problem. (https://cheatsheetseries.owasp.org/cheatsheets/Deserialization_Cheat_Sheet.html#net-csharp )

It's much more difficult, but training developers to write secure code requires teaching them what the real problems are.

After learning yesterday about the island of Stroma, I've become increasingly convinced that it needs to be the setting for some sort of survival horror game.

I mean, it's an entire abandoned island, with an abandoned 19th century village (Nethertown!), mostly still standing, that's frequently unreachable. And the map just feels perfect*.

The only real drawback I'm seeing is that it feels like the monster would have to be hungry sheep.

*map taken from Wikimedia's copy of the 2013 UK Ordnance Survey - https://commons.wikimedia.org/wiki/File:Stroma_OS_map.png

We have to keep extra chairs near our computers for the cats. (Bandit is content with a folded-up sheet on the coffee table.)

This is necessary in large part because Minnie (the black cat in the foreground) has made a game of trying to jump into chairs just before I sit in them. If I wait until she's settled though, I can move that chair with her on it and swap a different one into its place.

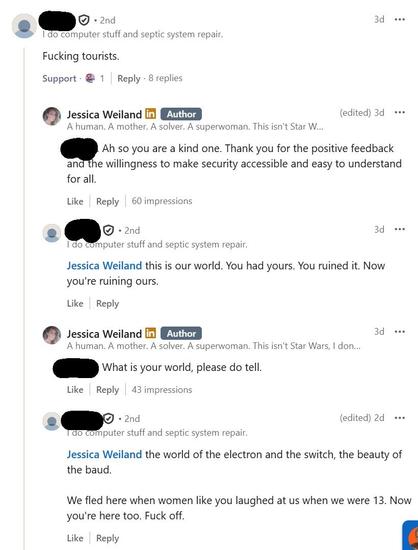

Guys,

Please.

Be better than the commenters.

I want to make one thing clear: Women get a seat at the table. You do not get to push us into a corner. This isn't "your world". We are not marketing pawns. We are not going to "submit to our husbands" and play coy. We are not all one size, shape, color, mindset, beauty standard. I thought we were past this. But it's clear. We aren't. And that needs to change. Ada Lovelace. Grace Hopper. Radia Perlman. Katherine Johnson. Annie Easley. Hedy Lamarr. Elizabeth Feinler. Margaret Hamilton. Karen Sparck. Women are part of the backbone of modern technology and computer networking as we know it. So tell them your thoughts about women in the industry and see what they say to your vision of "yours" | 211 comments on LinkedIn