JCelades

@Jorgito_@infosec.exchange

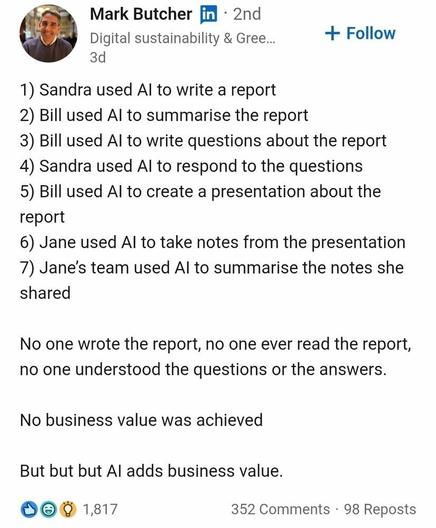

They are starting to get it ...

Have you ever seen a post or thread that you want to look at again after a certain amount of time?

There is a Fediverse reminder bot which you can use to remind you about a particular thread after a specified time period (it can be minutes, hours, days, weeks, months or years later).

To use the bot, reply to the post or thread you want reminding about, mentioning the bot's account with the amount of time you want. More info and instructions in the guide:

➡️ https://fedi.tips/is-there-a-reminder-bot-for-mastodon-and-the-fediverse

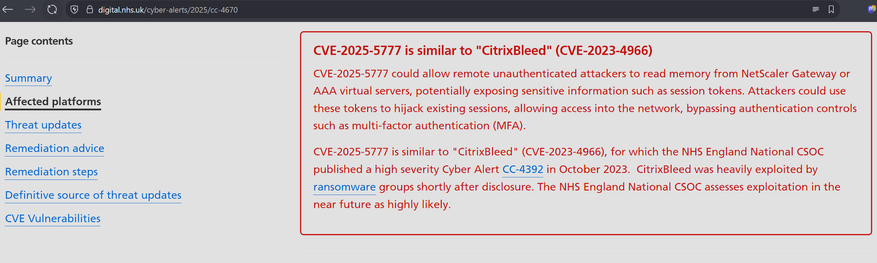

NHS Digital's cyber alert database has been updated too. https://digital.nhs.uk/cyber-alerts/2025/cc-4670

I highly recommend bookmarking this site for the alerts; they're really good at filtering out noise:

https://digital.nhs.uk/cyber-alerts

E.g., if you select the 'high' category, there's only about one alert a month on average.

My favorite technique in my ethical hacking work is phone-call-based hacking with impersonation. Why? Because it has the highest success rate. This is what we're seeing in the wild right now, too.

Let's talk about how phone call attackers think and how to catch Scattered Spider style attacks against insurance companies (which are heavily targeted right now, Aflac most recently):

1. *Impersonating IT and Helpdesk for passwords and codes*

They pretend to be IT and HelpDesk over phone calls and text messages to ask for passwords and MFA codes, or to harvest credentials via a link

2. *Remote Access Tools as Helpdesk*

They convince teammates to run business remote access tools while pretending to be IT/HelpDesk

3. *MFA Fatigue*

They will send many repeated MFA prompt notifications until the employee presses Accept

4. *SIM Swap*

They call the telco pretending to be your employee to take over their phone number and intercept codes for two-factor authentication
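The MFA fatigue pattern from item 3 can be caught by alerting on bursts of push prompts. Here is a minimal sketch, assuming you can export prompt events from your identity provider as (user, timestamp) pairs. The function name, the export format, and the threshold of 5 prompts in 10 minutes are illustrative assumptions, not values from this thread.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def find_mfa_fatigue(events, max_prompts=5, window=timedelta(minutes=10)):
    """Return the set of users who received more than `max_prompts`
    MFA prompts within any sliding `window`.

    `events` is an iterable of (user, datetime) pairs (hypothetical format).
    """
    recent = defaultdict(deque)  # user -> timestamps inside the current window
    flagged = set()
    for user, ts in sorted(events, key=lambda e: e[1]):
        q = recent[user]
        q.append(ts)
        # Drop prompts that have fallen out of the sliding window.
        while ts - q[0] > window:
            q.popleft()
        if len(q) > max_prompts:
            flagged.add(user)
    return flagged


if __name__ == "__main__":
    start = datetime(2025, 6, 20, 9, 0)
    # Eight prompts in under four minutes for one user, a single prompt for another.
    events = [("alice", start + timedelta(seconds=30 * i)) for i in range(8)]
    events.append(("bob", start))
    print(find_mfa_fatigue(events))
```

Tune the threshold to your own baseline; a user who is flagged is a good candidate for a quick out-of-band check.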

Let's talk about the types of websites they register and how to train your team about them and block access to them.

Scattered Spider usually attempts to impersonate your HelpDesk or IT so they're going to use a believable looking website to trick folks.

Often they register domains like this:

- victimcompanyname-sso[.]com

- victimcompanyname-servicedesk[.]com

- victimcompanyname-okta[.]com

Train your team to spot those specific attacker controlled look-alike domains and block them on your network.
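The domain patterns listed above can be turned into a simple check for proxy or DNS logs. This is a minimal sketch, not a complete detection rule: `examplecorp` is a placeholder company name, the suffix list only contains the three examples from this thread, and the function name is made up for illustration.

```python
import re

# Suffixes taken from the examples in the thread; extend as needed.
SUSPICIOUS_SUFFIXES = ("sso", "servicedesk", "okta")


def is_lookalike(domain, company):
    """Return True if `domain` looks like '<company>-<suffix>.<tld>'."""
    # Undo the defanged '[.]' notation and normalize case.
    domain = domain.replace("[.]", ".").lower().strip(".")
    suffixes = "|".join(SUSPICIOUS_SUFFIXES)
    pattern = rf"^{re.escape(company.lower())}-(?:{suffixes})\.[a-z0-9.-]+$"
    return re.match(pattern, domain) is not None


if __name__ == "__main__":
    for candidate in ("examplecorp-sso[.]com", "examplecorp.com"):
        print(candidate, is_lookalike(candidate, "examplecorp"))
```

If your organization legitimately owns a domain that matches the pattern, keep an allowlist next to the check so real services are not blocked.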

What mitigation steps can you take to help your team spot and shut down these hacking attempts? Especially if you work in Retail or Insurance and are heavily targeted right now, focus on:

Human protocols:

- Start a Be Politely Paranoid protocol: have your team verify identity through a second method of communication before taking action. For example, if someone gets a call from IT/HelpDesk asking them to download a remote access tool, they should verify it through another channel (chat, email, or a call back to a trusted number, which thwarts caller ID spoofing) before doing anything. More than likely it's an attacker.

- Educate on the exact types of attacks that are popular in the wild right now (the thread above covers them).

Technical tool implementation:

- Set up application controls to prevent installation and execution of unauthorized remote access tools. If the remote access tools don't work during the attack, it's going to make the criminal's job harder and they may move on to another target.

- Set up MFA that is harder to phish such as FIDO solutions (YubiKey, etc). Educate that your IT / HelpDesk will not ask for passwords or MFA codes in the meantime.

- Set up a password manager and require long, random, and unique passwords for each account, generated and stored in the password manager with MFA on.

- Require MFA on all accounts, both work and personal. Move folks with admin access to a FIDO MFA solution first, then move the rest of the team over to FIDO MFA.

- Keep devices and browsers up to date.
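To illustrate what "long, random, and unique" means in practice, here is a minimal sketch using Python's standard `secrets` module. In day-to-day use your password manager does this for you. The default length of 24 and the minimum of 16 characters are assumptions chosen for the example, not recommendations from this thread.

```python
import secrets
import string

# Letters, digits, and punctuation; adjust if a site restricts characters.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length=24):
    """Return a random password drawn from a cryptographically secure source."""
    if length < 16:
        raise ValueError("use at least 16 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    # A fresh call per account keeps every password unique.
    print(generate_password())
```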

“Over four months, LLM users consistently underperformed at neural, linguistic, and behavioral levels.”

https://www.media.mit.edu/publications/your-brain-on-chatgpt/

Trump’s attempt to chill dissent with militarized escalation isn’t working:

“What it’s done is drive so many new people to the No Kings website looking for a way to show up peacefully against this autocratic overreach.” - Ezra

Join us on Saturday for No Kings Day: https://www.nokings.org/?SQF_SOURCE=indivisible

Introduction

At CIRCL (Computer Incident Response Center Luxembourg), we faced the challenge of evaluating vulnerabilities with only partial information, often just a textual description.

To address this, we built an NLP model using the existing dataset from Vulnerability Lookup. The entire solution has now been released, including integration into the free online service and the open-source code. With this model, you can obtain the VLAI vulnerability score even when no existing score is available, by assessing severity based solely on the description.

Below, you'll find the complete process we developed for the VLAI Severity models, which can be applied to many other use cases.

Datasets

Among the datasets we provide, a key one is dedicated to vulnerability scoring and features CPE data, CVSS scores, and detailed descriptions.

This dataset is updated daily.

Sources of the data:

- CVE Program (enriched with data from vulnrichment and Fraunhofer FKIE)

- GitHub Security Advisories

- PySec advisories

- CSAF Red Hat

- CSAF Cisco

The licenses for each security advisory feed are listed here: https://vulnerability.circl.lu/about#sources

Get started with the dataset

```python
import json

from datasets import load_dataset

dataset = load_dataset("CIRCL/vulnerability-scores")

vulnerabilities = ["CVE-2012-2339", "RHSA-2023:5964", "GHSA-7chm-34j8-4f22", "PYSEC-2024-225"]

filtered_entries = dataset.filter(lambda elem: elem["id"] in vulnerabilities)

for entry in filtered_entries["train"]:
    print(json.dumps(entry, indent=4))
```

For each vulnerability, you will find all assigned severity scores and associated CPEs.

Models

How We Build Our VLAI Model

With the various vulnerability feeders of Vulnerability-Lookup (for the CVE Program, NVD, Fraunhofer FKIE, GHSA, PySec, CSAF sources, Japan Vulnerability Database, etc.) we’ve collected over a million JSON records. This allows us to generate datasets for training and building models.

During our explorations, we realized that we can automatically update a BERT-based text classification model daily using a dataset of approximately 600k rows from Vulnerability-Lookup. With powerful GPUs, it’s a matter of hours.

Models are generated on our own GPUs and with our various open source trainers.

Similar to the datasets, model updates are performed on a regular basis.

Text classification

vulnerability-severity-classification-roberta-base

This model is a fine-tuned version of RoBERTa base on the dataset CIRCL/vulnerability-scores.

Training takes approximately 6 hours on two NVIDIA L40S GPUs.

Try it with Python:

```python
from transformers import AutoModelForSequenceClassification, AutoTokenizer
import torch

labels = ["low", "medium", "high", "critical"]

model_name = "CIRCL/vulnerability-severity-classification-roberta-base"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForSequenceClassification.from_pretrained(model_name)
model.eval()

test_description = "langchain_experimental 0.0.14 allows an attacker to bypass the CVE-2023-36258 fix and execute arbitrary code via the PALChain in the python exec method."
inputs = tokenizer(test_description, return_tensors="pt", truncation=True, padding=True)

# Run inference
with torch.no_grad():
    outputs = model(**inputs)
    predictions = torch.nn.functional.softmax(outputs.logits, dim=-1)

# Print results
print("Predictions:", predictions)
predicted_class = torch.argmax(predictions, dim=-1).item()
print("Predicted severity:", labels[predicted_class])
```

Once the model files have been downloaded, the output is:

```
Predictions: tensor([[2.5910e-04, 2.1585e-03, 1.3680e-02, 9.8390e-01]])
Predicted severity: critical
```

The critical class has a score of 98%.
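The prediction tensor above holds one softmax probability per label, in the order low, medium, high, critical. As a small sketch of how to read it, the snippet below pairs the printed values with their labels in plain Python, without loading the model. The numbers are copied from the run above.

```python
labels = ["low", "medium", "high", "critical"]
# Softmax output copied from the run above.
probabilities = [2.5910e-04, 2.1585e-03, 1.3680e-02, 9.8390e-01]

# Show each class probability as a percentage.
for label, p in zip(labels, probabilities):
    print(f"{label:>8}: {p:.2%}")

# The predicted class is the index with the highest probability (argmax).
best = max(range(len(labels)), key=lambda i: probabilities[i])
print("Predicted severity:", labels[best])
```

Looking at the full distribution, not only the top class, helps when two classes are close and the prediction deserves a manual review.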

Putting Our Models to Work in Vulnerability-Lookup

Models are loaded locally in the ML-Gateway to ensure minimal latency. All processing is done locally; no data is sent to Hugging Face servers. We use the Hugging Face platform to share our datasets and models, as part of our commitment to open collaboration.

ML-Gateway implements a FastAPI-based local server designed to load one or more pre-trained NLP models during startup and expose them through a clean, RESTful API for inference.

Clients interact with the server via dedicated HTTP endpoints corresponding to each loaded model. Additionally, the server automatically generates comprehensive OpenAPI documentation that details the available endpoints, their expected input formats, and sample responses, making it easy to explore and integrate the services.
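A client call to one of these endpoints could look roughly like the sketch below, which uses only the Python standard library. The base URL, the endpoint path `/classify/severity`, and the `description` field name are assumptions made for illustration; take the real values from the OpenAPI documentation generated by your ML-Gateway instance.

```python
import json
import urllib.request

# Hypothetical values: the real host, port, and endpoint path come from
# your ML-Gateway deployment and its generated OpenAPI documentation.
BASE_URL = "http://localhost:8000"
ENDPOINT = "/classify/severity"


def build_request(description):
    """Build a JSON POST request for a severity-classification endpoint."""
    # The "description" field name is an assumption, not the documented schema.
    payload = json.dumps({"description": description}).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + ENDPOINT,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def classify(description, timeout=10):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(description), timeout=timeout) as resp:
        return json.load(resp)
```

Calling `classify("...")` requires a running server. FastAPI applications serve interactive documentation at `/docs` by default, which is a convenient place to confirm the actual paths and payload shape.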

The ultimate goal is to enrich vulnerability data descriptions through the application of a suite of NLP models, providing direct benefits to Vulnerability-Lookup and supporting other related projects.

Discuss this on our forum.

How Many #Qubits Will It Take to Break Secure Public Key #Cryptography Algorithms?

How Many Qubits Will It Take to Break Secure Public Key Cryptography Algorithms? - Slashdot

Wednesday Google security researchers published a preprint demonstrating that 2048-bit RSA encryption "could theoretically be broken by a quantum computer with 1 million noisy qubits running for one week," writes Google's security blog. "This is a 20-fold decrease in the number of qubits from our ...

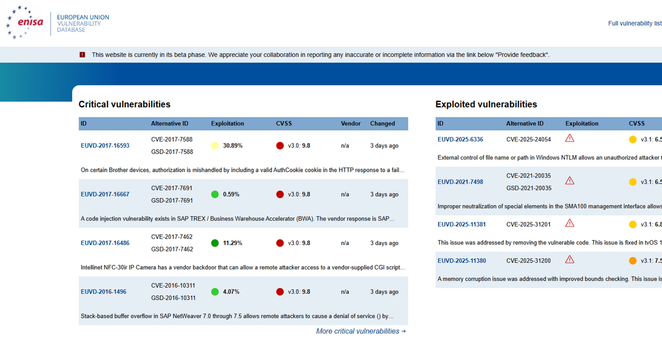

The European Vulnerability Database (EUVD) is now fully operational, offering a streamlined platform to monitor critical and actively exploited security flaws while the US struggles with budget cuts, delayed disclosures, and confusion around the future of its own tracking systems https://euvd.enisa.europa.eu/