@vildis +9001%

Why they don't go with tar or pax or plain bzip2 / xz is beyond me.

- But I guess they are too lazy to use #BitTorrent and/or #IPFS and merely want to prop up #Filehosting providers...

@gsuberland @jay @vildis now I'm wondering if this layering of junk might accidentally be helping in one way:

if there are a dozen unrelated torrent uploads of the same set of split rars, and none of them is fully seeded, you may be able to mix and match the rar files from all the partial torrent downloads?
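Whether mixing works depends on the parts being byte-identical across uploads, which is plausible when everyone re-shares the same split set. A minimal sketch, assuming each partial download is modeled as a dict of part name to bytes and that a trusted CRC32 per part is known (for example from the release's SFV); `pick_parts` and its arguments are hypothetical names:

```python
import zlib


def pick_parts(expected: dict[str, int],
               sources: list[dict[str, bytes]]) -> tuple[dict[str, bytes], list[str]]:
    """Assemble a part set from several partial downloads.

    expected: part name -> CRC32 the intact part should have (hypothetical input).
    sources:  one dict per partial download, part name -> bytes received so far.
    Returns the verified parts and the names no source could supply intact.
    """
    chosen: dict[str, bytes] = {}
    missing: list[str] = []
    for name, crc in expected.items():
        # Try each partial download in turn; keep the first copy that verifies.
        for source in sources:
            data = source.get(name)
            if data is not None and zlib.crc32(data) == crc:
                chosen[name] = data
                break
        else:
            # No source had an intact copy of this part.
            missing.append(name)
    return chosen, missing
```

Only the parts reported as missing would still need a seeder somewhere.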

Caution, tangent

One thing I personally benefitted from a lot when it comes to rars, back in the floppy disk era, was the ability to create rars with a customizable 5/10/20% of redundant data, so an archive could self-heal a few bad sectors.

It's a feature I wish more backup/archive tools would have today still.

But that's not at all helpful for any kind of internet downloads nowadays (and hasn't been for a long time).
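The redundancy idea can be illustrated with simple XOR parity. This is a deliberately simpler, swapped-in technique, not RAR's actual recovery-record algorithm (which uses a more capable error-correcting code): one parity block per group of equal-sized data blocks lets you rebuild any single lost block in that group. The function names are hypothetical:

```python
from functools import reduce
from typing import Optional


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR a list of equal-length byte blocks together."""
    return reduce(lambda a, b: bytes(x ^ y for x, y in zip(a, b)), blocks)


def make_parity(blocks: list[bytes]) -> bytes:
    """Parity block for one group: the XOR of all its data blocks."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("all blocks in a group must have the same length")
    return xor_blocks(blocks)


def rebuild(blocks: list[Optional[bytes]], parity: bytes) -> list[bytes]:
    """Recover at most one lost block (marked None) from the survivors plus parity."""
    lost = [i for i, b in enumerate(blocks) if b is None]
    if len(lost) > 1:
        raise ValueError("XOR parity can only rebuild one lost block per group")
    repaired = list(blocks)
    if lost:
        survivors = [b for b in blocks if b is not None]
        # XOR of all surviving blocks and the parity equals the missing block.
        repaired[lost[0]] = xor_blocks(survivors + [parity])
    return repaired
```

With one parity block per five data blocks the overhead is 20%, but two bad blocks in the same group are unrecoverable; stronger codes such as Reed-Solomon lift that limit.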

@jay @kkarhan @vildis not Usenet, but private FTP servers running a stack like glftpd and pzs-ng, which check files against SFV checksums on upload
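An SFV file lists one `filename CRC32` pair per line, with `;` starting a comment line. A minimal sketch of that kind of upload-time check, using Python's `zlib.crc32`; the function names and the `ok`/`bad`/`missing` labels are hypothetical, not how glftpd or pzs-ng actually report results:

```python
import tempfile
import zlib
from pathlib import Path


def parse_sfv(text: str) -> dict[str, int]:
    """Parse lines of the form '<filename> <CRC32 as hex>'; ';' starts a comment."""
    expected = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(";"):
            continue
        # Split on the last space so file names containing spaces still work.
        name, _, crc_hex = line.rpartition(" ")
        expected[name.strip()] = int(crc_hex, 16)
    return expected


def crc32_of(path: Path, chunk_size: int = 1 << 20) -> int:
    """CRC32 of a file, computed in chunks so large parts need not fit in memory."""
    crc = 0
    with path.open("rb") as f:
        while chunk := f.read(chunk_size):
            crc = zlib.crc32(chunk, crc)
    return crc


def check_release(directory: Path, sfv_name: str) -> dict[str, str]:
    """Return 'ok', 'bad' or 'missing' for every file listed in the SFV."""
    expected = parse_sfv((directory / sfv_name).read_text())
    status = {}
    for name, crc in expected.items():
        path = directory / name
        if not path.is_file():
            status[name] = "missing"
        else:
            status[name] = "ok" if crc32_of(path) == crc else "bad"
    return status


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        d = Path(tmp)
        (d / "rel.r00").write_bytes(b"first part")
        (d / "rel.r01").write_bytes(b"second part")
        lines = [f"{p.name} {crc32_of(p):08X}" for p in sorted(d.glob("rel.r*"))]
        (d / "rel.sfv").write_text("; example\n" + "\n".join(lines) + "\nrel.r02 DEADBEEF\n")
        (d / "rel.r01").write_bytes(b"second parT")  # simulate a corrupted upload
        print(check_release(d, "rel.sfv"))
        # {'rel.r00': 'ok', 'rel.r01': 'bad', 'rel.r02': 'missing'}
```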

i’d still agree it’s “legacy stuff” though. these standards were originally set in the late 1990s, when ISO releases started becoming a thing. before then, everything was in zips, a holdover from the BBS days, when BBS software would run `pkunzip -t` on every upload.
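Python's `zipfile` offers a rough analogue of `pkunzip -t`: `ZipFile.testzip()` reads every member and returns the name of the first one that fails its CRC check, or `None` if all pass. A sketch; `first_bad_member` is a hypothetical wrapper, and note that an archive whose structure is broken raises `zipfile.BadZipFile` when opened rather than returning a name:

```python
import io
import zipfile
from typing import BinaryIO, Optional, Union


def first_bad_member(archive: Union[str, BinaryIO]) -> Optional[str]:
    """Read every member and verify its CRC-32, roughly like `pkunzip -t`.

    Returns the name of the first member that fails, or None if all pass.
    """
    with zipfile.ZipFile(archive) as zf:
        return zf.testzip()


if __name__ == "__main__":
    payload = b"hello world " * 100
    buf = io.BytesIO()
    # Store without compression so the payload bytes appear verbatim in the archive.
    with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_STORED) as zf:
        zf.writestr("data.bin", payload)
    raw = bytearray(buf.getvalue())
    print(first_bad_member(io.BytesIO(bytes(raw))))  # None
    raw[raw.find(payload)] ^= 0xFF  # flip one byte of the stored data
    print(first_bad_member(io.BytesIO(bytes(raw))))  # data.bin
```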

@jay @kkarhan @vildis It's not so much "legacy" as it is that Usenet continues to be where a lot of pirated stuff originates.

Segmenting a file lets you check individual parts of a large download for errors while other parts are still downloading, and then redownload any corrupt segments. BitTorrent does this inherently, but other download methods don't.
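Per-segment checking can be sketched the way BitTorrent v1 does it: hash fixed-size pieces of the original (v1 uses SHA-1 for this), then compare a received copy piece by piece to see exactly which ranges need fetching again. The piece size and function names here are assumptions for illustration:

```python
import hashlib


def piece_hashes(data: bytes, piece_size: int) -> list[bytes]:
    """SHA-1 digest of each fixed-size piece; the last piece may be shorter."""
    return [hashlib.sha1(data[i:i + piece_size]).digest()
            for i in range(0, len(data), piece_size)]


def bad_pieces(received: bytes, expected: list[bytes], piece_size: int) -> list[int]:
    """Indices of pieces that are corrupt or not yet received, i.e. the ones to refetch."""
    actual = piece_hashes(received, piece_size)
    return [i for i, digest in enumerate(expected)
            if i >= len(actual) or actual[i] != digest]
```

For a 2,560-byte file with 1,024-byte pieces, a flipped byte at offset 1,500 flags only piece 1, so only that piece is downloaded again.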

Assuming the people making the releases know what they're doing, they'd turn off compression entirely and just segment.

@StarkRG @jay @vildis personally I'd still rather compress stuff for speed reasons, as bandwidth is the limiting factor, not computational power or storage.

https://infosec.space/@kkarhan/114789676621551304

I don't host, seed, download or distribute anything copyrighted, but much like @vxunderground and @VXShare do with their samples for #Antivirus reasons, I've seen enough groups also apply some basic #encryption to avoid #ContentID-style matching and thus automated #DMCA takedowns...

@adorfer@chaos.social @jay@social.zerojay.com @vildis@infosec.exchange *I KNOW THAT!* I literally did that myself 30+ years ago, when I had a handed-down #Windows95 *T H I C C "pizzabox"* that had only a CD-ROM drive and no CD burner. Even if I'd had CD-RWs back in 1999, they would've been more expensive than a 10-pack of 3.5" 1440 kB floppy disks, and using them would've been wasteful for stuff in the 2 to 10 MB range.

Granted, #RAR is outdated, and even if we assume we still have to split files to *systematically abuse free tiers of file hosters* (which I disapprove of, because it's not only a bit antisocial but also susceptible to #LinkRot), there are now better options like #7zip that are even easier to use than `tar` & `bzip2` & `xz`…

Video is already compressed. Applying lossless compression to data that has already been lossily compressed won't get you a smaller file. It's also why transmitting compressed video over a compressed connection seems slower than transmitting uncompressed data: the actual data rate remains the same, but the link's compression gives you no benefit.
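A quick way to check the claim, assuming random bytes are a fair stand-in for already-compressed video (both look statistically random to a general-purpose compressor) and using zlib's DEFLATE as the lossless compressor; `compression_ratio` is a hypothetical helper:

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 9) -> float:
    """Compressed size divided by original size (below 1.0 means it shrank)."""
    return len(zlib.compress(data, level)) / len(data)


# Random bytes stand in for already-compressed video.
random_like = os.urandom(1_000_000)
# Repetitive text is what general-purpose compressors are good at.
text_like = b"The quick brown fox jumps over the lazy dog. " * 20_000

print(f"random-like: {compression_ratio(random_like):.4f}")  # slightly above 1.0
print(f"text-like:   {compression_ratio(text_like):.4f}")    # far below 1.0
```

The random-like input comes out marginally larger, because DEFLATE falls back to storing it and still adds its own framing.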

I agree that segmentation is unnecessary for BitTorrent, but many torrents are just straight copies of Usenet posts.

@VXShare @StarkRG @jay @vildis @vxunderground OFC, even if their corporate firewall doesn't blocklist your domain, most #MITM-based "#NetworkSecurity" solutions and "#EndpointProtection" products will checksum files and instantly yeet them into the shadow realm.
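Checksum-based blocking of this kind amounts to looking up a file's digest in a list of known-bad digests. A minimal sketch, assuming SHA-256 and a plain in-memory set; real products use their own formats and additional detection, and `is_blocklisted` is a hypothetical name. It also shows why the encrypted copies mentioned earlier in the thread are not caught by pure hash matching: changing even one byte produces a different digest.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def is_blocklisted(data: bytes, blocklist: set[str]) -> bool:
    """True if the SHA-256 digest of data appears in the blocklist."""
    return sha256_hex(data) in blocklist


sample = b"pretend this is a known sample"
blocklist = {sha256_hex(sample)}

print(is_blocklisted(sample, blocklist))            # True
print(is_blocklisted(sample + b"\x00", blocklist))  # False: one extra byte, new digest
```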

And let's be honest: like with chemistry and medicine, one wants a supplier that isn't shady af but actually transparent.

@vildis Parts were for slow, unreliable connections back in the day: you downloaded them one by one, and if one failed you only had to redo that piece, not the whole huge file. Once you had them all, combining them ran an integrity check you wouldn't otherwise have had, and it told you which part was corrupted so you could just fetch that one again.

It wasn't for compression purposes, it was for delivery purposes. No one compresses them these days.
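The split-then-rejoin workflow described above can be sketched in a few lines. This assumes numbered `.001`, `.002`, … suffixes (a common convention, similar in spirit to Unix `split`, but not RAR's own volume format); `split_file` and `join_parts` are hypothetical names, and integrity checking is left to something like per-part CRCs:

```python
import tempfile
from pathlib import Path


def split_file(src: Path, part_size: int) -> list[Path]:
    """Write src as numbered parts (src.001, src.002, ...) of at most part_size bytes."""
    parts = []
    with src.open("rb") as f:
        index = 1
        while chunk := f.read(part_size):
            part = src.with_name(f"{src.name}.{index:03d}")
            part.write_bytes(chunk)
            parts.append(part)
            index += 1
    return parts


def join_parts(parts: list[Path], dest: Path) -> None:
    """Concatenate parts in order, like `cat file.* > file` on Unix."""
    with dest.open("wb") as out:
        for part in parts:
            out.write(part.read_bytes())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        original = Path(tmp, "release.bin")
        original.write_bytes(bytes(range(256)) * 40)  # 10,240 bytes of sample data
        parts = split_file(original, 4096)            # 4096 + 4096 + 2048 bytes
        rebuilt = Path(tmp, "rebuilt.bin")
        join_parts(parts, rebuilt)
        print(len(parts), rebuilt.read_bytes() == original.read_bytes())  # 3 True
```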

@rejzor @vildis yessir. Windows didn't have `/usr/bin/split` back in the day, so we made it work with what we had, including standards to optimize for outcomes.

haha. syndicacy, baby! #thescene #warez950 #efnet4eva ✌️💙

@rejzor

Honorable mention:

7PLUS by DG1BBQ (around 1993)

A file converter/encoder for error correction over store-and-forward networks, with automatic generation of resend requests for partial files/diffs.

https://github.com/hb9xar/7plus

But it's 100% spot on and totally accurate 🙂👍

@vildis real use case for splitting: transferring large files from A to B either over a very crappy network link or via physical media much smaller than the original file. Think diskettes, and sub-1 Mbit/s links over sub-par phone lines.
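Some rough arithmetic shows why splitting mattered. A sketch, assuming a nominal 1440 KiB (1,474,560-byte) 3.5" floppy (ignoring the slightly smaller usable space after formatting) and a 56 kbit/s modem as the example of a slow link; the function names are hypothetical:

```python
import math

# Nominal 3.5" HD floppy: 1440 KiB. Usable space after formatting is a bit lower.
FLOPPY_BYTES = 1440 * 1024


def disks_needed(file_bytes: int, disk_bytes: int = FLOPPY_BYTES) -> int:
    """Number of disks needed if each part fills one disk."""
    return math.ceil(file_bytes / disk_bytes)


def transfer_seconds(file_bytes: int, bits_per_second: int) -> float:
    """Ideal transfer time, ignoring protocol overhead and retries."""
    return file_bytes * 8 / bits_per_second


print(disks_needed(10_000_000))                           # 7
print(round(transfer_seconds(10_000_000, 56_000) / 60))  # 24 (minutes at 56 kbit/s)
```

At roughly 24 minutes per attempt, a dropped connection near the end of an unsplit file meant starting over, while a split set only lost the part in progress.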

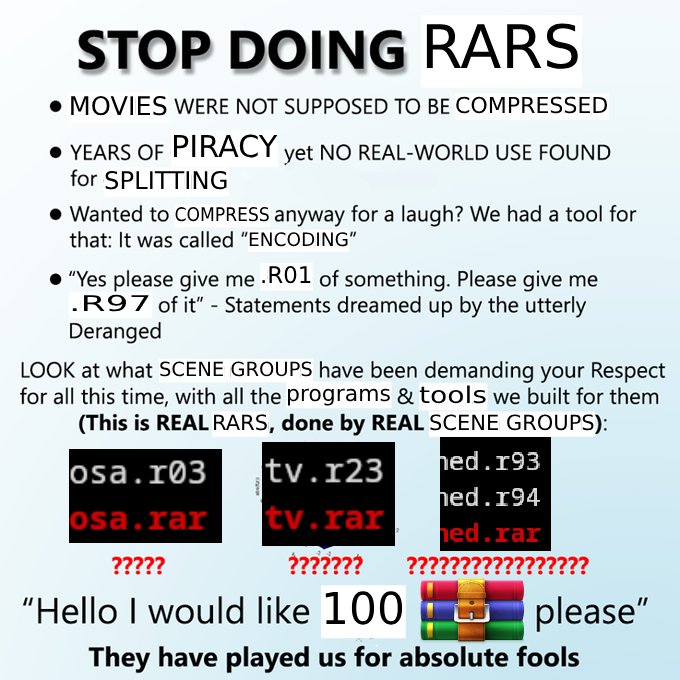

The problem with pirates is that they have a way of doing things without understanding why, or where that way came from.

TL;DR: Pirates are ignorant at best and would do well to learn their own history.

@vildis ... compression? rars? ...

do you mean like a house cat?

here, I have your compressed rars.

@vildis@infosec A couple of years back I got a work-related data dump from a colleague who had sent a multi-part rar across multiple emails.

Once combined, there was a single zip inside, which contained a single data file.

Progress.