Adventures in #selfhosting!

I just finished failing to do a "correct" and "proper" upgrade of cloud-native postgres, #cnpg, from using standard #longhorn volume backups to the barman cloud plugin.

I got the plugin loaded according to the migration docs, but couldn't get it to write to #s3, and the pods never became ready. I worked at it for hours, and I saw lots of other people online recently reporting the same issues and log messages that I was seeing.

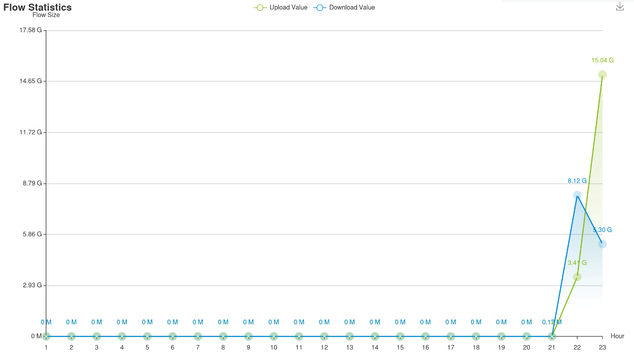

The reason I did this in the first place was that I noticed that I had some duplicate backup jobs causing issues with #fluxcd reconciliation.

In the end, I gave up, went back to the original longhorn backups, which work and which I've already used for disaster recovery (don't ask), and deleted the duplicates.

Currently I'm waiting for the previous primary/write node to fully restart and clear out the barman sidecar. Then I'll turn flux back on and hopefully things will be good.