Как обучают ИИ: без формул, но с котами

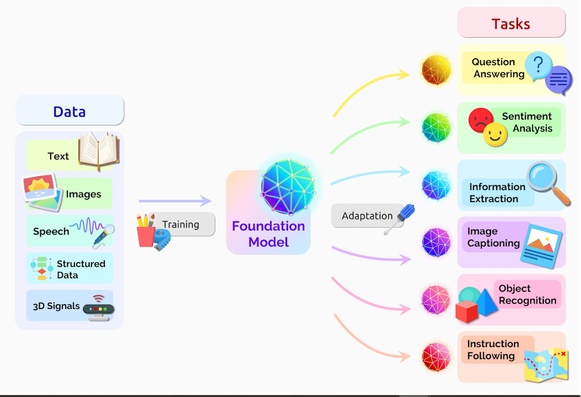

В этой статье — без воды, трюизмов, академизмов и формул — разберёмся, в чём принципиальное отличие машинного обучения (ML) от до-ИИ программирования, а затем генеративного ИИ от классических моделей машинного обучения (ML). Поговорим о типах генеративных моделей, их архитектуре и областях применения. Заодно затронем важный вопрос: где проходит граница между классическим программированием и вероятностным творчеством, на котором построены современные нейросети. Статья ориентирована прежде всего на тех, кто делает первые шаги в ИИ, но если ты начинающий ML-инженер, архитектор ИИ-приложений, основатель стартапа или просто хочешь разобраться, что на самом деле происходит под капотом у ChatGPT и Midjourney — ты, скорее всего, найдёшь здесь для себя что-то полезное.

https://habr.com/ru/articles/919296/

#машинное+обучение #искусственный_интеллект #generative_models #generative_art #ml #научпоп #обучение_нейронных_сетей #генеративные_модели #парадигмы #selfsupervised