ICYMI 👀

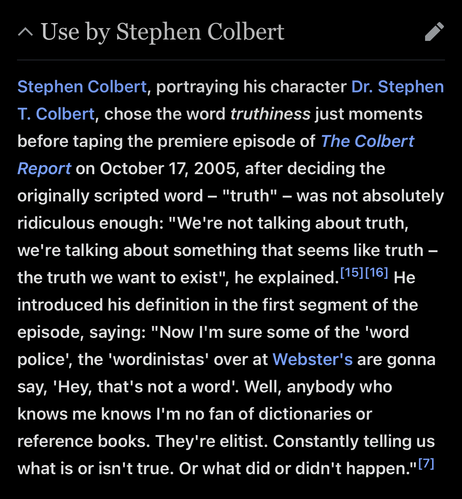

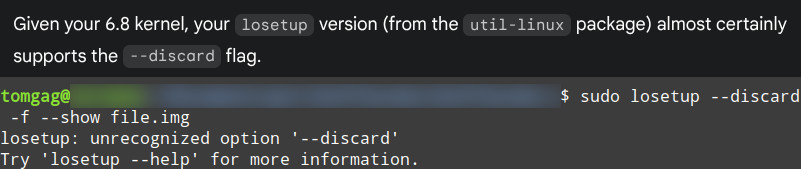

If you run a lot of #LLM workflows and are annoyed by #AI #hallucination, prompting your model with a few extra instructions for handling uncertainty might just clear many of those errors out of your workflow.

Give it a read! 👇

https://timthepost.com/posts/avoiding-model-hallucinations-through-structured-uncertainty-handling/