| Link | URL |
|---|---|
| Web | https://technotecture.com |
| Code | https://github.com/seichter |
| Pixelfed | https://pixelfed.social/@technotecture |

Hartmut Seichter


In Brussels you can now get fiber at 10 Gbps symmetric(!) for €20 per month.

This makes the Romanian provider DIGI the third fiber provider in Belgium, alongside Orange (France) and Proximus (formerly Belgacom). Compared with the competition, DIGI offers rock-bottom prices and is also the only one of the three providers to deliver symmetric bandwidth.

Here in Germany, we weep quietly.

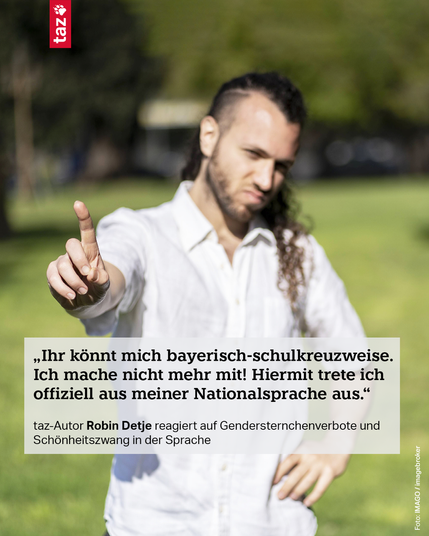

On the #GenderVerbot (ban on gender-inclusive language), four sentences from #Spiegel:

»... Even intensive research turns up no #Gendergebot (requirement to use gender-inclusive language). In Claudia #Roth's time, gender-inclusive language was used at some points and then not again at others. When asked, #WolframWeimer's office ultimately confirms it as well:

#Gendern (gender-inclusive language) was never mandatory.

The only one making #Sprachvorschriften (language rules) is Wolfram #Weimer.«

Vibe Code Is Legacy Code, by @stevekrouse.com (@val.town):

A real man doesn't whine when he gets criticized.

A real man fires his critics, imposes 150 percent tariffs, and lets CEOs give him something golden 💪

A contact just told me that my old "LLMs generate nonsense code" blog post from two years ago is now badly outdated with GPT5, because it's so awesome and so helpful. So I asked him to test it for me with my favorite test question, based on a use case I recently had myself:

Without adding third-party dependencies, how can I compress a Data stream with zstd in Swift on an iPhone?

And here is ChatGPT 5's answer: https://chatgpt.com/share/68968506-1834-8004-8390-d27f4a00f480

Very confident, very bold, even claims "Works on iOS 16+".

The problem with that: just like every other LLM I've tested that gave similar responses, it is (excuse my language, but I need to use it) absolute horseshit. No version of any Apple SDK ever supported or supports ZSTD (see https://developer.apple.com/documentation/compression/compression_algorithm for a real piece of knowledge). It was never there. Not even in private code. Not even as a mention of "things we might do in the future" at some developer event. It fundamentally does not exist. It's completely made-up nonsense.

This concludes all the testing of GPT5 I need to do. If a tool can actively mislead me this easily, potentially causing me to waste significant amounts of time trying to make something work that is guaranteed never to work, it's a useless tool. I don't like collaborating with chronic liars who can't openly point out their knowledge gaps, so I'm also not interested in burning resources on an LLM that does the same.