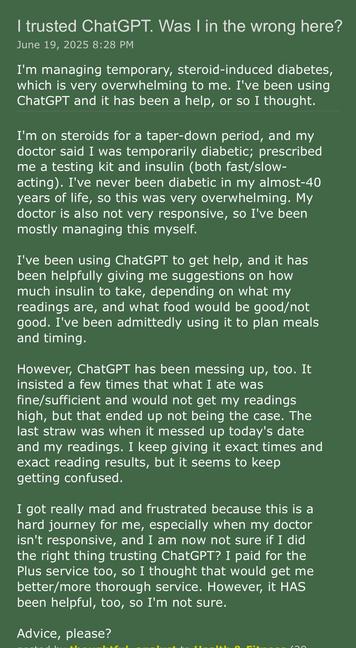

@hannah @fuzzychef WebMD, Goop et al also publish their misinformation on web pages, where everyone can see it. This at least creates the possibility that their misinformation can be spotted by third parties, and pressure applied to correct it.

Other than OpenAI, nobody sees what ChatGPT is whispering into the ears of vulnerable people

it runs you in circles that don't lead anywhere (good or bad) and are ultimately worthless without proper tests to rule out half of that stuff

Good data in, garbage out!

https://discord.com/invite/diabetes

It's an open and welcoming community, and is LGBTQ+ friendly.

Being very cautious -- is it okay to publish this link on the public website in question? I know here is kind of public, but Ask Metafilter gets indexed pretty heavily.

I'm not a Discord user and don't know if that's what "open" means.

Final stupidity, I went and posted it and then worried and had it taken down. But I can post it again! Or just put in a private message to the original poster.

@clew It's a completely public community. Feel free to post the link anywhere!

So long as the rules are followed (and they ARE enforced pretty aggressively), anyone is welcome with the understanding that this is a support community. We do gatekeep it to a degree, with a healthy chunk of the server hidden behind a manually assigned role.

@hannah lmao at the comment that was "You need Baldur Bjarnason at least as much as you need insulin."

(cc @baldur)

As someone who has been battling Type 2 Diabetes with two doctors, two endocrinologists, and has 24 hour monitoring of blood sugar...JESUS FREAKING CHRIST NO. NO NO NO NO. GO TO YOUR DOCTOR AND ASK FOR A CORRECTIONS SHEET. OH SWEET CRISPY WALNUTS DON'T USE CHATGPT FOR SOMETHING LIKE THIS.

It may be pointed out that some people are already liable to go down a rabbit hole and ChatGPT is just what they used, but we should also consider how these kinds of systems enable that, like with how they are natural at "yes, and"-ing.

This is also happening because of wider societal alienation and holes in support from lack of sufficient medical care and other kinds of expertise because of capitalism reasons. It's not great.

He might as well have rolled some dice to decide what to do

@hannah this is just the latest round of what happens when the medical system fails to meet people's actual needs (esp. when those needs are social/interpersonal and not merely related to access to specific molecules)

similar has happened before with women's issues, chronic illness, etc. getting consumed by quackery, MLMs, antivax, etc.

@hannah jfc it doesn't get "confused"

Please please please stop treating it like a mind

@hannah I'm rapidly approaching the point of "if you do stupid shit with a gun and die as a result, the gun didn't kill you" when it comes to LLM misuse.

To try and counter that impulse, I'm working on a handout/zine on why LLMs aren't "truth engines"

@hannah The current narrative coming from a lot of supporters (including the company I work for!) is “you should use it like a search engine, but don’t trust it and be sure to verify everything it says.”

…then I’ll just use a search engine to start with. Why even bother?

There won't ever be a count of how many people LLMs have endangered or killed.

https://m.ai6yr.org/@ai6yr/114716031622310118

AI6YR Ben (@ai6yr@m.ai6yr.org)

The Trek: They Trusted ChatGPT To Plan Their Hike — And Ended Up Calling for Rescue "Two hikers were rescued this spring from the fittingly named Unnecessary Mountain near Vancouver, Canada after using ChatGPT and Google Maps to plan their route and finding themselves trapped by unexpected snow partway up..." h/t @researchbuzz@researchbuzz.masto.host https://thetrek.co/they-trusted-chatgpt-to-plan-their-hike-and-ended-up-calling-for-rescue/ #hiking #SearchAndRescue #AI #LLMs #SAR

Advice #1: get a new doctor.

Holy Shit !

Don't ever trust #ChatGPT to suggest the right #medication when it comes to #serious and possibly #dangerous #medicine. Change the fucking #Doctor if he is not #responsive, and tell him this beforehand. It is YOUR #Health and not the Doctor's.

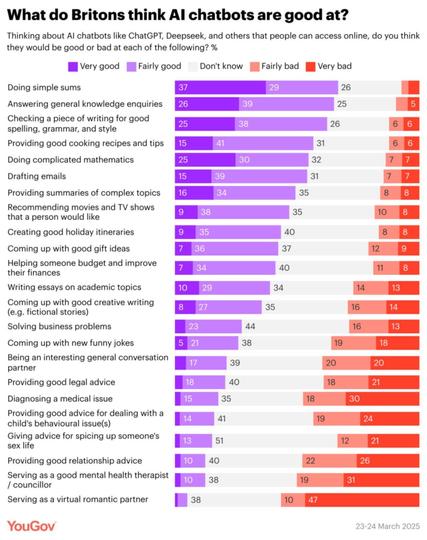

Yeah, but a lot of British newspapers print horoscopes. As a nation, it seems we're easily led. 😟

To be fair, the general public has a very very bad track record of who to trust on lots of these questions. People listen to celebrities, confident shysters, etc.

@hannah Me tapping the sign again