A system (of sentences, of equations, of graphs) is said to be "complete" (or non-singular) if it has one and only one solution.

A system is deemed "singular" if it does not have one and only one solution. For linear equations, that means it has either no solution or infinitely many.

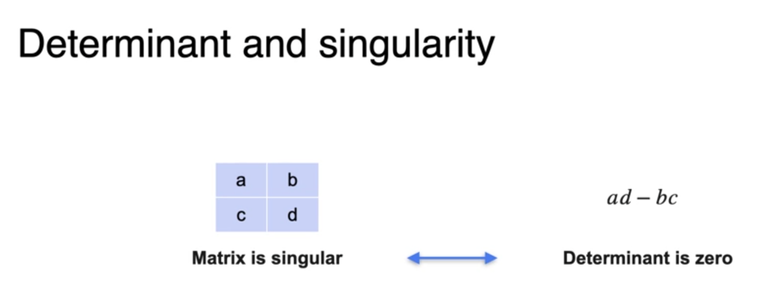

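A convenient way to show that a system of two linear equations in two unknowns is singular is to:

* define the "determinant" of its 2×2 coefficient matrix as the product of the leading diagonal minus the product of the antidiagonal (ad − bc for rows a, b and c, d) and

* calculate that it is zero.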
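The two steps above can be sketched in a few lines of Python. This is only an illustration for the 2×2 case: the names `det2` and `is_singular`, the tolerance `tol`, and the two example systems are assumptions made here, not part of the original post.

```python
def det2(a, b, c, d):
    """Determinant of the 2x2 matrix [[a, b], [c, d]]:
    product of the leading diagonal minus product of the antidiagonal."""
    return a * d - b * c


def is_singular(a, b, c, d, tol=1e-12):
    """Treat the system as singular when the determinant is (numerically) zero.
    `tol` is an illustrative tolerance for floating-point coefficients."""
    return abs(det2(a, b, c, d)) <= tol


# Hypothetical system 1:  x + y = 10,  x + 2y = 12
# Coefficient matrix [[1, 1], [1, 2]], determinant 1*2 - 1*1 = 1
first_is_singular = is_singular(1, 1, 1, 2)

# Hypothetical system 2:  x + y = 10,  2x + 2y = 20
# Coefficient matrix [[1, 1], [2, 2]], determinant 1*2 - 1*2 = 0
second_is_singular = is_singular(1, 1, 2, 2)

print(first_is_singular, second_is_singular)
```

The second system is singular because its second equation is just the first one doubled, so it adds no new information. Note that only the coefficients enter the determinant; the right-hand sides decide whether a singular system has no solution or infinitely many.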

#learning #algebra #systems #matrices #determinants #singularity #data #ML #DataScience #math #maths #mathematics #tutorial