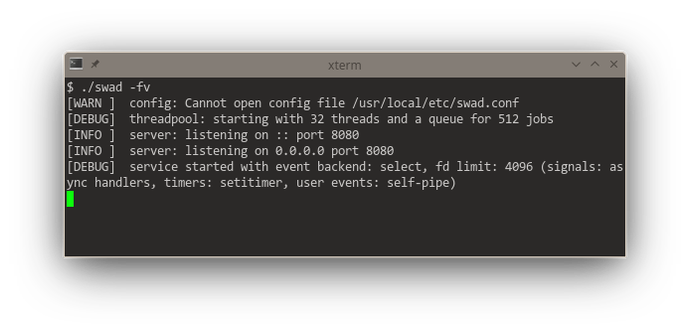

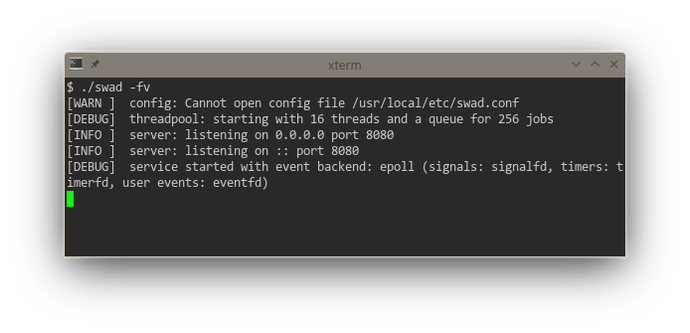

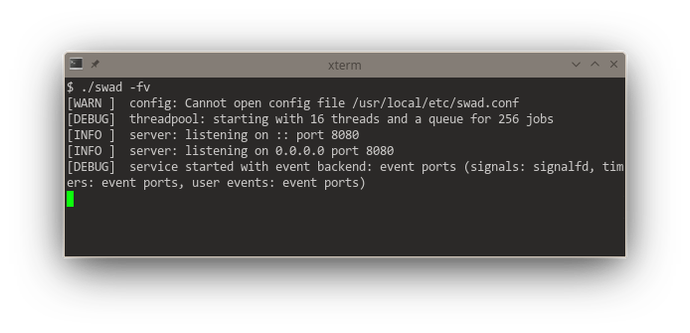

This redesign of #poser (for #swad) to offer a "multi-reactor" (multiple #threads, each running its own event loop) is starting to give me severe headaches.

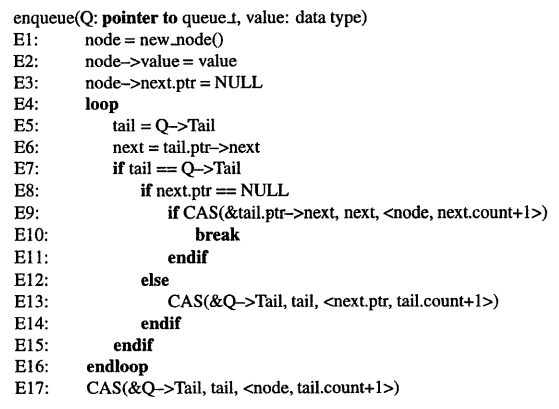

There is *still* a very rare data #race in the #lockfree #queue. I *think* I can spot it in the pseudocode from the paper I used[1], see screenshot. Have a look at lines E7 and E8. Suppose the thread executing this is suspended after E7 for a "very long time". Meanwhile, a dequeue operation on some other thread eventually dequeues whatever "Q->Tail" was pointing to, and then frees it after consumption. Our poor thread resumes, successfully checks the pointer it already read in E6 for NULL (E8), and then tries a CAS on tail->next in E9, which is unfortunately inside an object that doesn't exist any more... If the CAS succeeds because the bytes at this memory location happen to be zero, we have corrupted some random other object that might now reside there. 🤯
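To make that window concrete, here is a minimal C11 sketch of the enqueue/dequeue loops with the paper's step labels E5 to E9 as comments. It is not poser's code; all names (`msq`, `msq_enqueue`, `msq_dequeue`, `msq_destroy`, the retire list) are hypothetical. It sidesteps the reclamation problem with the crudest policy possible, which is an assumption of this sketch: dequeued nodes are only parked on a push-only retire list and freed in `msq_destroy()`, once no thread uses the queue any more. Under that assumption the late CAS in E9 always hits a node that still exists, and because a dequeued node's `next` is never NULL again, that CAS simply fails and the loop retries. Since addresses are never reused while the queue lives, the paper's modification counters are left out.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical types for illustration only. */
typedef struct msq_node {
    _Atomic(struct msq_node *) next;   /* link inside the queue */
    struct msq_node *retired_next;     /* link in the retire list */
    int value;
} msq_node;

typedef struct {
    _Atomic(msq_node *) head;          /* always points at a dummy node */
    _Atomic(msq_node *) tail;
    _Atomic(msq_node *) retired;       /* push-only, freed in msq_destroy() */
} msq;

static msq_node *msq_node_new(int value) {
    msq_node *n = malloc(sizeof *n);
    if (!n) abort();
    atomic_init(&n->next, NULL);
    n->retired_next = NULL;
    n->value = value;
    return n;
}

void msq_init(msq *q) {
    msq_node *dummy = msq_node_new(0);
    atomic_init(&q->head, dummy);
    atomic_init(&q->tail, dummy);
    atomic_init(&q->retired, NULL);
}

void msq_enqueue(msq *q, int value) {
    msq_node *node = msq_node_new(value);                      /* E1-E3 */
    for (;;) {
        msq_node *tail = atomic_load(&q->tail);                 /* E5 */
        msq_node *next = atomic_load(&tail->next);              /* E6 */
        if (tail != atomic_load(&q->tail)) continue;            /* E7 */
        /* The window: a thread suspended here still holds `tail`. With a
         * plain free() in dequeue, E9 could write into dead memory. Here
         * `tail` is never freed early, and if it was dequeued meanwhile its
         * next is non-NULL, so the CAS below fails and we retry. */
        if (next == NULL) {                                     /* E8 */
            if (atomic_compare_exchange_weak(&tail->next, &next, node)) { /* E9 */
                /* Swing Tail to the new node; failure means someone helped. */
                atomic_compare_exchange_strong(&q->tail, &tail, node);
                return;
            }
        } else {
            /* Tail is lagging behind: help advance it. */
            atomic_compare_exchange_strong(&q->tail, &tail, next);
        }
    }
}

bool msq_dequeue(msq *q, int *out) {
    for (;;) {
        msq_node *head = atomic_load(&q->head);
        msq_node *tail = atomic_load(&q->tail);
        msq_node *next = atomic_load(&head->next);
        if (head != atomic_load(&q->head)) continue;
        if (head == tail) {
            if (next == NULL) return false;                     /* empty */
            atomic_compare_exchange_strong(&q->tail, &tail, next);
        } else {
            /* Tail never lags behind Head, so a successor exists here. */
            assert(next != NULL);
            int value = next->value;          /* read before the CAS */
            if (atomic_compare_exchange_strong(&q->head, &head, next)) {
                /* Instead of free(head): park the old dummy for later. */
                msq_node *old = atomic_load(&q->retired);
                do {
                    head->retired_next = old;
                } while (!atomic_compare_exchange_weak(&q->retired, &old, head));
                *out = value;
                return true;
            }
        }
    }
}

/* Only safe once no other thread touches q any more. */
void msq_destroy(msq *q) {
    msq_node *n = atomic_load(&q->retired);
    while (n) { msq_node *r = n->retired_next; free(n); n = r; }
    n = atomic_load(&q->head);
    while (n) { msq_node *nx = atomic_load(&n->next); free(n); n = nx; }
}
```

The price of this policy is memory that grows with every dequeue until `msq_destroy()`; a long-running reactor would need a real reclamation scheme (for example hazard pointers or epoch-based reclamation), which this sketch does not attempt.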

Please tell me if there's an error in my thinking here. Can it be...? 🤔

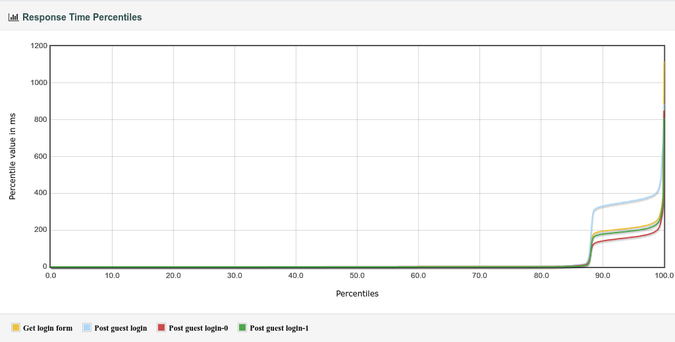

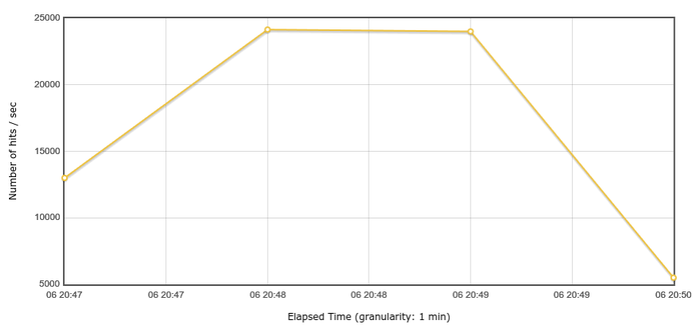

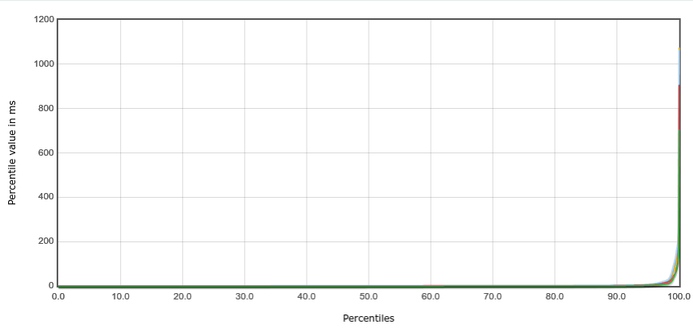

Meanwhile, after fixing and improving lots of things, I checked the alternative implementation using #mutexes again, and surprise: although it's still a bit slower, the difference is now very, very small. And it has the clear advantage that it never crashes. 🙈 I'm seriously considering dropping all the lock-free #atomics stuff again and just going with mutexes.
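For comparison, a mutex-based queue can stay quite small. The sketch below follows the blocking two-lock queue that the same paper[1] also presents: one lock for the head, one for the tail, plus a dummy node so enqueuers and dequeuers rarely contend. Whether poser's mutex variant looks like this is an assumption, and all names (`tlq`, `tlq_enqueue`, ...) are hypothetical. Freeing a dequeued node is unproblematic here: an enqueuer's last access to the old dummy is the store that made its successor visible.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdlib.h>

typedef struct tlq_node {
    /* Atomic because, with only the dummy left, an enqueuer and a
     * dequeuer access the same node under different locks. */
    _Atomic(struct tlq_node *) next;
    int value;
} tlq_node;

typedef struct {
    tlq_node *head;              /* dummy node, guarded by head_lock */
    tlq_node *tail;              /* last node, guarded by tail_lock */
    pthread_mutex_t head_lock;
    pthread_mutex_t tail_lock;
} tlq;

void tlq_init(tlq *q) {
    tlq_node *dummy = malloc(sizeof *dummy);
    if (!dummy) abort();
    atomic_init(&dummy->next, NULL);
    dummy->value = 0;
    q->head = q->tail = dummy;
    pthread_mutex_init(&q->head_lock, NULL);
    pthread_mutex_init(&q->tail_lock, NULL);
}

void tlq_enqueue(tlq *q, int value) {
    tlq_node *node = malloc(sizeof *node);
    if (!node) abort();
    node->value = value;
    atomic_init(&node->next, NULL);
    pthread_mutex_lock(&q->tail_lock);
    /* While we hold tail_lock, the tail never has a successor. */
    assert(atomic_load(&q->tail->next) == NULL);
    atomic_store(&q->tail->next, node);   /* publishes node to dequeuers */
    q->tail = node;                       /* no dereference of the old tail */
    pthread_mutex_unlock(&q->tail_lock);
}

bool tlq_dequeue(tlq *q, int *out) {
    pthread_mutex_lock(&q->head_lock);
    tlq_node *old = q->head;
    tlq_node *next = atomic_load(&old->next);
    if (next == NULL) {                   /* empty */
        pthread_mutex_unlock(&q->head_lock);
        return false;
    }
    *out = next->value;
    q->head = next;                       /* next becomes the new dummy */
    pthread_mutex_unlock(&q->head_lock);
    free(old);
    return true;
}

/* Only safe once no other thread touches q any more. */
void tlq_destroy(tlq *q) {
    tlq_node *n = q->head;
    while (n) { tlq_node *nx = atomic_load(&n->next); free(n); n = nx; }
    pthread_mutex_destroy(&q->head_lock);
    pthread_mutex_destroy(&q->tail_lock);
}
```

Compared with the lock-free sketch, there is no retry loop and no reclamation problem at all, which matches the "it never crashes" experience; the cost is that threads on the same end of the queue serialize on one mutex.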

[1] https://dl.acm.org/doi/10.1145/248052.248106