My current plan, to move from SSD to SATADOM (and to smaller disks, effectively reducing my zpool size), is to use `zpool add` (followed by `zpool remove` on the old device), not `zpool replace`.

See what was done over here: https://infosec.exchange/@bob_zim/115531520404665696

I'll be going from this:

```
[18:13 r730-01 dvl ~] % zpool list zroot
NAME    SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
zroot   424G  32.8G   391G        -         -    14%     7%  1.00x    ONLINE  -
[18:13 r730-01 dvl ~] % gpart show da0
=>       40  937703008  da0  GPT  (447G)
         40       1024    1  freebsd-boot  (512K)
       1064        984       - free -  (492K)
       2048   41943040    2  freebsd-swap  (20G)
   41945088  895756288    3  freebsd-zfs  (427G)
  937701376       1672       - free -  (836K)
```

to this:

```
dvl@r730-04:~ $ zpool list zroot
NAME    SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
zroot   107G  1.07G   106G        -         -     0%     0%  1.00x    ONLINE  -
errors: No known data errors
dvl@r730-04:~ $ gpart show ada0
=>       40  242255584  ada0  GPT  (116G)
         40     532480     1  efi  (260M)
     532520       2008        - free -  (1.0M)
     534528   16777216     2  freebsd-swap  (8.0G)
   17311744  224942080     3  freebsd-zfs  (107G)
  242253824       1800        - free -  (900K)
```
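As a sanity check on the shrink, the partition sizes can be recomputed from the `gpart show` sector counts and compared with what the pool actually holds. A minimal Python sketch, assuming 512-byte sectors (consistent with da0's 937703008 sectors being reported as 447G) and using the r730-04 layout as the target; the helper names and the 80% headroom figure are hypothetical choices, not ZFS rules.

```python
SECTOR_BYTES = 512  # assumption: 512-byte logical sectors, as gpart's (447G) figure implies
GIB = 1024 ** 3     # gpart's "G" suffix is binary (GiB)


def sectors_to_gib(sectors: int) -> float:
    """Convert a gpart sector count to GiB."""
    return sectors * SECTOR_BYTES / GIB


def fits_after_shrink(alloc_gib: float, new_part_sectors: int, headroom: float = 0.8) -> bool:
    """True if allocated data stays below `headroom` of the new partition.

    The 80% default is a hypothetical safety margin, not a ZFS requirement.
    """
    return alloc_gib <= headroom * sectors_to_gib(new_part_sectors)


old_zfs = 895756288  # freebsd-zfs partition on da0 (r730-01)
new_zfs = 224942080  # freebsd-zfs partition on ada0 (r730-04)

print(f"old freebsd-zfs: {sectors_to_gib(old_zfs):.1f} GiB")
print(f"new freebsd-zfs: {sectors_to_gib(new_zfs):.1f} GiB")
print("32.8G allocated fits:", fits_after_shrink(32.8, new_zfs))
```

With 32.8G allocated on a roughly 107 GiB partition, there is plenty of room; the same check would flag a pool whose ALLOC exceeds what the smaller device can hold.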

Zimmie (@bob_zim@infosec.exchange)

@jornane@ipv6.social @ronnie_bonkers@mastodon.social ‘zpool replace’ wouldn’t work in this particular case:

> The size of new-device must be greater than or equal to the minimum size of all the devices in a mirror or raidz configuration.

The point here was to increase swap space, which means the space remaining for ZFS to use would be smaller.
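Because `zpool replace` refuses a smaller device, the add-then-remove route relies on OpenZFS top-level device removal: `zpool remove` evacuates the old vdev's data onto the remaining vdevs. The Python sketch below only builds the command sequence as a dry run and executes nothing. The device names `da0p3` and `ada0p3` are assumptions for illustration, and it assumes a pool with no raidz top-level vdevs and matching sector sizes (ashift), which device removal requires. `zpool wait` needs OpenZFS 2.0 or later, and boot code on the new disk has to be set up separately.

```python
import shlex


def shrink_plan(pool: str, old_dev: str, new_dev: str) -> list[list[str]]:
    """Dry-run command sequence for moving `pool` from old_dev onto a smaller new_dev.

    Device names are hypothetical; boot partitions and loader setup on the
    new disk are NOT handled here.
    """
    return [
        # Add the new (smaller) partition as a second top-level vdev (a stripe).
        ["zpool", "add", pool, new_dev],
        # No-op preview: prints the estimated memory used by the removal mapping table.
        ["zpool", "remove", "-n", pool, old_dev],
        # Evacuate the old top-level vdev and remove it from the pool.
        ["zpool", "remove", pool, old_dev],
        # Block until the evacuation has finished.
        ["zpool", "wait", "-t", "remove", pool],
        ["zpool", "status", pool],
    ]


if __name__ == "__main__":
    for cmd in shrink_plan("zroot", "da0p3", "ada0p3"):
        print(shlex.join(cmd))
```

Printing the plan first makes it easy to eyeball the device names before running anything against a root pool.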

When something on a ZFS system goes rogue and fills the disk, it would be nice if `sudo` still worked.

I know of `quota`, `reservation`, and `refreservation`.

I've tested with `zfs create -o refreservation=5GB zroot/reservation` etc., but the system still gets to the point of disk space exhaustion and `sudo` starts getting uppity.

So far, I think the easiest approach may be a quota:

`zfs set quota=15G zroot/var/log`

That is similar to creating a separate zpool of size 15G and moving /var/log over to that zpool.
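To see how close a dataset is to its quota before things start failing, `zfs get` can be run in scripted mode (`-H`: tab-separated, no header) with exact byte values (`-p`). A minimal Python sketch that parses that output; the 90% warning threshold is an arbitrary assumption, and the sample numbers are made up for illustration rather than taken from a real system.

```python
import subprocess
from typing import Dict, Optional


def parse_zfs_get(text: str) -> Dict[str, int]:
    """Parse `zfs get -Hp -o property,value ...` output into {property: bytes}."""
    props: Dict[str, int] = {}
    for line in text.strip().splitlines():
        prop, value = line.split("\t")
        # An unset quota may show as "none" (or 0 in parsable mode); treat both as 0.
        props[prop] = 0 if value in ("none", "-") else int(value)
    return props


def quota_usage(props: Dict[str, int]) -> Optional[float]:
    """Fraction of the quota in use, or None when no quota is set."""
    quota = props.get("quota", 0)
    return props["used"] / quota if quota else None


def read_dataset(dataset: str) -> Dict[str, int]:
    """Query quota and used space for one dataset (requires the zfs CLI)."""
    out = subprocess.run(
        ["zfs", "get", "-Hp", "-o", "property,value", "quota,used", dataset],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_zfs_get(out)


# Illustrative sample: a 15 GiB quota with 14.5 GiB used (made-up numbers).
sample = "quota\t16106127360\nused\t15569256448\n"
usage = quota_usage(parse_zfs_get(sample))
if usage is not None and usage > 0.9:  # 90% threshold is arbitrary
    print(f"zroot/var/log at {usage:.0%} of its quota")
```

On a live system, `quota_usage(read_dataset("zroot/var/log"))` would replace the sample, for example from a periodic cron job.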

I've just used them, so time to repost this:

Enhancing FreeBSD Stability with ZFS Pool Checkpoints

https://it-notes.dragas.net/2024/07/01/enhancing-freebsd-stability-with-zfs-pool-checkpoints/
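The basic checkpoint pattern is to take a pool checkpoint before a risky change and keep it until the system has been verified. A pool can hold only one checkpoint at a time, so it has to be discarded with `zpool checkpoint -d` before the next one. This is a rough Python sketch, not the article's method: the injectable `run` parameter and the example command are assumptions for illustration, and rewinding is a separate import-time step (`zpool import --rewind-to-checkpoint`) that is not covered here.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], object]


def run_checked(cmd: Sequence[str]) -> None:
    """Default runner: execute the command and raise if it exits non-zero."""
    subprocess.run(list(cmd), check=True)


def with_checkpoint(pool: str, change: Sequence[str], run: Runner = run_checked) -> None:
    """Take a pool checkpoint, then run a risky change.

    The checkpoint is deliberately kept whether or not the change succeeds:
    discard it only after verifying the system (e.g. after a reboot).
    """
    run(["zpool", "checkpoint", pool])
    try:
        run(change)
    except Exception:
        print(f"change failed; checkpoint on {pool} is still available for a rewind")
        raise
    print(f"verify the system, then run: zpool checkpoint -d {pool}")
```

For example, `with_checkpoint("zroot", ["freebsd-update", "install"])` would checkpoint `zroot` before installing updates; passing a list's `append` as `run` records the commands without executing them.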

Latest Valuable News - 2025/11/17 available.

https://vermaden.wordpress.com/2025/11/17/valuable-news-2025-11-17/

Past releases: https://vermaden.wordpress.com/news/

#verblog #vernews #news #bsd #freebsd #openbsd #netbsd #linux #unix #zfs #opnsense #ghostbsd #solaris #vermadenday


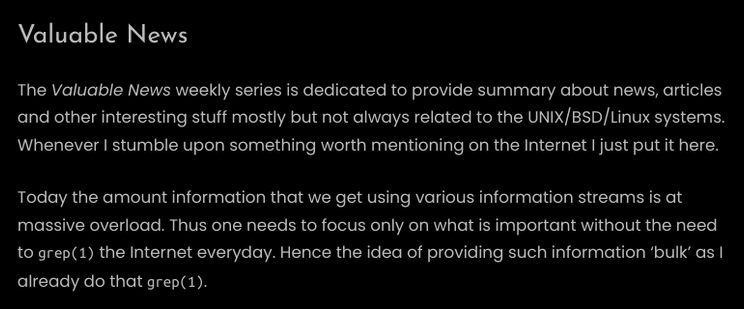

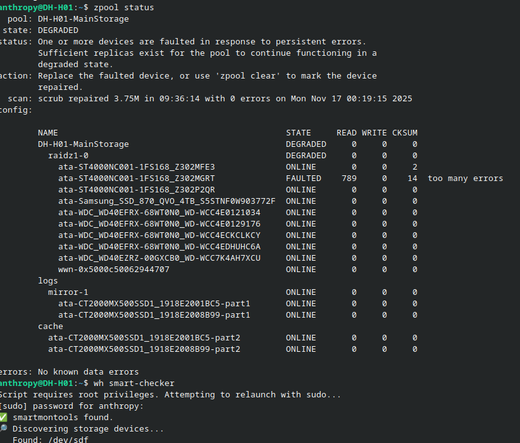

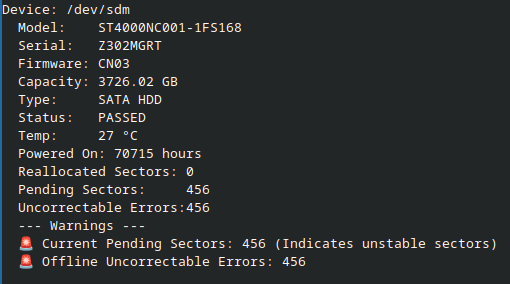

Reminder that SMART is not magic: in my experience, disks are dead long before SMART stops reporting them as 'PASSED'.

I actually came across the perfect example today: two disks in my backup server are having issues. One is clearly broken; the other gave 2 checksum errors.

Despite this, neither disk is 'failed' according to SMART: one has 456 offline uncorrectable / pending sectors, and the other looks fine.

Don't rely on broken hardware to tell you that it's broken.
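In that spirit, a script can look at the raw SMART counters and ZFS checksum errors rather than the overall verdict. A minimal Python sketch that parses the ATA attribute table printed by `smartctl -A` and flags a disk when reallocated (ID 5), pending (197) or offline-uncorrectable (198) sectors are non-zero, whatever the overall health says. The sample table is trimmed, its values are illustrative (modeled on the 456 count mentioned above), and NVMe devices print a different format that this does not handle.

```python
WATCHED = {
    5: "Reallocated_Sector_Ct",
    197: "Current_Pending_Sector",
    198: "Offline_Uncorrectable",
}


def raw_counters(smartctl_a: str) -> dict[int, int]:
    """Map attribute ID -> raw value from the `smartctl -A` ATA attribute table."""
    counters = {}
    for line in smartctl_a.splitlines():
        fields = line.split()
        # Attribute rows start with a numeric ID; RAW_VALUE is the 10th column.
        if len(fields) >= 10 and fields[0].isdigit() and fields[9].isdigit():
            counters[int(fields[0])] = int(fields[9])
    return counters


def suspicious(smartctl_a: str, zfs_cksum_errors: int = 0) -> list[str]:
    """Reasons to distrust a disk, independent of SMART's PASSED/FAILED verdict."""
    counters = raw_counters(smartctl_a)
    reasons = [f"{WATCHED[i]}={counters[i]}" for i in WATCHED if counters.get(i, 0) > 0]
    if zfs_cksum_errors > 0:
        reasons.append(f"ZFS CKSUM errors={zfs_cksum_errors}")
    return reasons


# Trimmed, illustrative `smartctl -A` output (not from a real disk).
sample = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       0
197 Current_Pending_Sector  0x0012   100   100   000    Old_age   Always       -       456
198 Offline_Uncorrectable   0x0010   100   100   000    Old_age   Offline      -       456
"""
print(suspicious(sample))
```

The CKSUM count would come from the per-device column in `zpool status`; feeding it in alongside SMART catches the second disk in the example above, which SMART considers fine.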

Recording filesystem activity?

The more disk benchmarking I try to do, the more I want to accurately simulate the actual workloads running on my system. I’m looking for a way to collect data about ZFS filesystem usage over a period of time, so I can then craft fio benchmarks closer to what actually happens on the system.

I don’t need to get overly scientific; for the output, I’m looking for something like:

- % mix of reads vs writes

- block size the application tried to write

- average queue depth (how ‘busy’ the system is)

Ideally I’d let this run for a few days to collect a sufficient sample.

Does something like this exist?
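Whatever collector ends up producing the numbers (OpenZFS's `zpool iostat -r` request-size histograms and `zpool iostat -q` queue statistics are one possible source), turning them into a fio job is fairly mechanical. A minimal Python sketch, assuming the collected data has already been reduced to read/write operation counts, a histogram of request sizes in KiB, and periodic queue-depth samples; that input shape, the `randrw` pattern, the `posixaio` engine and the placeholder directory are assumptions, while `rwmixread`, `bssplit` and `iodepth` are standard fio options.

```python
from collections import Counter
from statistics import mean


def fio_job(reads: int, writes: int, size_hist: Counter, qdepth_samples: list[int],
            directory: str = "/tank/fio-test", runtime_s: int = 300) -> str:
    """Render a fio job file approximating an observed workload.

    reads/writes: operation counts; size_hist: {request size in KiB: op count};
    qdepth_samples: periodic samples of outstanding I/Os. `directory` is a
    hypothetical placeholder path.
    """
    read_pct = round(100 * reads / (reads + writes))
    total = sum(size_hist.values())
    pcts = {kib: 100 * n // total for kib, n in size_hist.items()}
    # Give the rounding remainder to the most common size so bssplit sums to 100.
    pcts[max(size_hist, key=size_hist.get)] += 100 - sum(pcts.values())
    # bssplit syntax: size/percent pairs joined by ':'; buckets under 1% are dropped.
    bssplit = ":".join(f"{kib}k/{p}" for kib, p in sorted(pcts.items()) if p > 0)
    iodepth = max(1, round(mean(qdepth_samples)))
    lines = [
        "[replay-approx]",
        "ioengine=posixaio",
        "rw=randrw",
        f"rwmixread={read_pct}",
        f"bssplit={bssplit}",
        f"iodepth={iodepth}",
        f"directory={directory}",
        "size=4g",
        "time_based",
        f"runtime={runtime_s}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Made-up summary numbers, just to show the rendered job file.
    print(fio_job(700, 300, Counter({4: 500, 128: 300, 16: 200}), [1, 2, 3, 2]))
```

The resulting file can be run with `fio <jobfile>`. Using the mean queue depth as `iodepth` is a simplification; a bursty workload might be better modelled with several jobs (`numjobs`) or a series of runs at different depths.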
