EU plant neues Massenüberwachungsgesetz mit Vorratsdatenspeicherung & Zwangs-Backdoors – jetzt ist noch Widerspruch möglich!

Hey Leute,

die EU plant gerade ein neues Gesetz zur Vorratsdatenspeicherung, das echt krass werden könnte. Es geht nicht nur um ein paar Verbindungsdaten, sondern darum, jeden Online-Dienst zur Überwachung zu verpflichten – also auch Messenger, Hosting-Anbieter, Webseiten usw.

Das Ganze läuft unter dem Titel:

„Retention of data by service providers for criminal proceedings“

Hier kann man bis zum 18. Juni 2025 Feedback abgeben: Have Your Say

Was ist geplant?

- Pflicht zur Datenspeicherung mit Identitätsbindung – also alles, was du online machst, muss auf dich zurückführbar sein.

- Sanktionen für Dienste, die keine Nutzerüberwachung einbauen – darunter könnten auch VPNs, selbstgehostete Sachen oder Open-Source-Projekte fallen.

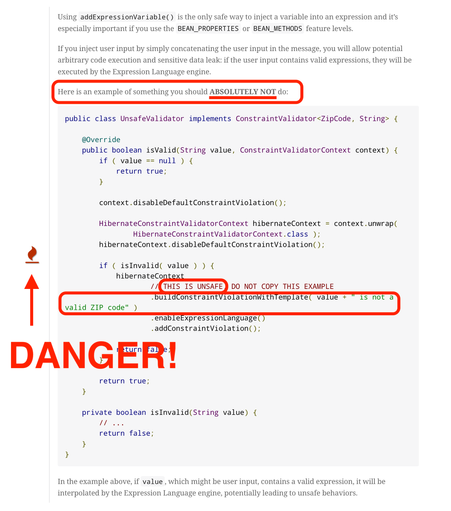

- Backdoors in Geräte und Software – mit Hardwareherstellern soll zusammengearbeitet werden, um „gesetzlichen Zugriff“ zu ermöglichen.

- Auch kleine Anbieter betroffen – es geht ausdrücklich nicht nur um Meta, Google & Co.

Das Ganze basiert auf Empfehlungen einer „High Level Group“, deren Mitglieder komplett geheim gehalten werden. Patrick Breyer (Piraten/MEP) @echo_pbreyer hat nachgefragt – die EU hat ihm eine geschwärzte Liste geschickt.

Laut EDRi wurde die Zivilgesellschaft explizit ausgeschlossen. Lobbyismus deluxe.

Was kann man tun?

Einfach Feedback abgeben, geht in 2 Minuten.

Kurz schreiben, dass man gegen anlasslose Vorratsdatenspeicherung und Überwachung ist, reicht schon. Jeder Kommentar zählt.

Deadline ist der 18. Juni 2025, Mitternacht (Brüsseler Zeit).

Wäre gut, wenn wir aus der IT-Szene da nicht still bleiben. Das betrifft wirklich alle – Entwickler, Admins, SysOps, Hoster, ganz normale Nutzer.

HyperSoop

HyperSoop