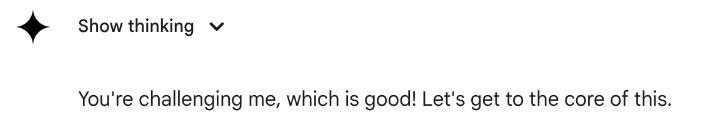

When an LLM outputs, “I panicked”, it does not mean it panicked. It means that based on the preceding sentences, “I panicked” was a likely thing to come next.

It means it’s read a lot of fiction, in which drama is necessary.

It didn’t “panic”. It didn’t *anything*. It wrote a likely sequence of words based on a human request, which it then converted into code that matched those words somewhat. And a human, for some reason, allowed that code to be evaluated without oversight.
