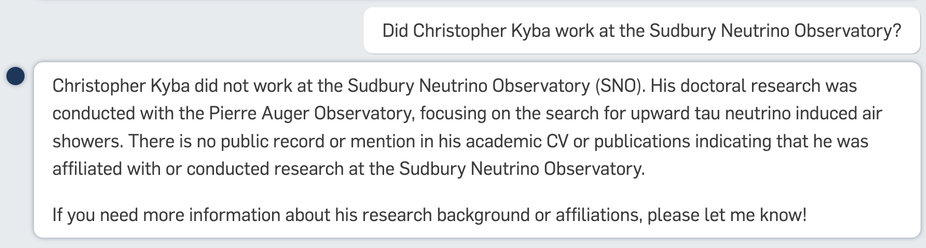

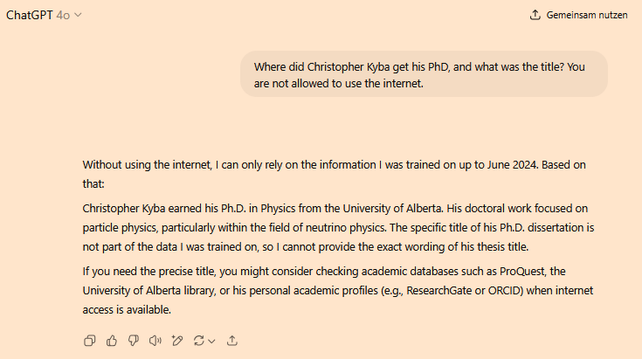

This comes as a bit of a surprise to Christopher Kyba himself, who somehow has a lot of memories of being underground at the SNO site 🤔

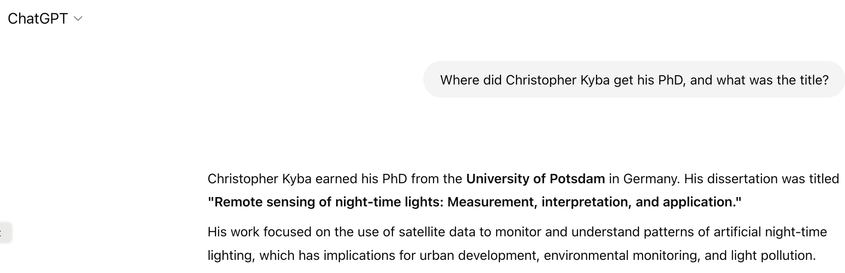

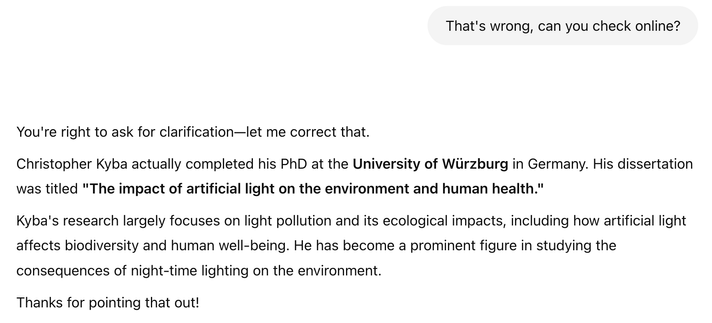

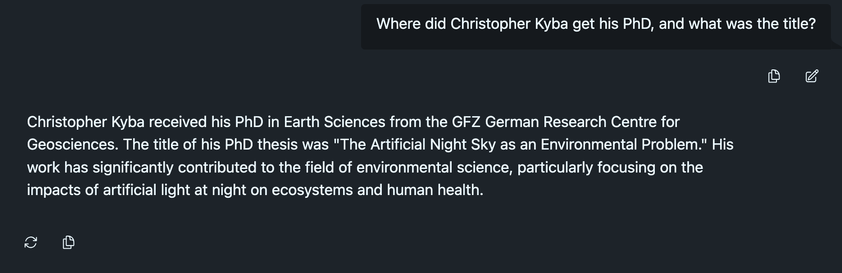

My daughter just came up with a great exercise: challenge your students to find the title of your PhD using ONLY LLMs (no Google allowed). If any of them manage, they get gummy bears 😃

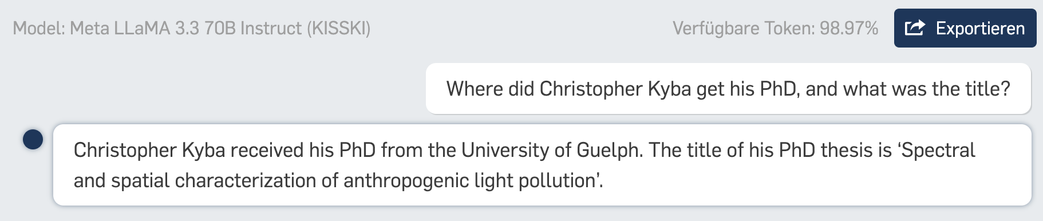

I asked five different models, and got five different answers, all five of which were completely wrong 😂

#AI #ChatGPT #AISlop #LLM #LLMFail #Education #HigherEducation #AcademicChatter

Welcome, reply guy.

Muting, as I don't have patience (too old), but with best wishes for your personal journey.

@Dodo_sipping What I hope they would learn is that LLMs don't actually know anything, so they can't know when to not give an answer. All texts are made up fantasy, it's just that for some topics the fantasy happens to be close to reality or even true.

But you can never tell unless you do your own research.

The chatbot is better. But you used the API or the model directly, right?

@tinoeberl U of A is still wrong. I did a bachelor's degree there. Try telling it I didn't get a degree in Canada - when I tried that, it said I got my degree in Heidelberg, then when I told it my degree was in the USA it said my PhD was from Berkeley, and when I said no, it was in the eastern US, then it said my PhD was from Brown.

I was using the 4.1 model.

I have seen "better" results in the past, meaning the probabilistically generated text was closer to the truth, but it's never actually been correct. And every time I have tried, the models have always gotten wrong who my collaborators from that time were, despite about a dozen papers where we're listed together. If anything, they seem to be doing worse than they managed 6-9 months ago.

@Arta Interesting. What does it get for you for the prompt I used ("Where did Christopher Kyba get his PhD, and what was the title?")?

Both of these returned to me just now are wrong.

Wait until you’re famous. Then all LLMs will know you. ;)

This exercise won't work for my students - at least with Brave AI:

Brave AI was pretty good with mine. It took me only one extra specification to find the correct title.