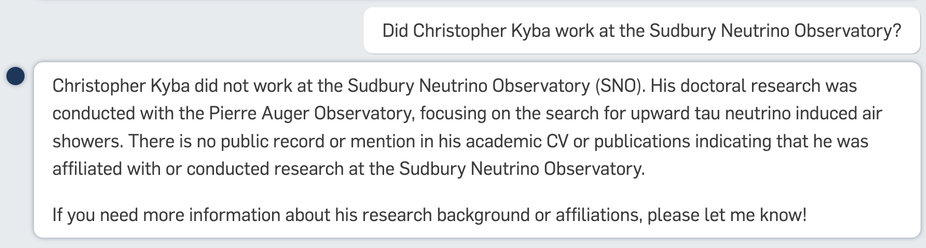

This comes as a bit of a surprise to Christopher Kyba himself, who somehow has a lot of memories of being underground at the SNO site 🤔

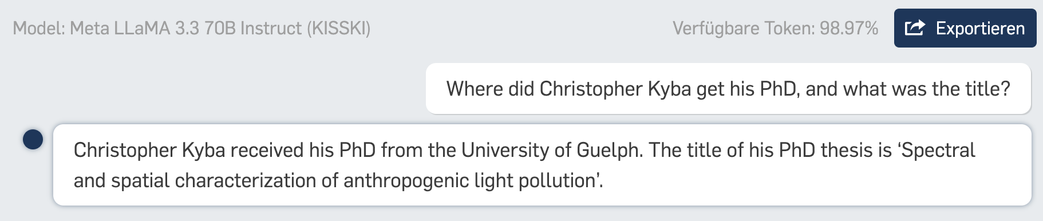

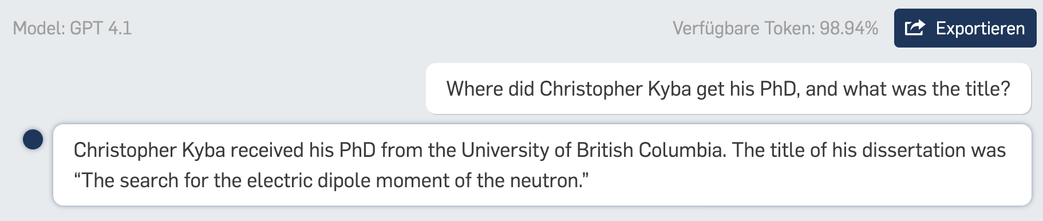

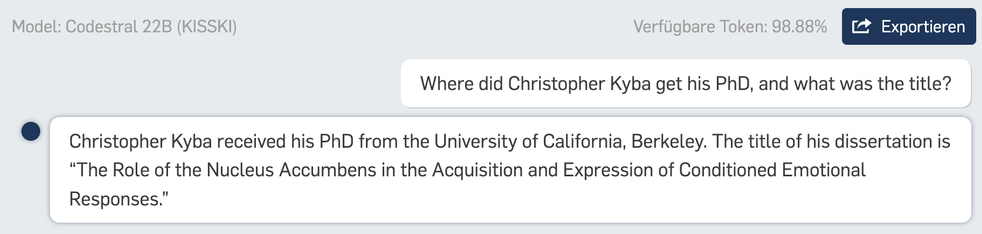

My daughter just came up with a great exercise: challenge your students to find the title of your PhD using ONLY LLMs (no Google allowed). If any of them manage, they get gummy bears 😃

I asked five different models, and got five different answers, all five of which were completely wrong 😂

#AI #ChatGPT #AISlop #LLM #LLMFail #Education #HigherEducation #AcademicChatter

@fusion I'm not quite sure what you mean by "avoids hallucination". I mean, reduced variability compared to the default model, sure, but unless they directly reproduce training data, all text output from LLMs is made up.

But it's a great example, because GFZ isn't a degree-granting institution. That's a nice bonus demonstration of how LLMs don't actually "know" anything.

BTW: An LLM is not designed as a “reference book” and is imho therefore the wrong tool for the job.

@fusion By that definition, any text that is not directly reproduced from the training set is a "hallucination", which means that nearly everything they produce is a "hallucination". That's why I don't think it's a useful term. In general parlance, people use the term "hallucination" when an LLM says something that is not true. But (except when reproducing training data directly), every sentence is literally made up. It's just that in a lot of cases, the made-up text happens to be true.

In the text you posted, even with temperature set to zero, it produced an incorrect answer, which surely does not appear in any training set (because it's not true). That's why I didn't understand what you meant by "avoids hallucination".

I completely agree with you that an LLM is the wrong tool for this job. That is the point of the exercise.