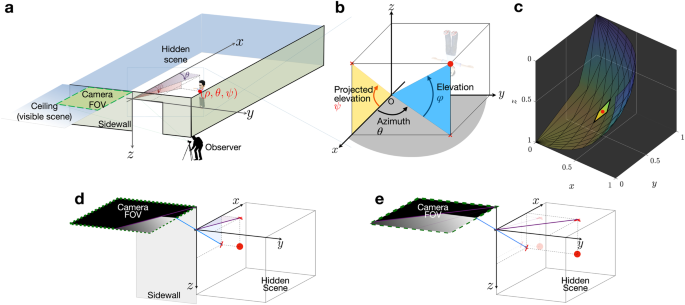

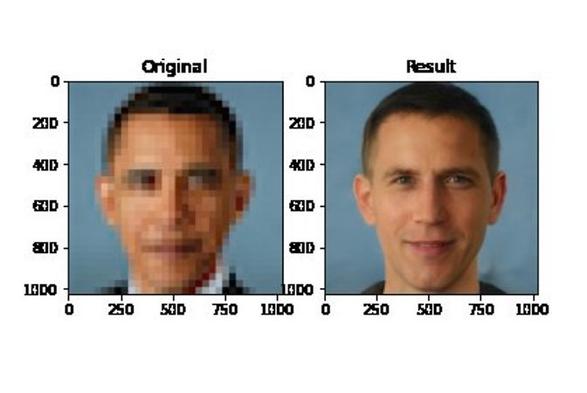

@mcc This was possible before 2010. There were face-enhancement systems for CCTV in the ’90s that were trained on a set of faces and would fill in the gaps.

The key idea is that the image is not the only information you have. You know, a priori, that this blob is a face. Or you know that it’s a number plate, so the shapes must be letters and digits. And maybe it’s a UK number plate, so it must be letters and digits in a particular sequence. And maybe it’s a number plate for a specific type of vehicle, so (assuming it’s a valid number plate) it must be one of a known set.
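A minimal sketch of stacking those priors, assuming a hypothetical OCR stage that outputs, for each of the seven character slots, a few candidate characters with probabilities. The pattern is the simplified current-style UK format (two letters, two digits, three letters, e.g. "AB12 CDE") and ignores the rules about which letters are actually issued; treating slots as independent is also an assumption, and `decode_plate`, `plate_score` and the `known` list are illustrative names.

```python
import string
from typing import Dict, List, Optional

# Simplified current-style UK plate: 2 letters, 2 digits, 3 letters ("AB12 CDE").
# Assumption: ignores which letters/age identifiers are actually issued.
UK_PATTERN = "LLDDLLL"
ALLOWED = {"L": set(string.ascii_uppercase), "D": set(string.digits)}

# One {candidate character: probability} dict per slot, from a hypothetical OCR stage.
Reading = List[Dict[str, float]]


def plate_score(plate: str, reading: Reading, floor: float = 1e-6) -> float:
    """Joint probability of a plate, assuming the slots are independent."""
    score = 1.0
    for ch, probs in zip(plate.replace(" ", ""), reading):
        score *= probs.get(ch, floor)  # unseen characters get a tiny floor
    return score


def decode_plate(reading: Reading, known: Optional[List[str]] = None) -> Optional[str]:
    if known:
        # Strongest prior: the plate must be one of a known set.
        return max(known, key=lambda p: plate_score(p, reading))
    chars = []
    for slot, probs in zip(UK_PATTERN, reading):
        # Format prior: keep only candidates that are legal in this slot.
        legal = {c: p for c, p in probs.items() if c in ALLOWED[slot]}
        if not legal:
            return None  # nothing plausible fits this slot
        chars.append(max(legal, key=legal.get))
    return "".join(chars[:4]) + " " + "".join(chars[4:])


if __name__ == "__main__":
    # Hypothetical blurry reading: each slot lists what it might be.
    reading = [
        {"8": 0.5, "B": 0.4},
        {"D": 0.6, "0": 0.3},
        {"1": 0.5, "I": 0.45},
        {"O": 0.5, "0": 0.4},
        {"C": 0.9},
        {"5": 0.5, "S": 0.45},
        {"E": 0.8, "F": 0.1},
    ]
    print("".join(max(p, key=p.get) for p in reading))            # no prior: 8D1OC5E
    print(decode_plate(reading))                                  # format prior: BD10 CSE
    print(decode_plate(reading, known=["BD10 CSF", "RD10 CSE"]))  # known set: BD10 CSF
```

Each extra prior narrows the answer space: the naive per-slot reading is not even a valid plate, the format alone fixes the letter/digit confusions, and a known set can override a slot the image got wrong.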

This lets you treat it as a lossy compression problem with an external dictionary, rather than an image enhancement problem. The data you have is the lossily compressed data and the a priori dataset is the dictionary. Now you ‘just’ have to write the decoder.
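A toy version of that decoder, under loud assumptions: the lossy "encoder" is modelled as plain block averaging, the dictionary is a hypothetical handful of tiny high-resolution images, and decoding is a nearest-neighbour search over the degraded dictionary entries. Real systems use far larger priors and learned models; `downsample`, `sq_dist` and `decode` are illustrative names.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]


def downsample(img: Image, factor: int) -> Image:
    """Block-average: stands in for the lossy 'encoder' (distance, blur, sensor)."""
    h, w = len(img), len(img[0])
    return [
        [
            sum(img[y + dy][x + dx] for dy in range(factor) for dx in range(factor)) / factor ** 2
            for x in range(0, w, factor)
        ]
        for y in range(0, h, factor)
    ]


def sq_dist(a: Image, b: Image) -> float:
    """Sum of squared pixel differences between two same-sized images."""
    return sum((p - q) ** 2 for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))


def decode(observed: Image, dictionary: Dict[str, Image], factor: int) -> Tuple[str, Image]:
    """Return the dictionary entry whose degraded version best explains the observation."""
    best = min(dictionary, key=lambda k: sq_dist(downsample(dictionary[k], factor), observed))
    return best, dictionary[best]
```

Given a 2×2 observation and 4×4 dictionary entries, `decode` returns a full 4×4 image: every pixel beyond what was observed comes from the dictionary, which is why the result is only right if the true image (or something close) was in it.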

The interesting experiment that drove some of this was a project to put biometrics on a magnetic strip for MoD systems that found that 50 bits was sufficient to uniquely identify facial features (which makes intuitive sense: there were around 2³² people when they did this work, and even if they all had unique faces, 50 bits is ample to have a sparse space containing all of them). From there, it follows that you can extrapolate a face from a few pixels as long as you have the right pixels (spoiler: you usually don’t).
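To put a number on "ample", taking the population as exactly 2³² (an approximation), the fraction of the 50-bit code space that would actually be occupied is tiny. This is only the capacity arithmetic; it says nothing about how evenly real facial codes spread out.

$$
\frac{2^{32}}{2^{50}} = 2^{-18} = \frac{1}{262\,144} \approx 3.8 \times 10^{-6}
$$

So there are 2¹⁸ possible codes for every person alive, which is what makes the space sparse.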

EDIT: I remember a fractal image compression program from the ’90s that claimed to be able to produce more detail than was in the original. It came on a magazine cover disk (floppy, not even a CD). You fed it an image and it would, effectively, produce a small program that generated something very close to that image. It took several minutes to run on my 386, but then you got an image that was resolution independent. You could make it generate arbitrary levels of detail, zooming into things that were single pixels in the original. Zooming in a little gave quite plausible results. The new details were all extrapolated from the rest of the image and nearby pixels, plus whatever biases were present in the generator. After a bit more zooming, you got obvious nonsense, but by then the entire screen was a single pixel from the original image.
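A toy sketch of the general technique, not a reconstruction of that program: 1D partitioned iterated-function-system coding on a list of floats. The block size `r=4`, the contrast clamp `s_max=0.75` and all function names are illustrative choices; real fractal image coders work on 2D blocks and typically also try rotations and reflections. Encoding stores, for each small "range" block, which shrunken larger "domain" block plus an affine tweak `s*d + o` best reproduces it. Decoding iterates those maps from a blank signal, and `scale > 1` runs the same maps on a finer grid, which is where the extra "detail" comes from.

```python
from typing import List, Tuple

# (domain block index in units of the range size, contrast s, brightness o)
Map = Tuple[int, float, float]


def shrink(block: List[float]) -> List[float]:
    """Average neighbouring pairs so a 2R-sample domain block lines up with an R-sample range block."""
    return [(block[i] + block[i + 1]) / 2 for i in range(0, len(block), 2)]


def encode(signal: List[float], r: int = 4, s_max: float = 0.75) -> List[Map]:
    """For each range block, pick the domain block and least-squares s, o that match it best.
    Assumes len(signal) is a multiple of r and at least 2 * r."""
    n = len(signal)
    maps: List[Map] = []
    for start in range(0, n, r):
        rng = signal[start:start + r]
        mr = sum(rng) / r
        best = None
        for d in range(0, n - 2 * r + 1, r):
            dom = shrink(signal[d:d + 2 * r])
            md = sum(dom) / r
            var = sum((v - md) ** 2 for v in dom)
            cov = sum((v - md) * (y - mr) for v, y in zip(dom, rng))
            # Least-squares contrast, clamped so every map is a contraction.
            s = 0.0 if var < 1e-12 else max(-s_max, min(s_max, cov / var))
            o = mr - s * md
            err = sum((s * v + o - y) ** 2 for v, y in zip(dom, rng))
            if best is None or err < best[0]:
                best = (err, d // r, s, o)
        maps.append(best[1:])
    return maps


def decode(maps: List[Map], r: int, scale: int = 1, iterations: int = 30) -> List[float]:
    """Iterate the stored maps from an all-zero signal; scale > 1 decodes on a finer grid."""
    rs = r * scale  # range block size at the output resolution
    x = [0.0] * (len(maps) * rs)
    for _ in range(iterations):
        nxt: List[float] = []
        for d_idx, s, o in maps:
            dom = shrink(x[d_idx * rs:d_idx * rs + 2 * rs])
            nxt.extend(s * v + o for v in dom)
        x = nxt
    return x
```

In this sketch, averaging neighbouring pairs of the `scale=2` output reproduces the `scale=1` output (up to rounding), so zooming is self-consistent; but the new in-between samples are invented entirely by the maps, not recovered from the input.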

he/him