Widely covered MIT paper saying AI boosts worker productivity is, it turns out, complete bullshit.

An MIT spokesperson went on to say that they have no confidence in the veracity or reliability of journalistic institutions that repeat claims made in a student paper that has not undergone peer review.

^this

even the dogforsaken antivax movement of today exists precisely because a *doctor was paid by VC pharmabros to write a BS paper*. Which has since been thoroughly debunked *and* eventually retracted, but as you say (and as we're constantly grimly reminded) the damage has been done.

there's no reason to expect this bunch of them bros to be any better, evidence seems to suggest they're even worse.

@maybenot @gilgwath @GossiTheDog I thought Wakefield did it of his own volition, his angle was that he would later market his own vaccines as safe and rake money in.

The scum is probably responsible for more deaths than Putin, Assad, George W Bush and Agathe Habyarimana combined.

And they're already paying thousands of low wage laborers to write content for them anyway, in the name of RLHF (human feedback to correct AI mistakes, in near real time).

@GossiTheDog

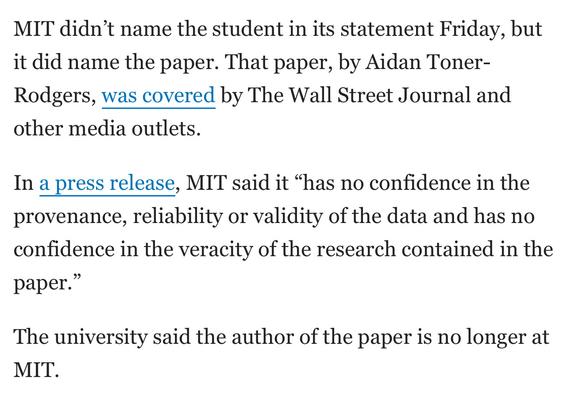

screenshot from the article. It reads:

MIT didn't name the student in its statement Friday, but it did name the paper. That paper, by Aidan Toner-Rodgers, was covered by The Wall Street Journal and other media outlets.

In a press release, MIT said it "has no confidence in the provenance, reliability or validity of the data and has no confidence in the veracity of the research contained in the paper."

The university said the author of the paper is no longer at MIT.

FWIW, here's my take.

0: "AI" means three things nowadays: neural nets, machine learning, and LLM stuff. They are different things.

1: There was a paper in Science last year in which Materials Science types were doing some seriously kewl work on systems with 5 different metals using "machine learning" (gradient descent search in high dimensional spaces). And calling it AI.

2: The Econ. grad student didn't understand this and thought they were doing LLM stuff. Oops.

Machine learning is a field that uses statistics to do its thing. Its tools include neural nets but not LLMs. (I dislike the term "machine learning", but as best I can tell, they're smart sensible folks, statisticians doing gradient descent in insanely high-dimensional spaces.)

Dunno how LLMs could be called "machine learning", since they're exactly and only random text generators.

But the Wikipedia for LLM begins with:

"A large language model (LLM) is a type of machine learning model designed "

Why is that wrong in your view? I'm not trying to gotcha you, this field is new and quite incomprehensible, so it irks me a bit when people reshuffle the categories I'm just learning.

Blokes building LLMs use machine learning.

Blokes doing machine learning don't use LLMs.

Probably an incorrect take. This looks more like deliberate fraud than an econ PhD student making an honest mistake. I say that because of the WSJ's account of how, in Jan 2025, this fraud was brought to the attention of the two MIT professors who championed the lie by a "...computer scientist with experience in materials science (who) questioned how the technology worked, and how a lab THAT HE WASN'T AWARE OF (caps added) had experienced gains in innovation".

MIT is small enough that their star Nobel Econ laureate and any of his little army of econ PhDs could have easily checked with Materials Science. Straight up professional humiliating embarrassment.

Toner-Rodgers' MIT second-year PhD student web page was deleted by MIT. Signs point to an expulsion (fraud), not a suspension (honest mistake).

"Probably an incorrect take"

Yep. I'm more irritated by inconceivable stupidity than deliberate fraud, so that's where I go. But your:

"Straight up professional humiliating embarrassment."

is spot on.

Cassandrich: agreed. Completely.

"If you want to be a bit snarky about it, you can alternatively think of AI as a very overconfident rubber duck that exclusively uses the Socratic method, is prone to irrelevant tangents, and is weirdly obsessed with quirky hats. Whatever floats your ducky."

https://hazelweakly.me/blog/stop-building-ai-tools-backwards/

Did they fire the „AI" that wrote that paper…

Was this paper written with a worker productivity boost?

Silicon Valley is nothing but a hustle now, not for serious people.

I mean, it was a hyphenated Aidan. The clues were right in the name to start.

"The author of the paper is no longer at MIT."

What are the odds that has something to do with academic dishonesty?

@GossiTheDog I like how, despite the "we can't say anything due to privacy" statements, we can infer that the student was kicked out of the program for academic dishonesty since they were apparently a 2nd year PhD at the end of 2024 and are currently no longer at MIT.

Journalists should definitely take a lot of care when looking at arXiv papers. I only ever submitted there when my work was already accepted, but I've seen papers published there with rejection letters attached. It's wild.