Are you f* kidding me, Apple?!

For the first time in a long while, I filed a bug report using Feedback Assistant, because the bug was bad enough to be worth the effort of writing it all down.

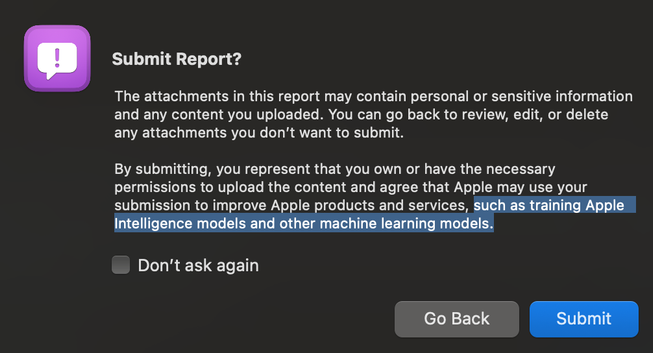

When uploading a sysdiagnose (or probably any other attachment), you get the usual privacy notice that there is likely a lot of private and otherwise sensitive info in those log files. It’s not a great feeling, but it is what it is with diagnostic data, and I mostly trust the folks at Apple to treat it with respect and the Logging system to redact the most serious bits.

However, when filing feedback today, I noticed a new addition to the privacy notice:

"By submitting, you […] agree that Apple may use your submission to [train] Apple Intelligence models and other machine learning models."

WTF? No! I don’t want that. It’s extremely shitty behavior to a) even ask me this in a context where I entrust you with *my* sensitive data to help *you* fix your shit, b) hide it among the other privacy messaging, and c) give me no way to opt out except by not filing a bug report at all.

Do you really need *more* reasons for developers not to file bug reports? Are the people who decided to do this really this ignorant of the reputation Apple’s bug reporting process has in the community? How can you even think for a single second that this is an acceptable idea?

So, WTF, Apple?!