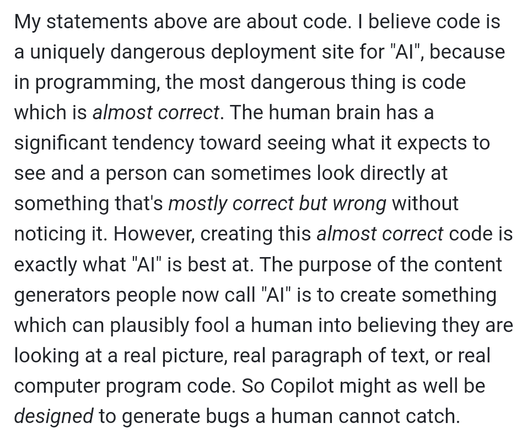

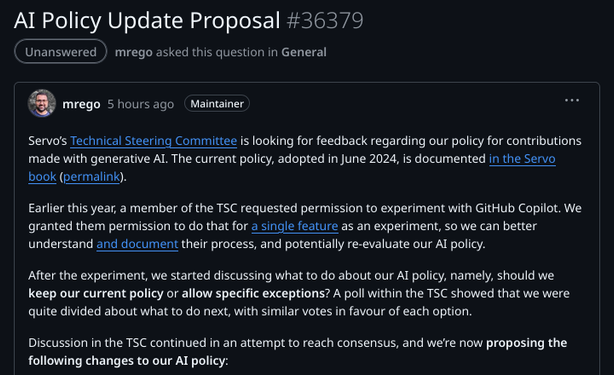

Hello, if you care about Servo (the alternative web browser engine that was originally slated to become the next-generation Firefox but is now a donation-supported project under the Linux Foundation), you should know that Servo has announced a plan to start allowing Servo code to be written by "GitHub Copilot" as of June:

https://floss.social/@servo/114296977894869359

There's a thoughtful post about this by the author of the former policy, which until now has banned this:

https://kolektiva.social/@delan/114319591118095728

FOLLOWUP: Canceled! https://mastodon.social/@mcc/114376955704933891

Servo (@servo@floss.social)

Servo is considering:
- allowing some AI tools for non-code contributions
- allowing maintainers to use GitHub Copilot for some code contributions over the next 12 months

These changes are planned to take effect in June 2025, but we want your feedback. More details: https://github.com/servo/servo/discussions/36379