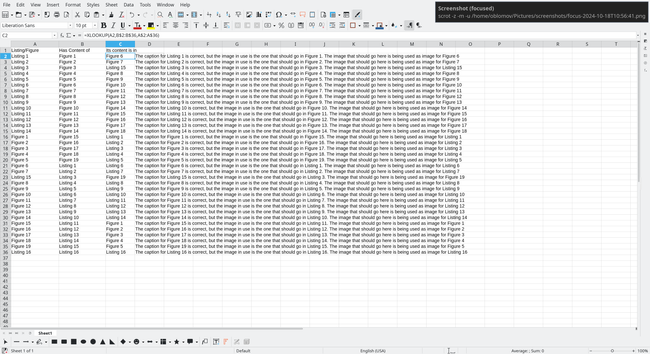

Finally got the proofs for my manuscript about porting #GPUSPH to run on CPU (yes, we went the other way). They completely bungled up all the figures and listings. This will be a nightmare to fix because I have to comment on each of them to say “this figure goes there, and the figure that should be here is at that other place”, and I'm ready to bet they'll *still* mess it up after I'm done adding all the comments.

And they are getting paid big monies because gold open access!