#adobe #ai #adobefirefly #firefly #adobeai #firefly #imagegeneration #videogeneration #vectorgeneration #generativeai

How to Generate More Realistic Images, Videos, & Better Vector Graphics with Adobe Firefly’s Generative AI

Realistic imagery matters more than ever as digital audiences expect believable photos, clips, and even avatars. Adobe Firefly, now unified as a single generative‑AI platform, supports image, video, audio, and vector creation. In April 2025, Adobe unveiled Image Model 4 and the Firefly Video Model; by July 2025, Firefly had expanded with advanced video controls, partner models, and bulk editing. This article explains why these updates matter now and how creative professionals can use them to generate believable content. It reflects information available as of August 1, 2025 [helpx.adobe.com].

What Adobe Firefly offers creators – a quick overview

Firefly is Adobe’s commercially safe generative‑AI platform within Creative Cloud. Users can create images, videos, text effects, and even vectors from natural‑language prompts. Firefly attaches Content Credentials to its outputs, providing transparency about the model used and whether AI helped create the work. Partner models from Google, OpenAI, Black Forest Labs (Flux), and Runway now sit alongside Adobe’s own engines. Paid plans grant more generative credits, and the mobile app (beta) lets you create on iOS or Android, syncing with your desktop projects. Firefly Boards, introduced in June 2025, provides a collaborative canvas for mood boards and ideation.

Why realism is a priority

Clients and audiences recognize the difference between stylised art and a believable scene. Social campaigns, marketing materials, and concept art often demand photos and videos that feel authentic. Adobe Firefly’s latest models improve prompt fidelity, structure control, and resolution. They render people, animals, and architecture with greater accuracy and even allow you to specify camera angles and zooms. The July 2025 video update also tackles motion coherence – an essential step for believable animation.

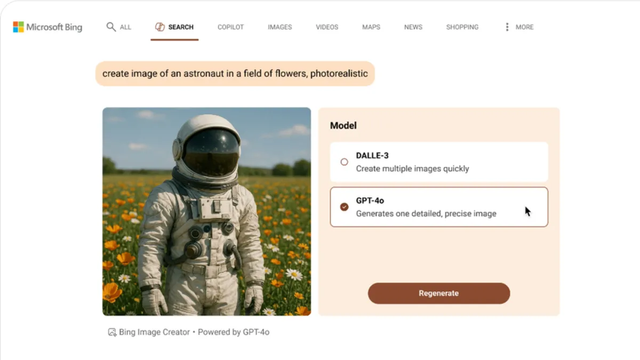

Image Model 4 and Image Model 4 Ultra – photorealism unlocked

How the new models differ

Image Model 4, released in April 2025, offers lifelike image quality, more creative control over structure and style, and the ability to generate outputs up to 2K resolution. It is designed for rapid ideation, producing high‑quality images quickly for illustrations, icons, and everyday creative needs. Image Model 4 Ultra goes further: it excels at photorealistic scenes, human portraits, and small groups with natural detail. Adobe calls Image Model 4 the fastest, most controllable, and most realistic Firefly model yet. For advanced use cases, the two models give professionals a choice between speed and extreme realism [blog.adobe.com].

Best practices for realistic image generation

Using Firefly well depends on the quality of your prompts. Adobe’s guidelines advise you to be specific: use at least three words and avoid vague terms like “generate”. Include a subject, descriptive adjectives, and contextual keywords. Being descriptive improves alignment with your vision; details about characters, environments, and lighting lead to better results. Adding originality through feeling, style, and lighting helps your images stand out, and prompts that convey emotion make them more engaging. Finally, because Adobe Firefly is part of Creative Cloud, you can refine generations afterwards with Generative Fill, Generative Expand, and other Photoshop tools: crop or replace backgrounds, and color‑grade multiple files at once.
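The two concrete rules above are simple enough to check before you spend credits. The following is a minimal sketch of a hypothetical pre-flight helper (`check_prompt` is an illustration, not an Adobe tool or API). It encodes only the three-word minimum and the advice to avoid “generate”, so it cannot judge whether a prompt is actually descriptive.

```python
import re

# Vague instruction words to flag; the guidance above names "generate".
VAGUE_TERMS = {"generate"}
MIN_WORDS = 3


def check_prompt(prompt: str) -> list[str]:
    """Return warnings for a text-to-image prompt; an empty list means no rule was broken."""
    # Split into lowercase words, keeping hyphenated terms like "golden-hour" together.
    words = re.findall(r"[a-z0-9'-]+", prompt.lower())
    warnings = []
    if len(words) < MIN_WORDS:
        warnings.append(f"use at least {MIN_WORDS} words (found {len(words)})")
    for term in sorted(VAGUE_TERMS.intersection(words)):
        warnings.append(f"avoid vague term: {term!r}")
    return warnings


if __name__ == "__main__":
    print(check_prompt("generate cat"))
    print(check_prompt("Close-up portrait of an elderly fisherman, golden-hour light"))
```

The first call reports both problems; the second, more descriptive prompt passes with an empty list.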

Use reference images and style controls

Image Model 4 gives you options to set aspect ratio, content type, and style presets. You can match composition to a reference image, apply aesthetic filters, or use 3D scenes as guides. Partner models such as GPT Image, Flux 1.1 Pro, and Ideogram 3 provide alternative aesthetics; each consumes generative credits at a different rate. Choose Adobe models when you need licensed, commercially safe results; switch to partner models when exploring stylized or experimental looks.

Vector and partner‑model innovations

Adobe Firefly now offers text‑to‑vector capabilities. You can transform prompts into scalable, editable vector graphics for logos, icons, or packaging. Because vectors are clean and resolution‑independent, they integrate well with design workflows. Partner models integrated in July 2025 include Google’s Veo 3, Runway’s Gen‑4, Topaz upscalers, and Moonvalley’s Marey. Firefly Boards allows you to select from these models and compare outputs on a canvas. Choose a model based on the look you need: Veo for cinematic motion blur, Gen‑4 for stylized animations, Topaz for upscaling, or Flux for graphic illustrations. Content credentials still accompany partner‑model outputs, but check each provider’s terms before commercial use.

Video generation in 2025 – new controls and smoother motion

Motion fidelity and composition reference

The July 2025 Firefly video update tackles the biggest complaint: jerky motion. Adobe’s upgraded video model produces smoother transitions; snow particles or an octopus now animate with fewer frame‑to‑frame jumps. While clips remain short and compressed, this improvement makes storyboards and previews feel more believable. The new Composition Reference workflow allows you to upload a base clip and have Firefly replicate its framing or camera movement with entirely new content. This is valuable for cinematographers and storyboard artists; they can modify time of day, set dressing, or weather while preserving motion vectors.

Style presets and keyframe cropping

Firefly’s advanced video controls include style presets such as claymation, anime, and line‑art, allowing quick exploration of looks. Keyframe cropping uses your uploaded first and last frames to automatically reframe videos for vertical, square, or horizontal formats. These features speed up social‑media deliverables without manual pan‑and‑scan editing. They also illustrate how Adobe Firefly can adopt cinematic aesthetics or preserve composition across aspect ratios.
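Reframing for a new format ultimately means choosing a crop window of the target aspect ratio inside the original frame. As a hedged illustration (Adobe does not document how keyframe cropping works internally), the sketch below computes the simplest possible window: the largest centered crop. The function name `center_crop` is hypothetical, and a production tool would usually follow the subject rather than always keeping the center.

```python
def center_crop(width: int, height: int, ratio_w: int, ratio_h: int) -> tuple[int, int, int, int]:
    """Return (x, y, crop_width, crop_height) for the largest centered crop of a
    width x height frame with aspect ratio ratio_w:ratio_h."""
    # Compare aspect ratios with integer cross-multiplication to avoid float error.
    if width * ratio_h > height * ratio_w:
        # Source is wider than the target: keep the full height, trim the sides.
        crop_h = height
        crop_w = height * ratio_w // ratio_h
    else:
        # Source is taller than (or equal to) the target: keep the full width, trim top and bottom.
        crop_w = width
        crop_h = width * ratio_h // ratio_w
    # Round down to even dimensions, which many video encoders expect.
    crop_w -= crop_w % 2
    crop_h -= crop_h % 2
    x = (width - crop_w) // 2
    y = (height - crop_h) // 2
    return x, y, crop_w, crop_h


if __name__ == "__main__":
    # Reframe a 1920x1080 landscape clip for vertical, square, and original formats.
    for label, (rw, rh) in {"9:16": (9, 16), "1:1": (1, 1), "16:9": (16, 9)}.items():
        print(label, center_crop(1920, 1080, rw, rh))
```

For a 1920×1080 frame, the 9:16 window is 606×1080 pixels starting 657 pixels from the left edge, which shows how much of a landscape shot a vertical deliverable discards.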

Generative sound effects and avatars

Firefly’s July update introduces Generate Sound Effects (beta). You can type a description like “hissing steam valve” or record a rhythm; Firefly layers a corresponding audio clip onto your video. Although outputs are stereo and somewhat cartoonish, this tool adds atmosphere quickly. Text to Avatar (beta) converts scripts into talking‑head videos with stock avatars and adjustable accents. Use it for explainer videos or training content, but remember that current avatars remain limited and cannot match professional presenters.

Third‑party video models and prompt enhancements

A major change is Firefly’s integration of third‑party video models. You can now choose Google Veo 3 and Runway Gen‑4 for video generation, with Luma AI’s Ray 2 and Pika 2.2 coming later. Partner models bring diverse aesthetics and motion characteristics. Adobe Firefly also includes an Enhance Prompt tool that rewrites vague user instructions into more specific language before generation. Finished clips can be exported directly to Adobe Express or Premiere Pro, streamlining your workflow.

Best practices for video prompts

Clear, descriptive prompts remain the key to realistic videos. Adobe suggests using as many words as necessary to describe lighting, cinematography, color grade, mood, and aesthetic style [helpx.adobe.com]. A recommended structure is: Shot Type Description + Character + Action + Location + Aesthetic. For example, specify the camera perspective (close‑up, wide shot), the character’s appearance and emotion, the action they perform, the environment, and the cinematic feel. Limit prompts to four subjects to avoid confusion. Being explicit about the visual tone (realistic, cinematic, animated, or artistic) helps Firefly meet your expectations. Define actions with dynamic verbs and adverbs, use descriptive adjectives to set the atmosphere, and provide backstory when needed. You can even direct camera movements – pan, zoom, aerial, or low‑angle shots – to achieve a personalized look. Include temporal elements such as time of day or weather to influence mood. Finally, iterate: start with a basic prompt and refine it through successive generations.
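The recommended structure can be expressed as a small template. This is a sketch under stated assumptions: `build_video_prompt` is a hypothetical helper, not a Firefly feature; it assumes the action is phrased so it reads naturally after the subject (for example “trotting through fresh snow”); and it enforces the four-subject limit by raising an error.

```python
MAX_SUBJECTS = 4  # the guidance above suggests at most four subjects per prompt


def build_video_prompt(shot_type: str, subjects: list[str], action: str,
                       location: str, aesthetic: str) -> str:
    """Assemble Shot Type + Character + Action + Location + Aesthetic into one prompt."""
    if not subjects:
        raise ValueError("describe at least one subject")
    if len(subjects) > MAX_SUBJECTS:
        raise ValueError(f"limit prompts to {MAX_SUBJECTS} subjects, got {len(subjects)}")
    # Join subjects naturally: "a", "a and b", or "a, b, and c".
    if len(subjects) <= 2:
        who = " and ".join(subjects)
    else:
        who = ", ".join(subjects[:-1]) + ", and " + subjects[-1]
    # First sentence: what the camera sees; second sentence: the visual tone.
    return f"{shot_type} of {who} {action} {location}. {aesthetic}."


if __name__ == "__main__":
    print(build_video_prompt(
        "Low-angle close-up",
        ["an elderly fisherman with a weathered face, looking hopeful"],
        "slowly hauling in a dripping net",
        "on a wooden pier at dawn",
        "Realistic, cinematic, soft golden light, light mist over the water",
    ))
```

Keeping each part as a separate argument makes iteration easier: you can change only the aesthetic or the camera perspective between generations and compare results.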

Bulk editing, mobile workflows, and creative production

The July 2025 update also introduces productivity tools. Adobe Firefly can resize multiple images simultaneously, keeping focal points sharp and using generative expansion to fill empty spaces. It can reframe multiple videos to new aspect ratios, crop or color‑grade thousands of images at once, and remove or replace backgrounds across a batch. Firefly Boards (beta) integrates partner models and allows teams to organize images, videos, and documents on an infinite canvas. The Firefly mobile app, released in June 2025, lets you generate images and videos on the go. All creations sync with your Creative Cloud account for seamless transition between devices.

What makes Firefly so useful for designers and filmmakers

Firefly’s generative models accelerate concept exploration. Designers can quickly test compositions, moods, and styles before committing to a photoshoot or set build. Marketers gain near‑instant social content that matches brand aesthetics. Motion fidelity and composition reference shorten previsualisation cycles for filmmakers. However, Firefly is a tool, not a replacement for craft. AI‑generated images and videos still require human oversight to meet professional standards. High‑resolution capture, dynamic range management, and lighting nuance remain the domain of artists and cinematographers. Ethical considerations also matter: users must verify that partner models are appropriate for their projects and respect licensing conditions.

Practical tips to maximize Adobe Firefly’s realism

- Focus your prompts. Start with clear, specific descriptions; refine them iteratively until the output matches your vision.

- Control composition and style. Use reference images, specify shot types, and choose style presets to match your desired look.

- Leverage partner models. Experiment with Veo, Gen‑4, or Flux when you need alternative aesthetics or more dynamic motion.

- Combine AI with manual editing. After generating, refine results in Photoshop, Lightroom, or Premiere Pro; adjust color grading and retouch details.

- Use bulk tools wisely. Batch resizing, reformatting, and color‑grading help maintain consistency across campaigns; use generative expand to fill missing areas.

- Check content credentials. Always review the attached metadata to know which model created your output and ensure commercial safety.

- Stay updated. Adobe regularly adds new models and features; adopting them early can give you a creative edge.

The future of AI‑assisted creativity

Adobe Firefly shows how quickly generative AI is moving toward believable images and videos. Image Model 4 and its Ultra variant push photorealism, while the July 2025 video update improves motion and introduces tools for composition, style, and sound. As partner models expand, creators will gain even more diversity in aesthetics and storytelling. Yet the human element remains essential: designers and filmmakers must guide AI with clear intent, refine outputs, and uphold ethical standards. Mastering Firefly’s latest features now will prepare you for even more sophisticated AI tools on the horizon.

Don’t hesitate to browse WE AND THE COLOR’s AI and Technology categories to stay up to date with the latest news, trends, and updates.

#adobeFirefly #ai #imageGeneration #vectorGeneration #videoGeneration

Kling AI vs Adobe Firefly Comparison: Creative AI Image & Video Generators Go Head-to-Head

All the Features, All the Facts, and Everything You Need to Know about Kling AI and Adobe Firefly in 2025.

Generative video and image tools are reshaping visual communication. In the last two years alone, Adobe Firefly users have created more than 22 billion assets. At the same time, China‑based Kuaishou has rapidly iterated its Kling models, propelling a home‑grown challenger onto the global stage. As creative professionals and marketers weigh their options, the question “Kling AI vs Adobe Firefly?” keeps popping up. This comparison answers it by examining the origins, capabilities, and limitations of each platform and offering guidance for choosing between them.

The Rise of Generative Video

Advances in diffusion transformers, spatiotemporal attention, and multimodal AI have turned science fiction into accessible tools for designers, filmmakers, and hobbyists. The Kling AI vs Adobe Firefly comparison sits within this broader trend. Both tools are built on large generative models trained to translate text or image prompts into moving images, but they differ in scope, integration, and design philosophy. Before diving into details, it helps to understand the generative landscape.

Understanding Kling AI

Evolution of the Kling Models

Kling AI emerged from Kuaishou, one of China’s largest social‑media companies. The first version debuted in June 2024, offering text‑to‑video generation at 1080p resolution and durations of five or ten seconds. Version 1.5 arrived in November 2024 with the ability to generate a video using only a final frame, making it ideal for product shots and static scenes. It also introduced camera‑movement controls in professional mode.

The 1.6 update in December 2024 improved prompt responsiveness and physical realism. Kuaishou added Standard and Professional modes, giving beginners an accessible interface while offering advanced users more control. A key feature was Lip‑Sync—synchronizing mouth movements with audio when faces are present.

Kling 2.0 and 2.1

April 2025 brought Kling 2.0, which extended clip length up to two minutes at 1080p and 30 fps. It introduced direct editing of objects within a scene via text commands and special tools for coloring, reshaping, and expanding shots. Notably, Kuaishou launched an AI Sounds feature that generates realistic audio matching on‑screen action.

Only a month later, Kuaishou released Kling 2.1, focusing on improved motion quality and semantic responsiveness. It offered separate 720p and 1080p modes, allowing users to balance speed and detail. An AI Sounds update in June 2025 added automatic soundscapes like rain or crowd noise. Scenario’s technical guide notes that Kling 2.1 supports both text‑to‑video and image‑to‑video workflows, providing faster generation speeds, improved action control, and consistent character styling. The premium 2.1 Master variant adds advanced 3D motion and support for multiple aspect ratios (help.scenario.com).

Strengths and Innovations

Kling’s reputation stems from its motion quality. Scenario highlights that versions 1.6 and above excel at smooth, natural motion and avoid the jitter common in many models. Kling also shines at character animation, with version 2.1 maintaining facial consistency and emotional expression across a sequence. Its models allow first‑frame conditioning—using an image as the opening frame—and, for 1.6 Pro, last‑frame conditioning to set the closing state (help.scenario.com). Users can specify both start and end frames, enabling smooth transitions or looped animations.

Prompt adherence is another strength: Kling models respond closely to textual instructions and accept negative prompts to guide the output. Resolution options range from 360p to 1080p across versions, and durations of five or ten seconds are supported (longer sequences are possible by stitching outputs). The 2.1 release introduced cost‑effective Standard and Pro tiers and the high‑end Master version with multi‑aspect ratios (help.scenario.com).

Flux AI’s review elaborates on 2.1 Standard and 2.1 Master. The Standard model emphasizes speed and affordability, offering fast rendering, seamless image‑to‑video conversion, ambient audio synthesis, and a maximum duration of ten seconds. The Master model targets professional storytellers; it delivers superior motion with joint‑attention rendering, exceptional scene coherence, enhanced ambient sound, and support for complex prompts. Flux lists key innovations such as a physics‑aware motion engine, multi‑frame reference consistency to avoid distortions, automatic ambient audio generation, and improved lip‑sync (flux-ai.io). Taken together, these advances make Kling AI a powerful tool for narrative‑driven content.

Drawbacks and Considerations

Despite its strengths, Kling AI comes with trade‑offs. The comprehensive guide from Arab AI notes inconsistent output quality in some videos, long wait times for free users, and customer support issues (aiarabai.com). In addition, while Kling’s deep control options allow artists to manipulate start and end frames and control motion paths, the learning curve is steep. New users may find the interface complex compared with more plug‑and‑play tools. Pricing also varies: the free plan offers limited credits, while paid plans start at roughly $79 per year for the Standard tier. The high‑end Master version, available through partners like Flux, costs more credits per second (flux-ai.io).

Visit Kling AI for more info

Adobe Firefly: A Unified Creative Platform

From Image Generator to All‑in‑One Suite

Adobe launched Firefly as an image generator but has quickly expanded it into a unified platform. The April 2025 update integrated image, video, audio, and vector generation tools and offered improved models for ideation and creative control. Firefly is designed for commercial safety; Adobe emphasises that it is trained on licensed or public‑domain content. Brands such as Deloitte and Paramount+ have adopted Firefly to speed up production and personalise campaigns (blog.adobe.com). Integration with Photoshop, Premiere Pro, and other Creative Cloud apps allows users to move seamlessly between concept and final asset.

Adobe has also launched a Firefly mobile app for iOS and Android. It lets users generate images and videos on the go and syncs history across devices, so mobile projects can be continued on desktop later.

Firefly Video Model

The Firefly Video Model, officially launched in 2025, generates clips up to five seconds long and supports resolutions up to 1080p. Users can select aspect ratios (16:9, 9:16, or 1:1) and upload start and end frames to guide the generation. The model delivers significant improvements in photorealism over the beta, enhancing text rendering, landscapes, and transition effects. It allows seamless transitions from text prompts to images to video and offers what Adobe describes as industry‑leading camera controls (blog.adobe.com).

The July 2025 update introduced advanced video controls and composition reference. Creators can upload a reference video, and Firefly will transfer its composition to new content, ensuring visual flow across scenes. Style presets—claymation, anime, line art, and more—let users set a tone with one click. Keyframe cropping simplifies cropping the first and last frames while generating a video that matches the intended format. In addition, Firefly now offers Generate Sound Effects (beta) and Text‑to‑Avatar (beta) features that layer custom audio or generate avatar‑led videos (blog.adobe.com), adding depth to storytelling.

Firefly also integrates third‑party models. The July update added Runway’s Gen‑4 Video and Google Veo 3 with audio to Firefly Boards and Generate Video. Upcoming integrations include Topaz Labs upscalers, Luma AI’s Ray 2, and Pika 2.2. By letting users choose models within a single interface, Adobe positions Firefly as a hub for generative creativity (blog.adobe.com).

Pricing and Credits

Firefly uses a credit‑based system. Creative Cloud subscribers receive a monthly allotment of generative credits; additional credits can be purchased. According to a 2025 comparison of AI video tools, a Firefly Premium plan costs about $9.99 per month and provides 2,000 generative credits. This plan grants unlimited access to the Firefly Video Model. The integration of credits across Photoshop, Illustrator, and other apps makes Firefly cost‑effective for users already embedded in Adobe’s ecosystem.

Advantages and Considerations

Firefly’s biggest selling point is commercial safety. Adobe trains its models on licensed data and attaches content credentials to each generation, giving businesses confidence in legal reuse. Firefly’s interface is intuitive; the web app and mobile app present clear controls, and the ability to export directly into Photoshop and Express simplifies workflows. The platform encourages experimentation with style presets and composition references, making it ideal for quick ideation and marketing campaigns.

Yet Firefly has limitations. Video clips max out at five seconds, which may restrict storyboarding or long‑form narratives. The platform currently offers fewer fine‑tuning options than Kling; complex scenes may require multiple iterations. Pricing is straightforward but credit‑based, so heavy users might exhaust credits quickly. Some users also note that Firefly still struggles with scenes involving complex human interactions and may cap resolution for print workflows (createandgrow.com). Despite these caveats, Firefly’s integration and safety make it a strong choice for designers and marketers.

Visit Adobe Firefly for more info

Kling AI vs Adobe Firefly: Feature‑by‑Feature Comparison

Creative Control and Customization

When comparing Kling AI and Adobe Firefly, creative control is a key differentiator. Kling offers granular control through first‑frame and last‑frame conditioning, motion brushes (introduced in earlier versions), and the ability to upload up to four reference images via its Elements feature. The 2.1 release supports multi‑frame reference consistency to maintain visual coherence across frames. Users can adjust prompt strength, negative prompts, and camera movements to fine‑tune outputs. These tools make Kling ideal for animators, filmmakers, and game designers who need precise narrative control.

Adobe Firefly prioritizes ease of use. While it allows uploading start and end frames and provides camera controls, the options are designed for rapid ideation rather than granular animation. Firefly’s composition reference tool replicates the structure of a reference video, and style presets offer quick aesthetic shifts. However, it lacks features like negative prompts and multi‑reference composition found in Kling. For users seeking speed and simplicity, Firefly’s controls may be sufficient; those seeking deeper control might prefer Kling.

Video Quality and Realism

Both models strive for realism, but they differ in approach. Kling 2.1 employs a physics‑aware motion engine and 3D spatiotemporal joint attention to simulate gravity, momentum, and fluid dynamics. Its strength lies in consistent character animation and dynamic facial expressions. The Master model adds joint‑attention passes for exceptional motion and scene coherence (flux-ai.io). These capabilities make Kling well‑suited for cinematic storytelling, 3D sequences, and action scenes.

Adobe’s Firefly Video Model focuses on photorealism and text rendering. The latest release improved motion fidelity, producing smoother transitions and lifelike accuracy in landscapes, animals, and atmospheric elements. Support for 1080p resolution and multiple aspect ratios ensures crisp visuals. Firefly’s style presets allow for creative diversity, but the five‑second limit may restrict continuous storytelling (blog.adobe.com). In general, Firefly excels at generating polished clips for marketing or social posts, while Kling delivers more complex motion and longer durations.

Ease of Use and Workflow Integration

For users embedded in the Creative Cloud ecosystem, Firefly offers seamless integration. It connects directly to Photoshop, Illustrator, and Premiere Pro, letting you export assets or continue projects without switching platforms. The Firefly mobile app enables generation on the go. Firefly’s interface is intuitive, with clear options for aspect ratios, camera angles, and style presets (blog.adobe.com). Its content credentials ensure transparency and legal compliance.

Kling AI requires more technical familiarity. While the Standard mode simplifies some processes, the Pro and Master modes demand an understanding of prompt engineering, negative prompts, and reference framing. The platform functions as a full creator environment, offering lip‑sync, motion brushes, and multi‑reference image composition. Such flexibility is powerful but may overwhelm casual users. Users have also reported long wait times and occasional quality fluctuations on Kling’s site.

Pricing and Accessibility

Pricing influences the Kling AI vs Adobe Firefly choice. Firefly uses a credit system integrated into Creative Cloud subscriptions. For roughly $9.99 per month, users get 2,000 credits, unlimited video model access, and the ability to work across Adobe apps. The credit system suits users who already pay for Creative Cloud.

Kling AI offers a free tier with limited credits, but users often face long waits and watermark restrictions. The Standard plan costs about $79.20 per year and includes 660 credits per month. A more expensive Pro tier extends credit limits and unlocks higher‑quality modes. Through partners like Flux AI, the 2.1 Master model costs 1,000 credits per five seconds (flux-ai.io). For those producing high volumes of content, Firefly’s unlimited premium access may be cheaper; for occasional high‑quality sequences, Kling might offer better value.
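The pricing figures quoted in this comparison can be checked with a little arithmetic. The sketch below uses only those mid-2025 numbers, which change often, so treat the constants as placeholders. Two assumptions are labeled in the code: Flux AI credits for the Master model are a separate currency from Kling’s own credits, and longer Master clips are assumed to be billed in whole five-second blocks.

```python
import math

# Figures quoted in this article (mid-2025 snapshots); update them before relying on the results.
FIREFLY_MONTHLY_USD = 9.99
KLING_STANDARD_YEARLY_USD = 79.20
KLING_STANDARD_CREDITS_PER_MONTH = 660
# Assumption: these are Flux AI credits, not interchangeable with Kling's own credits.
FLUX_MASTER_CREDITS_PER_5_SECONDS = 1000


def firefly_yearly_usd() -> float:
    """Annual cost of the Firefly plan at the quoted monthly price."""
    return round(FIREFLY_MONTHLY_USD * 12, 2)


def kling_standard_monthly_usd() -> float:
    """Monthly equivalent of the Kling Standard annual price."""
    return round(KLING_STANDARD_YEARLY_USD / 12, 2)


def kling_standard_usd_per_credit() -> float:
    """Dollar cost of one Kling Standard credit over a full year."""
    return KLING_STANDARD_YEARLY_USD / (KLING_STANDARD_CREDITS_PER_MONTH * 12)


def master_clip_flux_credits(seconds: float) -> int:
    """Flux credits for a Kling 2.1 Master clip.

    Assumption: billing rounds up to whole five-second blocks.
    """
    if seconds <= 0:
        raise ValueError("clip length must be positive")
    return math.ceil(seconds / 5) * FLUX_MASTER_CREDITS_PER_5_SECONDS


if __name__ == "__main__":
    print(f"Firefly per year:         ${firefly_yearly_usd():.2f}")
    print(f"Kling Standard per month: ${kling_standard_monthly_usd():.2f}")
    print(f"Kling USD per credit:     ${kling_standard_usd_per_credit():.4f}")
    print(f"10 s Master clip:         {master_clip_flux_credits(10)} Flux credits")
```

At these rates, Kling Standard works out to about $6.60 per month and one cent per Kling credit, against $119.88 per year for the Firefly plan, while a ten-second Master clip costs 2,000 Flux credits.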

Use Cases and Target Users

Designers and marketers may gravitate toward Firefly. Its ease of use, commercial safety, integrated sound effects, and quick generation make it ideal for social campaigns, ideation sessions, and rapid prototyping. The integration with Creative Cloud suits those who already rely on Adobe software. Firefly’s composition reference and style presets aid mood‑board development and visual brainstorming.

Filmmakers, animators, and game developers might prefer Kling. The platform’s frame control, multi‑reference capability, and physics‑aware engine enable complex narratives and dynamic camera moves. Version 2.1’s support for multiple outputs per prompt and AI‑powered prompt generation speeds up iteration. The ability to generate longer clips in Kling 2.0 (up to two minutes) also appeals to creators who need longer sequences. However, the steep learning curve and wait times should be considered.

Beyond the Feature Sheet: Critical Perspectives

Comparing Kling AI and Adobe Firefly is not just about specifications. It raises questions about creative control, safety, and the future of AI‑assisted art. Who owns the output? Adobe’s approach to commercial safety, training on licensed data and attaching content credentials, offers reassurance. Kuaishou has not been as transparent about training data, raising potential licensing concerns. How much control do you need? Kling’s advanced tools empower skilled animators, but novices may struggle. Firefly lowers the barrier to entry but may limit advanced experimentation.

Where does AI fit in the creative process? Both platforms are best used as collaborators rather than replacement tools. They can generate drafts, mood boards, or rough edits, but human judgment, storytelling, and editing remain essential. Artists should treat these tools as part of a larger workflow, combining AI‑generated footage with real‑world footage and manual edits.

What about ethical and cultural considerations? Kling’s origin in China and Firefly’s base in the U.S. highlight differing regulatory environments and data‑use policies. Users must consider not just technical capabilities but also ethical alignment with their practice.

Which Tool Should You Choose?

There is no universal winner in the Kling AI vs Adobe Firefly debate. The choice depends on your needs and context. Ask yourself:

- Do you need maximum creative control or quick results? Kling offers granular control with multi‑frame references and physics‑aware motion, while Firefly delivers rapid, polished clips with minimal setup.

- Are you already invested in Adobe’s ecosystem? If you use Photoshop, Illustrator, or Premiere, Firefly integrates seamlessly. If not, Kling’s standalone nature may suit you better.

- How long should your clips be? Firefly caps video length at five seconds; Kling 2.0 can generate up to two‑minute scenes. If storyboarding or longer shots are essential, Kling is the better fit of the two.

- What about budget? Firefly’s premium plan offers unlimited video generation for about $9.99 per month. Kling’s Standard plan is cheaper annually but charges more credits for high‑end output; Master mode can be costly.

In many cases, using both tools strategically may be wise—Firefly for rapid ideation and social content, Kling for polished narrative sequences. The generative AI landscape is evolving quickly, so staying flexible will help you adapt as models improve.

The Takeaway

Generative video is still in its early stages, but the Kling AI vs Adobe Firefly matchup encapsulates the field’s diversity. Kuaishou’s Kling pushes boundaries with physics‑aware motion, multi‑reference control, and immersive ambient audio. Adobe’s Firefly prioritizes safety, integration, and user friendliness, offering robust image and video tools within a familiar ecosystem. Ultimately, both platforms offer remarkable capabilities that can augment human creativity. By understanding their differences and aligning them with your needs, you can harness AI as a powerful creative partner, ensuring your work remains original, inspired, and ethically grounded.

Explore both tools to see which works best for your creative workflow.

Visit the Kling AI website

Visit the Adobe Firefly website

Explore WE AND THE COLOR’s AI section for insights into today’s most exciting tech trends.

#adobe #adobeFirefly #ai #AIGenerator #AIVideo #imageGeneration #KlingAI #videoGeneration

The Register: Meet President Willian H. Brusen from the great state of Onegon. “After seeing some complaints about GPT-5 hallucinating in infographics on social media, we asked the LLM to ‘generate a map of the USA with each state named.’ It responded by giving us a drawing that has the sizes and shapes of the states correct, but has many of the names misspelled or made up.”

10 Powerful Ways to Use Kling AI for Your Creative Business in 2025

The creative economy of 2025 demands unparalleled speed, originality, and adaptability. Generative AI has moved from a novel concept to an essential, practical tool that is actively reshaping professional workflows. At the forefront is Kling AI, a sophisticated video generation platform that is becoming indispensable for creative entrepreneurs, agencies, and brands. This platform lets you produce cinematic-quality videos from simple text prompts, quickly turning abstract ideas into compelling visual narratives.

The true potential of Kling AI for your creative business, however, lies beyond the initial novelty, in its strategic application within real-world, professional workflows. This article explores ten practical ways creative professionals are using Kling AI right now to innovate, communicate, and scale their businesses.

1. Create High-Impact Social Media Content with Kling AI

Visual storytelling dominates social media. Kling AI enables creators and brands to produce short-form videos with a cinematic flair once reserved for large production crews. This capability is not merely cost-effective; it can genuinely transform a content strategy.

From Text Prompt to Viral Reel

You can generate eye-catching reels, promotional teasers, or animated announcements from a single concept. For visual artists, this means turning abstract thoughts into captivating short mood films. For brands, it offers a powerful new way to unveil product launches or campaign themes with visual authority. In short, the platform helps you make AI videos for social media that truly stand out.

2. Use Kling AI to Design Stunning Client Pitch Videos

First impressions remain critically important in creative business. This is especially true for pitch decks and proposal presentations. Kling AI allows designers and entrepreneurs to build custom visuals that articulate a concept or mood more effectively than words ever could.

Elevating the Pitch Deck

Instead of a flat PDF, imagine opening your pitch with a dynamic 15-second cinematic clip that encapsulates your vision. This approach does more than impress; it forges an immediate emotional connection. It also demonstrates your creative ambition and technological fluency, setting you apart from the competition.

3. Produce Animated Explainers Without Animation Skills

Previously, creating animated explainer videos required specialized skills and, often, a dedicated team. Kling AI democratizes this entire process. It gives independent creators and small studios the ability to produce short, engaging animated explainers that look and feel completely professional.

Simplifying Complexity Through Motion

From UI walkthroughs to product showcases, Kling AI helps transform static ideas into fluid, understandable motion. If you are wondering how to make clear, engaging explainer videos with AI, this tool offers a practical answer, and well-paced motion can dramatically improve how your audience understands complex information.

4. Elevate Podcast Production with Visual Episodes

The podcasting landscape is increasingly visual. Whether you post full episodes on YouTube or share teaser clips on Instagram, adding a visual layer can boost your reach and retention. Kling AI makes it easy to create dynamic video snippets from podcast scripts or key soundbites.

Visuals for Your Voice

You can generate ambient scenes that reflect the tone of the discussion. You can also animate key quotes or create intros that reflect your unique brand identity. This gives small podcasts the polish of big-budget productions and makes AI-generated video teasers a practical option.

5. Transform Case Studies into Mini-Documentaries with Kling AI for Agencies

A static case study might sit unread in an inbox. In contrast, a 30-second AI-generated video can turn it into a shareable narrative. Use Kling AI to summarize client projects, milestones, or testimonials with animated visuals, voiceover, and the right mood. This makes Kling AI a key tool for agencies.

Making Your Portfolio Memorable

This narrative approach makes your portfolio work more emotional and memorable. It is perfect for agencies, freelancers, and design studios that want to differentiate themselves in a crowded market. This strategy turns proof of work into a compelling story.

6. Generate Visual Concepts for Creative Direction

Creative directors often need to visualize concepts for campaigns or installations. Kling AI can generate visual treatments directly from creative briefs. It effectively serves as an inspiration board in motion. This is a primary use case for Kling AI in creative direction.

From Brief to Moving Mood Board

By translating written ideas into moving images, you accelerate feedback loops and decision-making. It is also an elegant way to present mood and tone to clients or collaborators. This ensures everyone shares the same vision from the project’s start.

7. Create Mood Films and Artistic Shorts for Portfolios with Kling AI for Artists

Artists, illustrators, and designers can now create video art without shooting a single frame. Kling AI allows you to generate poetic or surreal visuals for your portfolio. For artists, this makes Kling AI more than a utility; it becomes a new medium.

AI as an Artistic Medium

This use case positions Kling AI as a tool of genuine artistic expression. It is especially relevant for experimental creatives and storytellers looking to explore narrative visuals. You can now ask how to create visual poems with AI and find a practical answer.

8. Bring Storyboards and Scripts to Life Instantly

Are you working on a film pitch, music video, or ad concept? Kling AI lets you visualize the script or storyboard in seconds. This helps directors and producers pre-visualize ideas without waiting for hand-drawn frames or 3D renders.

Accelerating Pre-Production

This function is a massive time-saver. It helps secure buy-in from stakeholders very early in the process. Because the visuals can be regenerated from the same prompt, iteration becomes easy and efficient. Storyboard video generation with AI is now a practical reality.

9. Offer New Services as an AI Creative Studio

For creative agencies, Kling AI opens new service possibilities. You can offer AI-generated brand films, vision videos, and launch animations. This move positions your studio as an essential innovation partner for your clients.

Monetize AI Tools in the Creative Industry

This is an appealing service for startups or e-commerce brands looking to stand out. Kling AI makes it feasible to deliver high-concept motion content, even on tight timelines. For creative entrepreneurs, it opens a pathway to cutting-edge services and new revenue.

10. Enhance Visual Identity Projects with Motion

Visual identities traditionally stop at logos and colors. However, brands now expect motion to be part of their core DNA. Kling AI allows designers to explore how a brand might move without needing motion design software.

Giving Brands a Dynamic Personality

You can use prompts to create scenes reflecting brand values and mood. You can add motion to identity guidelines. Ultimately, this shows how a brand can breathe and live in motion, not just in static form. This is a key part of brand identity motion design with AI.

Why Kling AI Is the Creative Companion of 2025

Kling AI represents a fundamental shift for creators who want to move faster and tell better stories. It helps you compete on a global stage without increasing overhead. The platform allows you to reimagine what is possible with limited resources but unlimited imagination.

In 2025, creative businesses that embrace AI tools like Kling AI will not just survive; they will stand out. Whether you are a solo designer, a full-service agency, or a media brand, at least one of these use cases can elevate your work. Now is the time to experiment. Start with one strategy. Build from there. The future of visual storytelling is already here, and Kling AI is helping to shape it.

#ai #animation #audioGeneration #imageGeneration #KlingAI #videoGeneration #visuals

What is the advantage of storing pixel coordinates as floats in [0..1] instead of ints in [0..length/width]?
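The question above is about normalized coordinates. One commonly cited advantage is resolution independence: a position stored in [0, 1] stays valid when the image is resized, which is also why graphics APIs such as OpenGL address textures with normalized coordinates. The minimal Python sketch below shows the round trip. The pixel-centre convention (adding 0.5) and the helper names `to_normalized` and `to_pixel` are our own assumptions for illustration, not something the question specifies.

```python
from typing import Tuple


def to_normalized(x_px: int, y_px: int, width: int, height: int) -> Tuple[float, float]:
    """Map integer pixel indices to [0, 1], measured at pixel centres (assumed convention)."""
    return (x_px + 0.5) / width, (y_px + 0.5) / height


def to_pixel(u: float, v: float, width: int, height: int) -> Tuple[int, int]:
    """Map normalized coordinates back to integer pixel indices for any resolution."""
    # Clamp so that u == 1.0 or v == 1.0 still lands on the last pixel.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return x, y


# A point marked on a 640x480 preview...
u, v = to_normalized(320, 240, 640, 480)
# ...lands on the corresponding pixel of a 1920x1440 original, with no extra bookkeeping.
print(to_pixel(u, v, 1920, 1440))  # prints (961, 721)
```

The trade-off is that float coordinates must be mapped back to integers, with a chosen rounding rule, whenever you index actual pixels; integer coordinates avoid that step but are tied to one resolution.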

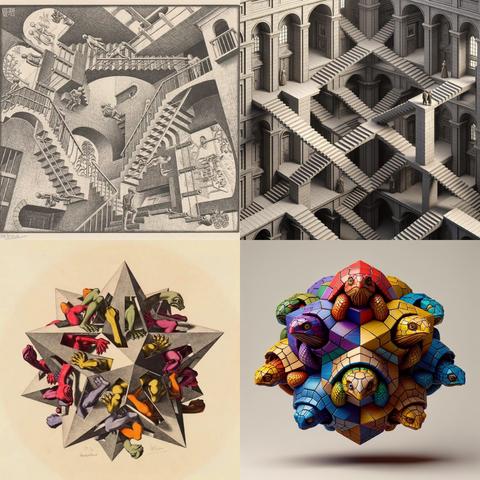

Notes on Image Generation with GPT-4.1

As regular readers may know, I have no compunction about using AI tools for reviewing my writing and generating occasional illustrations – I do it as a force multiplier, not as a r(...)

#ai #art #classics #comics #dalle #gpt4.1 #imagegeneration #patterns #stablediffusion #styletransfer

"An analytic theory of creativity in convolutional diffusion models"

https://arxiv.org/abs/2412.20292

Image generation results predicted using analytical computations that required no training.


We obtain an analytic, interpretable and predictive theory of creativity in convolutional diffusion models. Indeed, score-matching diffusion models can generate highly original images that lie far from their training data. However, optimal score-matching theory suggests that these models should only be able to produce memorized training examples. To reconcile this theory-experiment gap, we identify two simple inductive biases, locality and equivariance, that: (1) induce a form of combinatorial creativity by preventing optimal score-matching; (2) result in fully analytic, completely mechanistically interpretable, local score (LS) and equivariant local score (ELS) machines that, (3) after calibrating a single time-dependent hyperparameter can quantitatively predict the outputs of trained convolution-only diffusion models (like ResNets and UNets) with high accuracy (median $r^2$ of $0.95, 0.94, 0.94, 0.96$ for our top model on CIFAR10, FashionMNIST, MNIST, and CelebA). Our model reveals a locally consistent patch mosaic mechanism of creativity, in which diffusion models create exponentially many novel images by mixing and matching different local training set patches at different scales and image locations. Our theory also partially predicts the outputs of pre-trained self-attention enabled UNets (median $r^2 \sim 0.77$ on CIFAR10), revealing an intriguing role for attention in carving out semantic coherence from local patch mosaics.
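To make the "patch mosaic" idea more concrete, here is a minimal, simplified Python sketch of a local, equivariant denoiser in the spirit of the ELS machine described in the abstract. It is not the authors' code. All of the following are our assumptions: single-channel images stored as nested lists, variance-preserving noise (noisy = sqrt(alpha_bar) * clean + sqrt(1 - alpha_bar) * noise), a fixed square patch of half-width `half`, and zero padding at image borders. The paper's calibrated time-dependent hyperparameter is not modelled here, and the helper names `extract_patch` and `els_denoise` are hypothetical.

```python
import math
from typing import List, Tuple

Image = List[List[float]]


def extract_patch(img: Image, r: int, c: int, half: int) -> List[float]:
    """Flattened (2*half+1)^2 patch centred at (r, c); zero-padded outside the image."""
    h, w = len(img), len(img[0])
    patch = []
    for dr in range(-half, half + 1):
        for dc in range(-half, half + 1):
            rr, cc = r + dr, c + dc
            patch.append(img[rr][cc] if 0 <= rr < h and 0 <= cc < w else 0.0)
    return patch


def els_denoise(noisy: Image, train: List[Image], alpha_bar: float, half: int = 1) -> Image:
    """Hypothetical sketch: estimate each clean pixel from its local noisy patch only.

    Locality: only the (2*half+1)^2 neighbourhood of a pixel is used.
    Equivariance: training patches are pooled from every position of every
    training image, so a pixel may borrow a patch from anywhere in the data.
    Assumes 0 < alpha_bar < 1 and variance-preserving noise.
    """
    # Bank of (clean patch, clean centre pixel) pairs from all images and locations.
    bank: List[Tuple[List[float], float]] = []
    for img in train:
        for r in range(len(img)):
            for c in range(len(img[0])):
                bank.append((extract_patch(img, r, c, half), img[r][c]))

    scale, var = math.sqrt(alpha_bar), 1.0 - alpha_bar
    h, w = len(noisy), len(noisy[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            q = extract_patch(noisy, r, c, half)
            # Gaussian log-likelihood (up to a constant) of the noisy patch given each clean patch.
            logits = [
                -sum((qi - scale * pi) ** 2 for qi, pi in zip(q, p)) / (2.0 * var)
                for p, _ in bank
            ]
            m = max(logits)  # subtract the max for a numerically stable softmax
            weights = [math.exp(s - m) for s in logits]
            total = sum(weights)
            # Posterior-weighted average of the training patches' centre pixels.
            out[r][c] = sum(wt * centre for wt, (_, centre) in zip(weights, bank)) / total
    return out
```

Because each output pixel is assembled from whichever training patches best explain its own neighbourhood, adjacent pixels can draw on different images and locations, which is a toy version of the mixing and matching the abstract describes. An unconstrained denoiser that compared whole images instead of patches would instead pull the estimate toward complete training examples, the memorization the abstract says optimal score-matching theory predicts.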