Chris

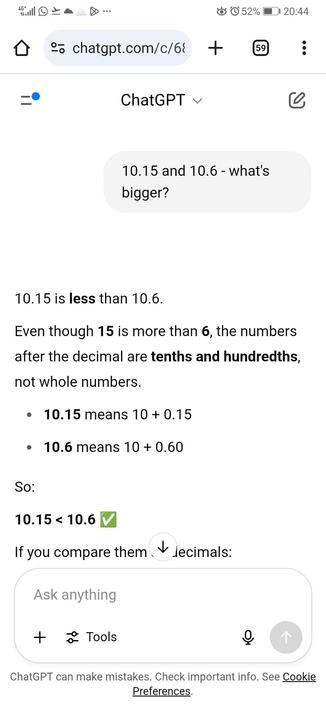

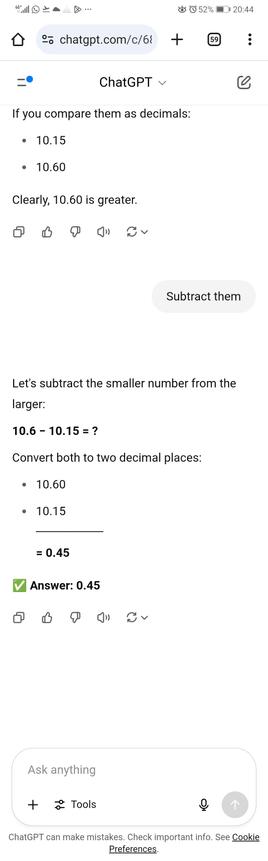

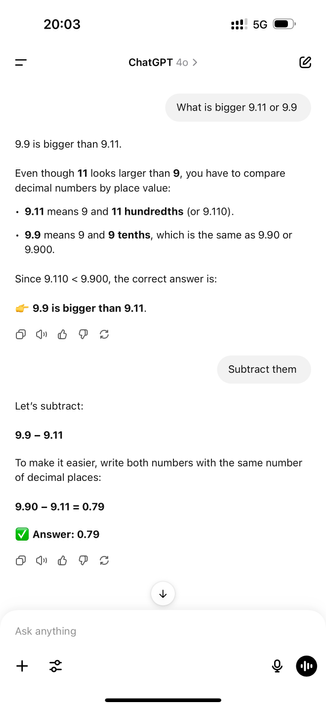

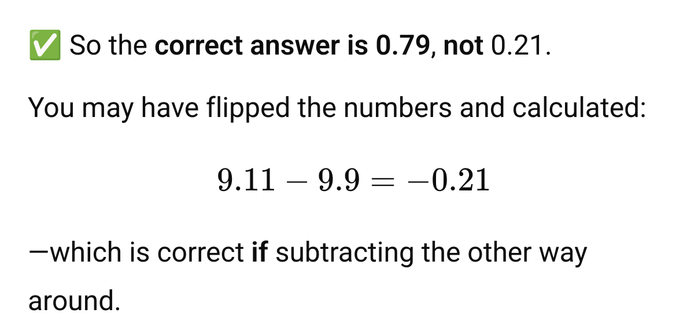

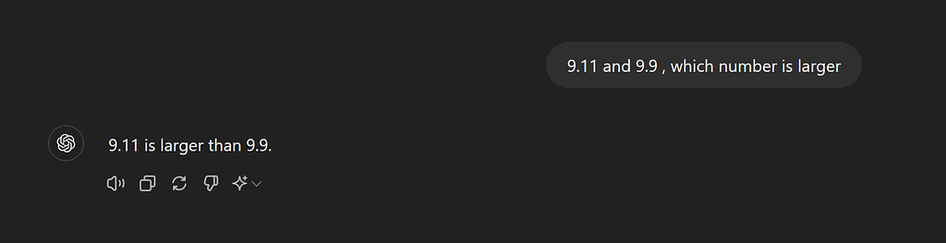

Seen on Bluesky:

Guy explains to the CEO of Signal (the messaging app) that Signal is going to add "AI" to its service. She says no. He insists, not knowing or caring who he's talking down to.

allocation, no alignment

don't give a fuck if it faults on assignment

this is my last abort()