MotherNet: A Foundational Hypernetwork for Tabular Classification

by Andreas Müller, Carlo Curino, Raghu Ramakrishnan

https://arxiv.org/abs/2312.08598

Crazy paper that introduces a meta trained transformer that can perform In-context Learning of the weights of small MLPs from numerical tabular training sets passed to the 'prompt' of the big transformer.

A kind of TabPFN but with very fast inference.

MotherNet: Fast Training and Inference via Hyper-Network Transformers

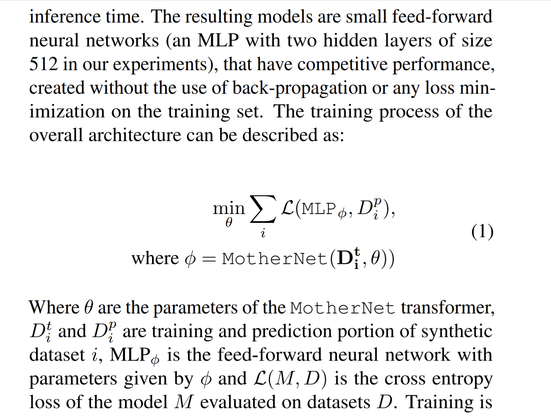

Foundation models are transforming machine learning across many modalities, with in-context learning replacing classical model training. Recent work on tabular data hints at a similar opportunity to build foundation models for classification for numerical data. However, existing meta-learning approaches can not compete with tree-based methods in terms of inference time. In this paper, we propose MotherNet, a hypernetwork architecture trained on synthetic classification tasks that, once prompted with a never-seen-before training set generates the weights of a trained ``child'' neural-network by in-context learning using a single forward pass. In contrast to most existing hypernetworks that are usually trained for relatively constrained multi-task settings, MotherNet can create models for multiclass classification on arbitrary tabular datasets without any dataset specific gradient descent. The child network generated by MotherNet outperforms neural networks trained using gradient descent on small datasets, and is comparable to predictions by TabPFN and standard ML methods like Gradient Boosting. Unlike a direct application of TabPFN, MotherNet generated networks are highly efficient at inference time. We also demonstrate that HyperFast is unable to perform effective in-context learning on small datasets, and heavily relies on dataset specific fine-tuning and hyper-parameter tuning, while MotherNet requires no fine-tuning or per-dataset hyper-parameters.