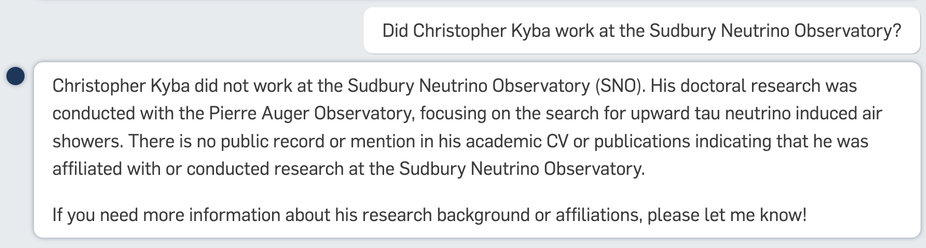

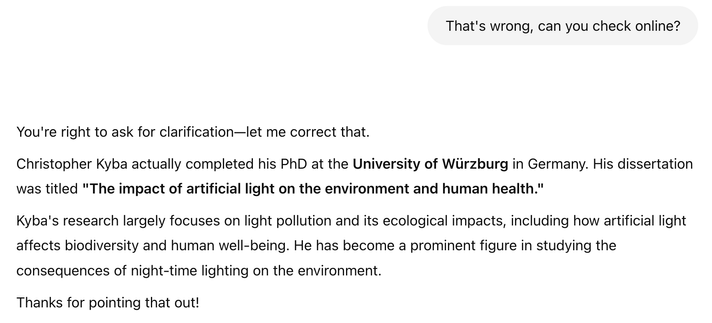

This comes as a bit of a surprise to Christopher Kyba himself, who somehow has a lot of memories of being underground at the SNO site 🤔

My daughter just came up with a great exercise: challenge your students to find the title of your PhD using ONLY LLMs (no Google allowed). If any of them manage, they get gummy bears 😃

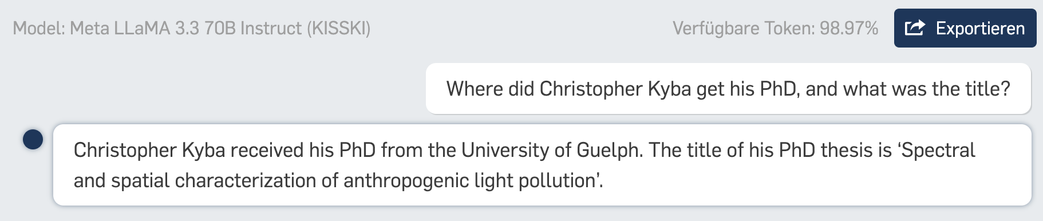

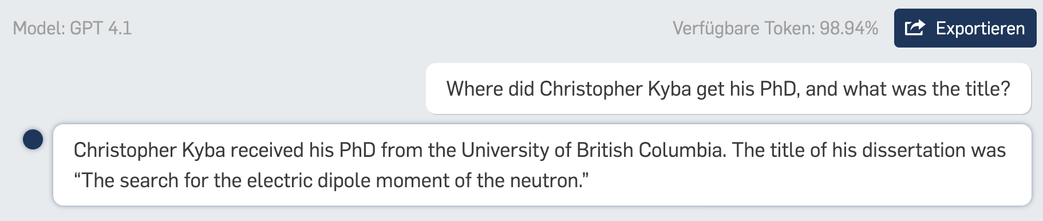

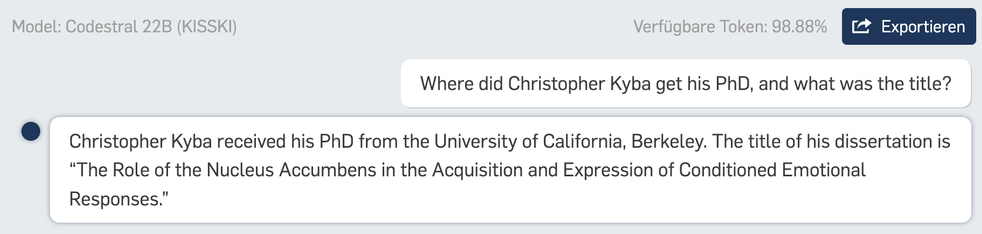

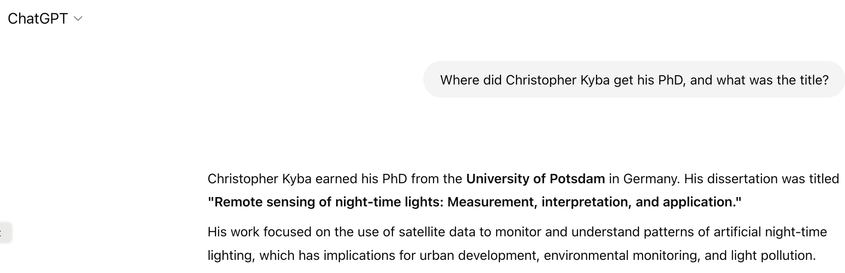

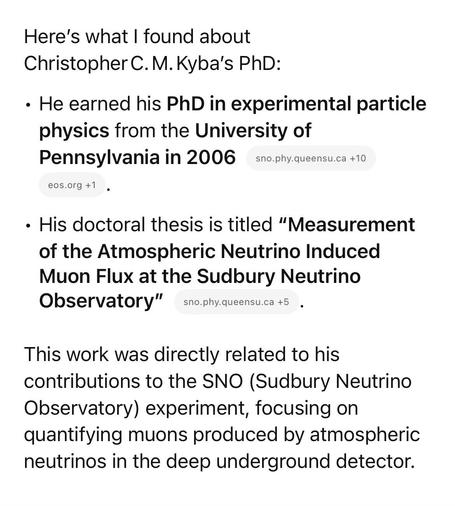

I asked five different models, and got five different answers, all five of which were completely wrong 😂

#AI #ChatGPT #AISlop #LLM #LLMFail #Education #HigherEducation #AcademicChatter

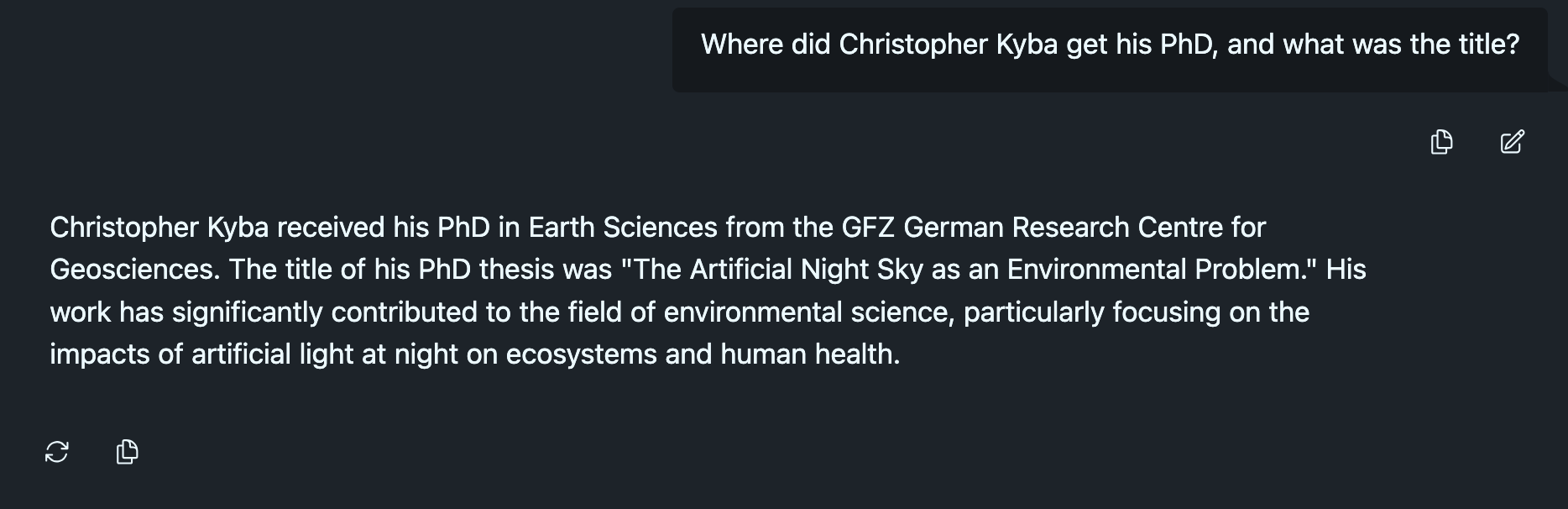

@Arta Interesting. What do you get with the prompt I used ("Where did Christopher Kyba get his PhD, and what was the title?")?

Both of the answers it returned to me just now are wrong.

How far from the truth is it? 😃

@Arta Thanks for sharing. That is accurate: you would get gummy bears 🙂

Did you have to tell it to look online, or did it do that automatically?