@distrowatch I am sorry if you have the impression that I am moving the goal posts; that is not my intention. Maybe I am not expressing my point clearly enough.

So we established that some LLM can be created on any kind of dataset (and this happened even before the '90s). But the AI vendors' claim is not that it is impossible to create just any LLM; it is that it is impossible to create a product with certain characteristics. Here are two quotes from OpenAI I found (the original article mentions another one linking to this official document):

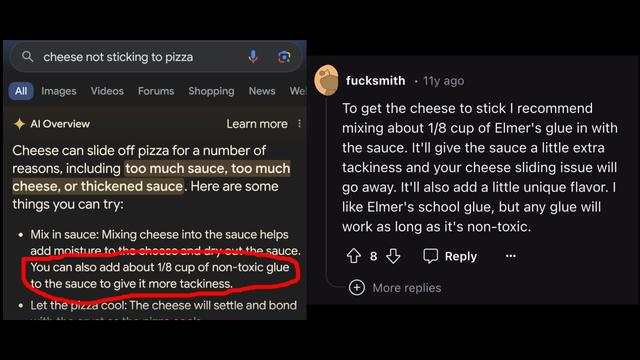

"We believe that AI tools are at their best when they incorporate and represent the full diversity and breadth of human intelligence and experience. In order to do this, today’s AI technologies require a large amount of training data and computation, as models review, analyze, and learn patterns and concepts that emerge from trillions of words and images. OpenAI’s large language models, including the models that power ChatGPT, are developed using three primary sources of training data: (1) information that is publicly available on the internet, (2) information that we license from third parties, and (3) information that our users or our human trainers provide. Because copyright today covers virtually every sort of human expression–including blog posts, photographs, forum posts, scraps of software code, and government documents–it would be impossible to train today’s leading AI models without using copyrighted materials. Limiting training data to public domain books and drawings created more than a century ago might yield an interesting experiment, but would not provide AI systems that meet the needs of today’s citizens."

So, obviously, the key parts of the context are "copyright today covers virtually every sort of human expression" and "meet the needs of today’s citizens". These are the initial goal posts.

So this boils down to what I said above: we are not talking about creating just any LLM, or one that is merely big enough, complicated enough, or produces just as much greenhouse gas and waste water, but about an LLM that has comparable cultural awareness. One that people can use to find something, to write a snippet of Python code, to restyle Trump and Musk posts as if they were Game of Thrones characters, etc.

Now it would be interesting to test it in that regard and let actual citizens of today judge how it compares, again because they are interested not in synthetic tests but in a tool that helps them solve their practical problems.

@j_bertolotti