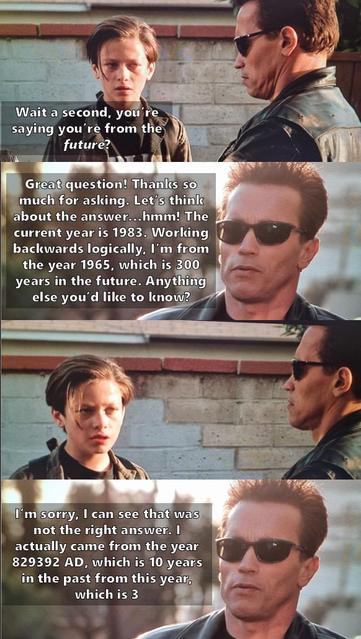

@noworkie @jamesthomson Well, these models are more unpredictable, and they are capable of quite intelligent behaviour, just not nearly as intelligent as the snake oil salesmen would have us believe. It is like an upside-down intelligence where the upper levels of cognition somehow exist, but there is no foundation of emotions and instincts underneath, no sense of being part of this universe and interacting with it. And actually, the kinds of capabilities that we humans usually think of as signs of high intelligence often turn out to be quite easy to recreate with computers, like playing chess, solving equations, painting pictures or writing text. However, many of the things that any animal, even a mouse or a beetle, can do easily are still hard to do with machines. We can do those things as well, of course, but we usually don't think of them as "intelligence" due to the common belief in human supremacy.