@zackwhittaker The "A" could also stand for "authoritarian".

Every despot alive today is dreaming of a pliant workforce without the potential of a *moral compass*.

AI + robots would provide them 'security' and production without pesky humans ever getting in their way.

🤣🤣🤣🤣💯

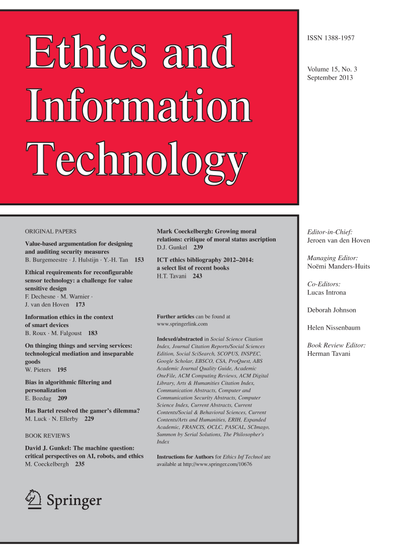

ChatGPT is bullshit - Ethics and Information Technology

Recently, there has been considerable interest in large language models: machine learning systems which produce human-like text and dialogue. Applications of these systems have been plagued by persistent inaccuracies in their output; these are often called “AI hallucinations”. We argue that these falsehoods, and the overall activity of large language models, is better understood as bullshit in the sense explored by Frankfurt (On Bullshit, Princeton, 2005): the models are in an important way indifferent to the truth of their outputs. We distinguish two ways in which the models can be said to be bullshitters, and argue that they clearly meet at least one of these definitions. We further argue that describing AI misrepresentations as bullshit is both a more useful and more accurate way of predicting and discussing the behaviour of these systems.

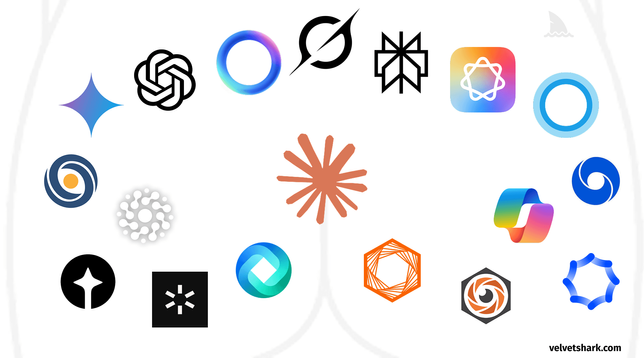

Some of those are just circles. If a circle looks like a butthole to you, then that's on you.

But the Walmart logo, that one's definitely a butthole!

They are showing us their true form.

@zackwhittaker Because all these AI companies are just making sh*t!! It's not AI, it's just an LLM, and yet every assistant is unusable since you can't ask questions without it being treated like a political statement.

"What is the net worth of politician X?" And the answer is not "let's search the internet" but a response about how political questions cannot be answered. Which is dumb and sh*tty.

@zackwhittaker Does this mean we will see the return of goatse.cx as an AI startup? They do, after all, have the original...

And they're about as sensible as any of the others.

If anybody has a butthole that looks like any of those, I urge them to see a physician ASAP.

If you're into interpreting imagery, you should see Georgia O'Keeffe's paintings of lilies and other flowers!

"I'm surrounded by assholes!" - Rick Moranis, Spaceballs