Online workshop this Friday, 2pm CET: Wekinator – Gesture Control with Machine Learning

"Explore the world of gestural interfaces in this online course on using Wekinator to harness the power of machine learning for performance and installations."

Info and signup here: https://thenodeinstitute.org/courses/ws24-tk-04-wekinator-gesture-control-for-performance-with-machine-learning/

Run by @sebescudie

Hosted by @thenodeinstitute

#wekinator #ml #machinelearning #creativecoding

WS24 – TK – 05 – Wekinator – Gesture Control for Performance with Machine Learning

Wekinator – Gesture Control for Performance with Machine Learning. February 7th 2025, 2-5 PM, Berlin Time. In English Language / Online via Zoom or as Recording. 1 session on Friday. About Wekinator …

Body Painting (Gesture Drawing App in vvvv)

https://youtu.be/P6szJyo55go

"In this project I made a simple drawing application in vvvv, that is controlled using your hands and fingers through a Leap Motion Sensor."

#vvvv #visualprogramming #creativecoding #wekinator #machinelearning #ml


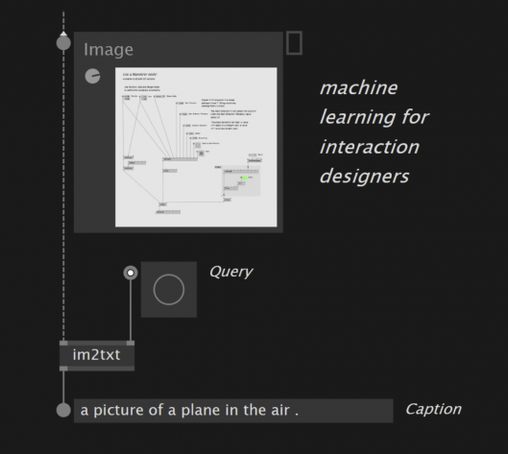

Machine learning for interaction designers - The NODE Institute

As the name of the webinar suggests, we'll talk about machine learning through the prism of interactive installations.

New in the work-in-progress section:

VL.Wekinator by @sebescudie

https://discourse.vvvv.org/t/vl-wekinator/18991

"The Wekinator allows users to build new interactive systems by demonstrating human actions and computer responses, instead of writing programming code."

#vvvv #visualprogramming #dotnet #creativecoding #ml #ai #machinelearning #wekinator

VL.Wekinator

bonjour everyone, there’s been some work lately creating a VL.Wekinator plugin that allows you to easily interact with the aforementioned software. don’t know what Wekinator is? read on!

what the wekinator

wekinator is a standalone software that uses machine learning to solve the following problem: given input X, I want to get output Y. X can be any input that can be expressed as a series of floats, and the output can either be:

- discrete categories, like “Pose 1”, “Pose 2”, …
- continuous...
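
To make "any input that can be expressed as a series of floats" a bit more concrete, here is a minimal Python sketch of what sending such an input to Wekinator could look like. Wekinator talks OSC over UDP. The port `6448` and the address `/wek/inputs` used below are assumed to match Wekinator's default input settings, so check them against your own project. The function names `build_osc_message` and `send_inputs` are made up for this illustration and are not part of VL.Wekinator or Wekinator itself.

```python
import socket
import struct
from typing import Sequence


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def build_osc_message(address: str, values: Sequence[float]) -> bytes:
    """Encode one OSC message carrying only 32-bit float arguments."""
    type_tags = "," + "f" * len(values)          # one 'f' tag per float
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian floats
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def send_inputs(values: Sequence[float],
                host: str = "127.0.0.1",
                port: int = 6448,                # assumed Wekinator input port
                address: str = "/wek/inputs"     # assumed Wekinator input address
                ) -> bytes:
    """Send one input vector X to a running Wekinator instance over UDP."""
    packet = build_osc_message(address, values)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return packet
```

Calling `send_inputs([0.5, 1.0])` would send a two-float input vector, for instance a normalized hand position. The number of floats has to match the number of inputs configured in the Wekinator project.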