🚀 A practical data efficiency tip for software developers & tech leaders:

✅ Treat your data like you treat your code!

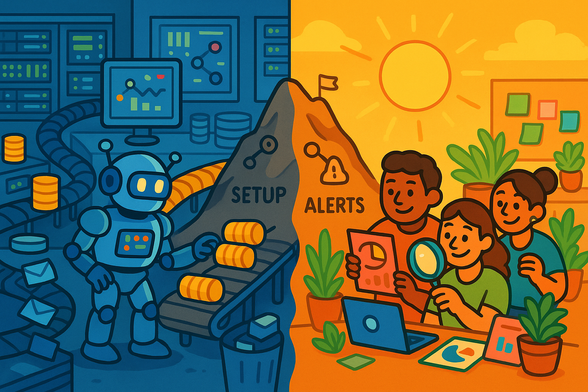

Instead of waiting for data problems to surface in production — or worse, in your ML models or analytics — you can catch them earlier by integrating data checks into your development workflow.

Here’s how:

💡 Add data validation tests to your CI/CD pipeline — just like unit tests for code.

💡 Define and enforce data contracts (expected schemas & rules) between teams or systems.

💡 Run automated change impact analysis when modifying data pipelines to see what breaks before deploying.
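
To make the first two tips concrete, here is a minimal sketch of a data contract with a validation test that a CI job could run alongside unit tests. Everything named here is an assumption for illustration: the `orders` dataset, its columns, `ORDERS_CONTRACT`, and `validate_rows` are hypothetical, and a real setup might use a dedicated validation library instead of hand-written checks.

```python
from typing import Any

# Hypothetical contract for an "orders" dataset: column -> (expected type, nullable).
ORDERS_CONTRACT: dict[str, tuple[type, bool]] = {
    "order_id": (int, False),
    "customer_email": (str, False),
    "amount": (float, False),
    "coupon_code": (str, True),
}


def validate_rows(
    rows: list[dict[str, Any]],
    contract: dict[str, tuple[type, bool]],
) -> list[str]:
    """Return human-readable contract violations (empty list if none)."""
    errors: list[str] = []
    for i, row in enumerate(rows):
        for column, (expected_type, nullable) in contract.items():
            if column not in row:
                errors.append(f"row {i}: missing column '{column}'")
                continue
            value = row[column]
            if value is None:
                # Nulls are only allowed where the contract says so.
                if not nullable:
                    errors.append(f"row {i}: '{column}' must not be null")
                continue
            if not isinstance(value, expected_type):
                errors.append(
                    f"row {i}: '{column}' expected {expected_type.__name__}, "
                    f"got {type(value).__name__}"
                )
    return errors


def test_orders_sample_matches_contract() -> None:
    # Assumption: in a real pipeline this sample comes from a fixture or staging table.
    sample = [
        {"order_id": 1, "customer_email": "a@example.com", "amount": 19.99, "coupon_code": None},
        {"order_id": 2, "customer_email": "b@example.com", "amount": 5.0, "coupon_code": "SAVE10"},
    ]
    assert validate_rows(sample, ORDERS_CONTRACT) == []
```

A test runner such as pytest would pick up `test_orders_sample_matches_contract` like any other unit test, so a contract violation fails the build instead of surfacing downstream.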

By shifting these checks left, so they run on every pull request rather than after deployment, you avoid expensive downstream failures, reduce debugging time, and deliver more reliable ML and analytics outcomes.

Start small: pick one critical dataset or pipeline and add basic schema validation to your PR checks. You’ll thank yourself later.
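
One possible starting point for that PR check is sketched below: compare the schema a pull request proposes against the schema currently in use and flag breaking changes. The function names and the schema format (column name mapped to a type name) are hypothetical, and the sketch assumes that adding a column is safe while removing a column or changing its type is breaking.

```python
def find_breaking_changes(current: dict[str, str], proposed: dict[str, str]) -> list[str]:
    """List changes that would break consumers of the current schema."""
    problems: list[str] = []
    for column, dtype in current.items():
        if column not in proposed:
            problems.append(f"column removed: {column}")
        elif proposed[column] != dtype:
            problems.append(f"type changed: {column} ({dtype} -> {proposed[column]})")
    # Assumption: columns that exist only in `proposed` are additive and safe.
    return problems


def pr_check_exit_code(current: dict[str, str], proposed: dict[str, str]) -> int:
    """Print each breaking change and return a CI-friendly exit code (0 = pass)."""
    problems = find_breaking_changes(current, proposed)
    for problem in problems:
        print(f"BREAKING: {problem}")
    return 1 if problems else 0
```

A CI step could load both schemas from files in the repository and pass the result to `sys.exit`, so that a non-zero code blocks the merge until the change is reviewed.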

💻📊 #SoftwareDevelopment #DataValidation #CICD #TechLeadership #ML #Analytics