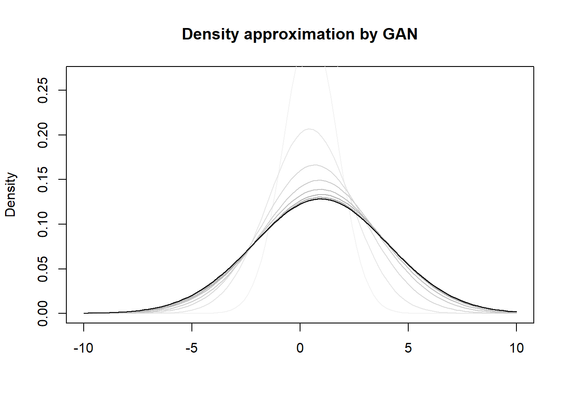

I don't think people understand synthetic data. Sure, some people get that with human-generated data and imitative models you can only asymptotically approach the human level.

And natural data is not necessarily the best data to train AIs with, at least in terms of how densely and faithfully it presents the knowledge and task-related skills you care about.

What if you train your model with natural data, and it still makes errors when deployed? What lever do you pull? Collect more natural data and hope for the best? There has never been a satisfying, scalable answer to this.

What if you add a small indirection and use synthetic data instead? You have instructions or conditioning data that you use to produce your synthetic training corpora. You can trivially incorporate the error cases you see in deployment into your synthetic data generator!

You then actually have the levers you need to make the errors disappear, without repeatedly banging your head against the immovable object that is real data.
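
To make the lever concrete, here is a minimal sketch of that loop, not a real pipeline. The `GeneratorSpec` class, its methods, and the invoice task are all hypothetical names made up for illustration. The only point is that an observed error becomes one more line in the generation instructions.

```python
from dataclasses import dataclass, field


@dataclass
class GeneratorSpec:
    """Hypothetical container for the instructions that condition a synthetic data generator."""

    task: str
    instructions: list[str] = field(default_factory=list)

    def add_error_case(self, description: str) -> None:
        """Fold an error observed in deployment back into the generation instructions."""
        rule = f"Include examples that cover: {description}"
        if rule not in self.instructions:  # avoid adding the same rule twice
            self.instructions.append(rule)

    def to_prompt(self) -> str:
        """Render the spec as a prompt for whatever model produces the corpus."""
        lines = [f"Task: {self.task}"] + [f"- {rule}" for rule in self.instructions]
        return "\n".join(lines)


# Illustrative usage: the task and the error description are invented.
spec = GeneratorSpec(task="Extract invoice totals from emails")
spec.add_error_case("totals written with a comma as the decimal separator")
prompt = spec.to_prompt()
```

Regenerating the corpus from the updated prompt and retraining is the part this sketch leaves out. How you do that depends entirely on your own setup.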

On top of this, you can derive your synthetic data generation instructions from real data while sidestepping the whole personally identifiable data issue: you only extract the meaningful knowledge from the real data, in an enriched form, instead of blindly doing the censorship work of a last-century East German bureaucrat on massive volumes of irrelevant data.

Make your AIs write textbooks on the tasks you want them to master. Make them synthesize training data based on these textbooks. You can then handle the errors better and you don't need to worry about leaking personal data. After all, learning from textbooks is how humans master skills as well.
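
As a rough sketch of the two steps, assuming you have some text-in, text-out model call: the `llm` parameter below stands in for it, and `build_corpus`, `fake_llm`, and the prompt wording are hypothetical, not any particular library's API.

```python
from typing import Callable


def build_corpus(task: str, llm: Callable[[str], str], n_examples: int) -> list[str]:
    """Step 1: have the model write a textbook. Step 2: generate examples grounded in it."""
    textbook = llm(f"Write a textbook chapter teaching how to: {task}")
    return [
        llm(f"Using this textbook:\n{textbook}\nWrite training example #{i + 1} for: {task}")
        for i in range(n_examples)
    ]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs without any external service."""
    return f"[model output for a prompt of {len(prompt)} characters]"


corpus = build_corpus("summarize support tickets", fake_llm, n_examples=3)
```

Swap `fake_llm` for a real model call and the textbook becomes the place where corrections and new error cases go.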

#DeepLearning #AI #SyntheticData