| GitHub | https://github.com/mattkretz |

| ORCID | https://orcid.org/0000-0002-0867-243X |

| Strava | https://www.strava.com/athletes/124318317 |

mkretz


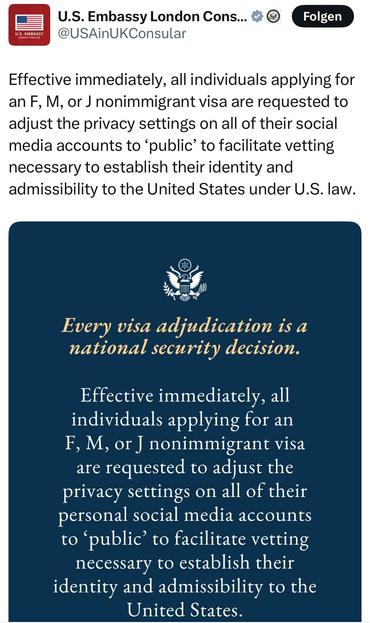

I am probably not the only one who will certainly never travel to the US again as long as the @GOP is in power there.

The speed at which they destroy the foundations of US strength is mindboggling.

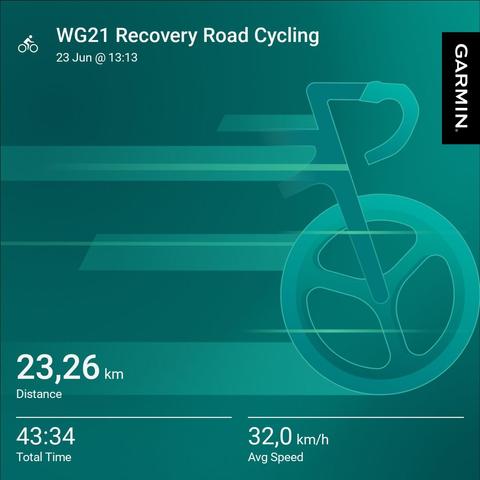

My bike must have missed me. It drove really fast through the 26–54 km/h wind. 😅

But thank you. That was helpful to understand how this was motivated, even if I disagree with it.

And we just renamed it again. Who would have thought that we could name something 'vec' when we already have 'vector' 😅.

It'll be std::simd::vec<T, N> and std::simd::mask<T, N> in C++26.

Also vec and mask are (read-only) ranges now (range-based for works) and we got permutations, gather & scatter, compress & expand as well as mask conversions to and from bitset and unsigned. 🥳

Lots of implementation and optimization work ahead for me now.

I still don't understand why you want very small negative numbers to be preserved under sqrt when all other negative numbers are not.

And of course the inconsistency still doesn't make any sense.

Why did IEEE specify sqrt(-0) to be -0?! That's … surprising when applied to the interpretation of -0 in the context of complex numbers:

sqrt(complex{-0,+0}) is complex{+0,+0}.

And also pow(-0, 0.5) is +0.

If anything, sqrt(-0) should be NaN, but why -0?