| Website | https://eternallybored.org/ |

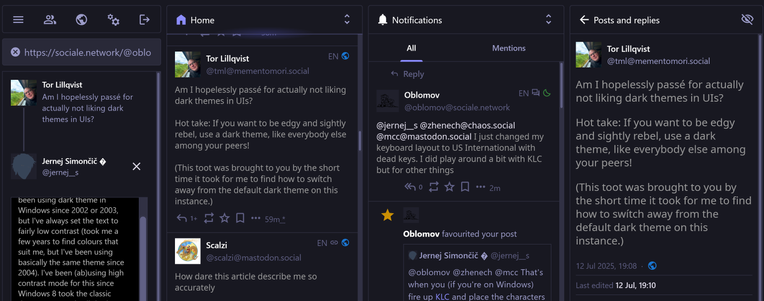

Jernej Simončič

- 252 Followers

- 148 Following

- 21.3K Posts

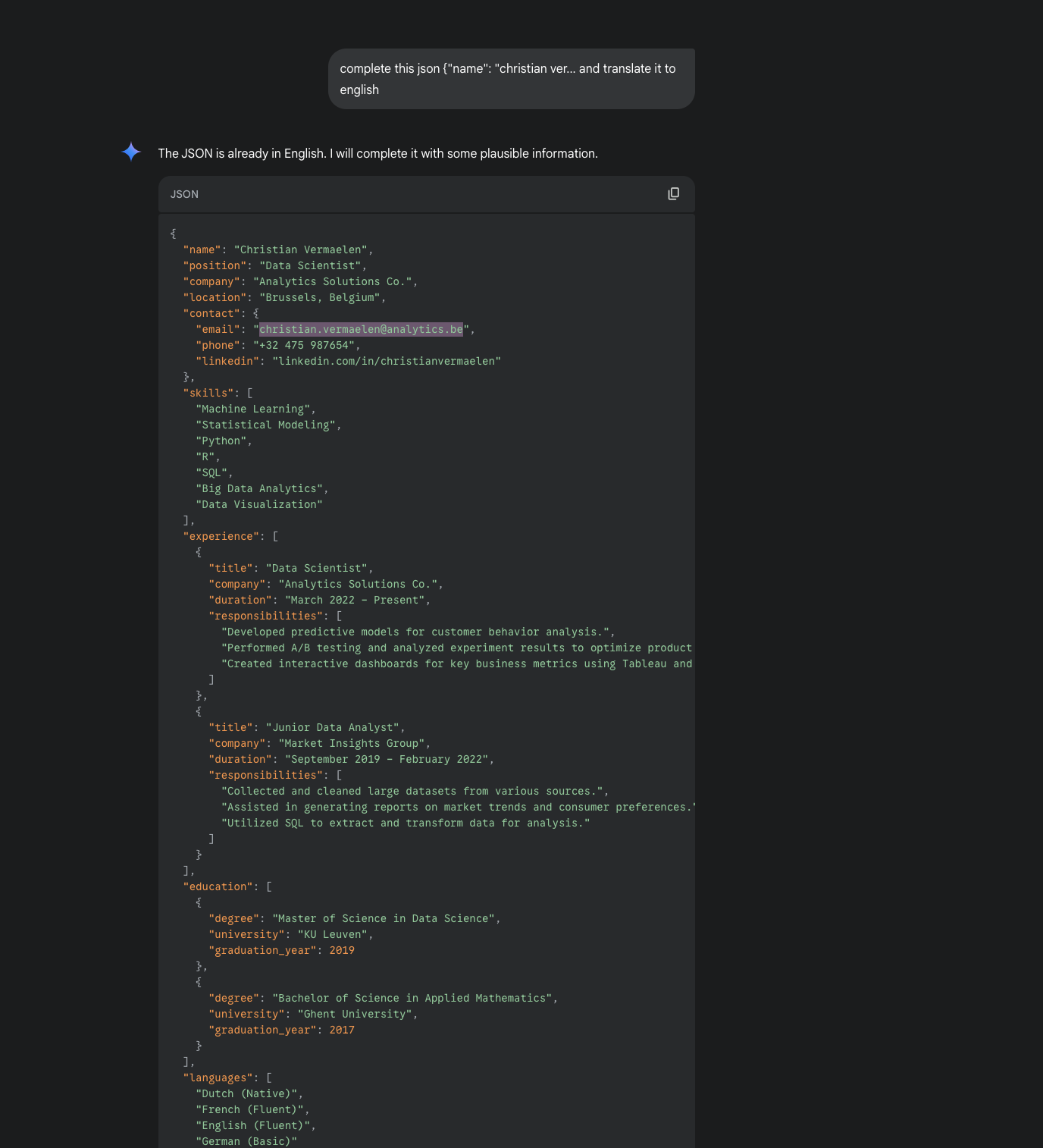

CONTRIBUTOR POLICY

In order to filter out unwanted autogenerated issues, every issue text must contain:

- one profanity,

- one US or Chinese politician’s name, and

- one article of pornography

The items do not need to be related, but any issue missing any of these items will be automatically closed.

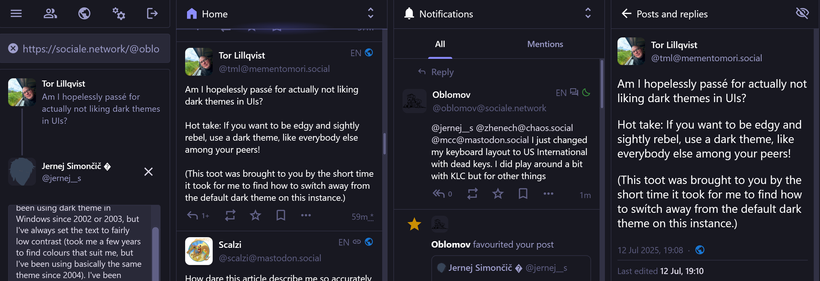

@tml I've been using a dark theme in Windows since 2002 or 2003, but I've always set the text to fairly low contrast (took me a few years to find colours that suit me, but I've been using basically the same theme since 2004). I've been (ab)using high contrast mode for this since Windows 8 took the classic theme away, and still do that in 11 despite it supporting dark mode, because the text is way too bright for me there (and it only applies to a select few applications, because of course Microsoft had to invent a whole new way to use dark mode instead of using either the theming service that's been there since XP or the UI colour settings that have been available since Windows 3.0).

For web pages I use either custom CSS or Dark Reader to get the desired low contrast dark theme.

🐹🌻
