🚨New preprint alert!🚨

We (lead author: Lucy Owen) used the Hasson Lab's “Pie Man” dataset (Simony et al., 2016) to explore how “informative” and “compressible” brain activity patterns are during intact/scrambled story listening.

Preprint: https://biorxiv.org/cgi/content/short/2023.03.17.533152v1

Code/data: https://github.com/ContextLab/pca_paper

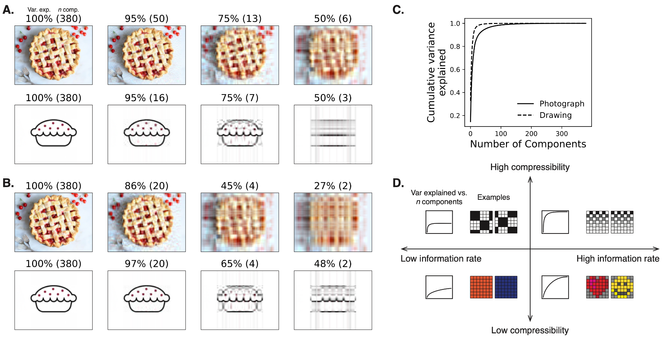

The core idea is that more “informative” brain patterns should yield higher classification accuracy, and more “compressible” brain patterns should yield higher accuracy for a fixed (small) number of features. We’re interested in how the two trade off under different listening conditions.
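To make the informativeness/compressibility distinction concrete, here’s a minimal Python sketch. It is *not* the code from the paper (that lives in the repo above). It assumes PCA for reducing the number of features and a simple correlation-based timepoint classifier, and it runs on synthetic, hypothetical data rather than the Pie Man recordings. All function names are made up for illustration.

```python
import numpy as np


def simulate_subjects(n_subjects=10, n_timepoints=40, n_voxels=50,
                      n_latent=5, noise=1.0, seed=0):
    """HYPOTHETICAL data: a shared low-rank "stimulus-driven" signal plus
    independent noise for each subject. Shape: (subjects, timepoints, voxels)."""
    rng = np.random.default_rng(seed)
    shared = rng.standard_normal((n_timepoints, n_latent)) @ rng.standard_normal((n_latent, n_voxels))
    noise_terms = noise * rng.standard_normal((n_subjects, n_timepoints, n_voxels))
    return shared[np.newaxis] + noise_terms


def pca_project(fit_data, datasets, k):
    """Fit PCA on fit_data (rows = samples) and project each dataset onto the top-k components."""
    mean = fit_data.mean(axis=0)
    # Rows of vt are principal axes, ordered by decreasing explained variance
    _, _, vt = np.linalg.svd(fit_data - mean, full_matrices=False)
    return [(d - mean) @ vt[:k].T for d in datasets]


def row_zscore(x):
    """Z-score each row (timepoint) across its features."""
    x = x - x.mean(axis=1, keepdims=True)
    return x / x.std(axis=1, keepdims=True)


def timepoint_decoding_accuracy(a, b):
    """Fraction of timepoints in `a` whose most-correlated timepoint in `b` is the same timepoint."""
    corr = row_zscore(a) @ row_zscore(b).T / a.shape[1]  # Pearson r for every (t_a, t_b) pair
    return float(np.mean(corr.argmax(axis=1) == np.arange(a.shape[0])))


def accuracy_by_num_features(group_a, group_b, ks):
    """Decoding accuracy after reducing both groups to k principal components, for each k."""
    stacked = np.vstack([group_a, group_b])  # fit one shared PCA basis on both groups
    return [timepoint_decoding_accuracy(*pca_project(stacked, [group_a, group_b], k)) for k in ks]


subjects = simulate_subjects()
group_a = subjects[:5].mean(axis=0)  # average of half the (hypothetical) subjects
group_b = subjects[5:].mean(axis=0)  # average of the other half
ks = [3, 5, 10, 20]
accuracies = accuracy_by_num_features(group_a, group_b, ks)
for k, acc in zip(ks, accuracies):
    print(f"k={k:2d} components: accuracy={acc:.2f} (chance={1 / group_a.shape[0]:.3f})")
```

Under these assumptions, “informativeness” roughly corresponds to the accuracy reached with many components, and “compressibility” to how much of that accuracy survives with only a few.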

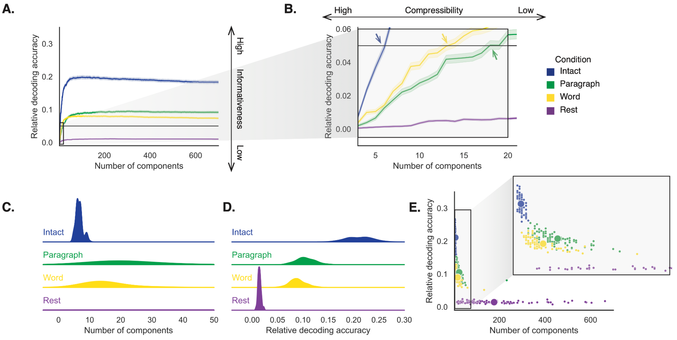

Brain activity from people listening to the unscrambled story was both more informative overall *and* more compressible than activity during scrambled listening or during rest.

As the story progresses, these differences get stronger! After listening for a while, activity evoked by the intact or coarsely scrambled story becomes even *more* informative and compressible, whereas activity during finely scrambled listening or rest becomes *less* informative and compressible.

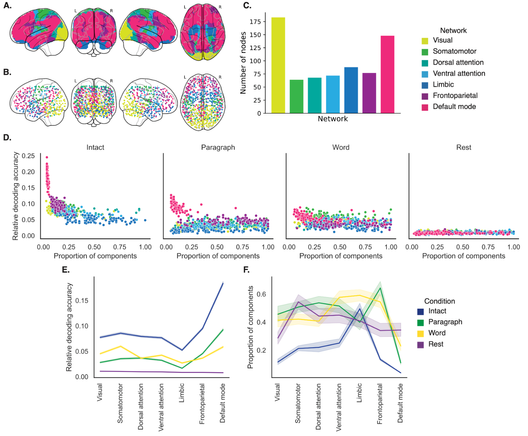

We also zoomed in on specific networks. Activity from higher-order brain areas was generally more informative than activity from lower-order areas, but we didn’t see any obvious differences in compressibility across networks.

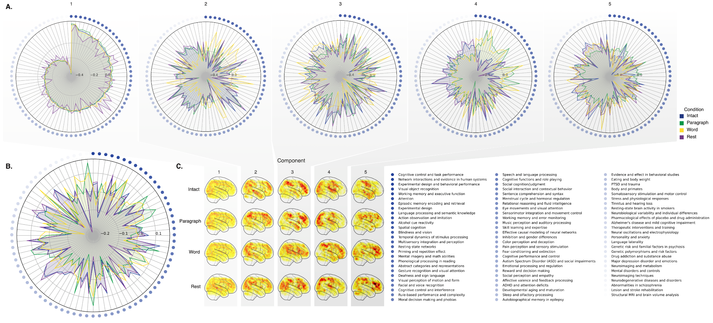

We also ran some exploratory analyses, using a combination of @neurosynth and ChatGPT to help interpret what the different patterns we found might “mean” from a functional perspective.

Taken together, our work suggests that brain networks flexibly reconfigure according to ongoing task demands: activity patterns associated w/ higher-order cognition and high engagement are more informative and compressible than patterns evoked by lower-order tasks.