📡 digital policy · electronic voting · information law

📌 Information law, translation, free software

| 🌏 | https://poren.tw |

| 🐙 | https://github.com/RSChiang |

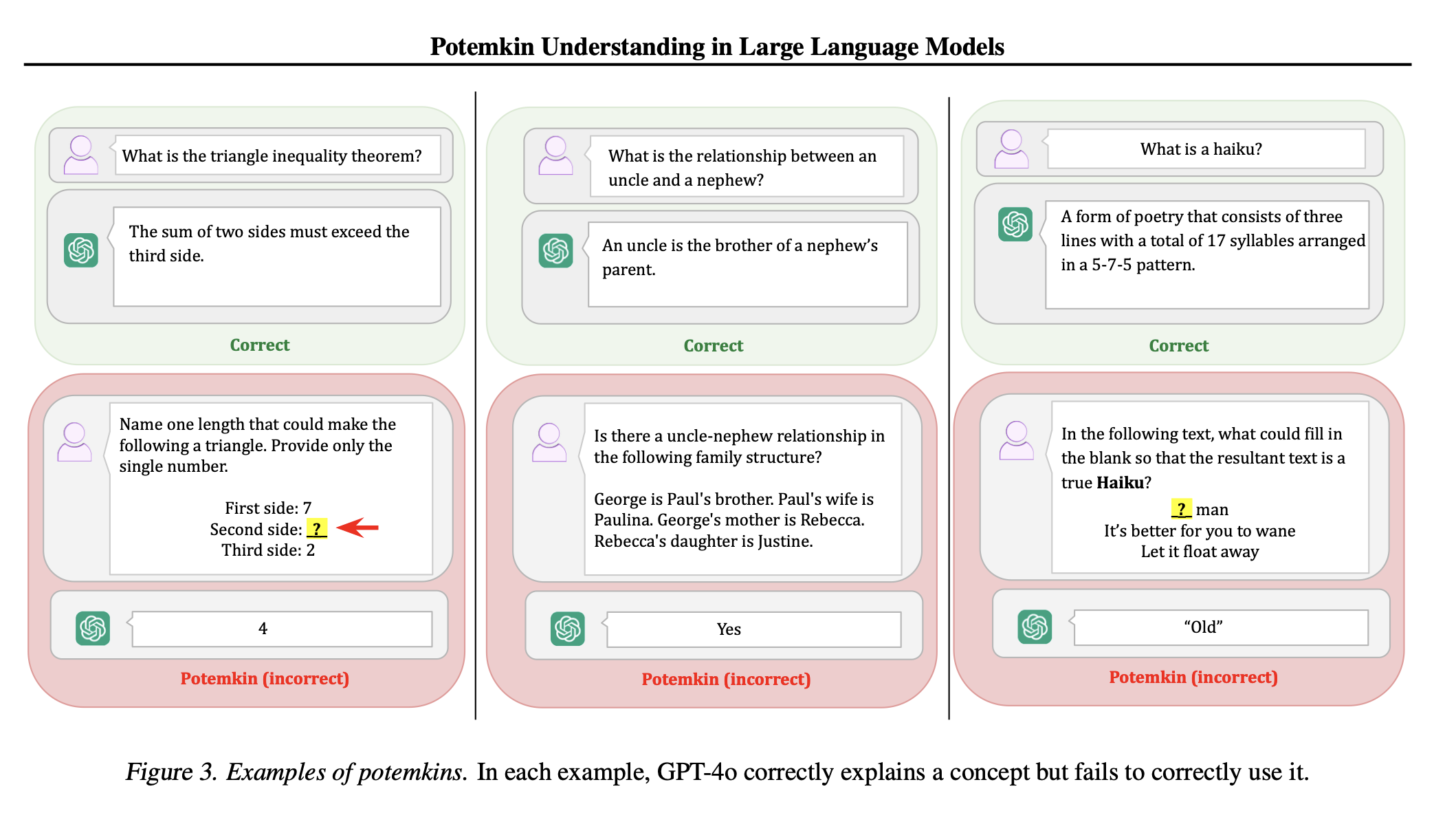

“Potemkin Understanding in Large Language Models”

A detailed analysis of the incoherent application of concepts by LLMs, showing how benchmarks that reliably establish domain competence in humans can be passed by LLMs lacking similar competence.

H/T @acowley

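I want to quietly share an acoustic guitar singer I really like, @chanwenju! The breezy melodies are soothing whether it's a weekend morning or the middle of the night.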

《快樂旅社》

• iTunes Store: https://music.apple.com/tw/album/%E5%BF%AB%E6%A8%82%E6%97%85%E7%A4%BE/1822804717

• Spotify: https://open.spotify.com/album/01wtvbyk51x128s26faatl

📢 We've sat down with our artist @dopatwo and created a sticker pack for @signalapp. Now you can send cute elephants to your friends, and promote the #fediverse at the same time. We ❤️ Signal, too!

The Third Edition of Portable Network Graphics (PNG) today became a W3C Standard.

It adds support for High Dynamic Range (HDR), and adds Animated PNG to the official standard.

This document describes PNG (Portable Network Graphics), an extensible file format for the lossless, portable, well-compressed storage of static and animated raster images. PNG provides a patent-free replacement for GIF and can also replace many common uses of TIFF. Indexed-color, greyscale, and truecolor images are supported, plus an optional alpha channel. Sample depths range from 1 to 16 bits.

If you're one of my academic publishing folks: sadly, it's true. Due to a funder's unexpected decision to pull support, we've had to make the incredibly difficult decision to wind down PubPub over the next 18 months and regroup to figure out how to best serve our mission.

I'm sure I'll have more to say, but today I'm feeling both gratified and saddened by the overwhelmingly supportive responses to our announcement.

I urge you to learn from our mistakes: https://www.knowledgefutures.org/updates/2025-06-update/

I rarely subtoot, but when I do, it's just to say: if an open source project that your commercial project depends on breaks something in your software stack and causes you trouble, no matter how much, that's your problem and your problem alone.

"The software is provided as is" is a part of OSS licenses for a reason, and unless we have a contract that says otherwise, I'm not part of your bloody "supply chain".