LLMs are not intelligent, but they ARE good at spouting extruded text that mimics the zeitgeist of their training data. If that training data includes the overflowing sewer that is the unfiltered internet, you're going to get gaslighting, lies, conspiracy theories, and malice. Guardrails only keep some of it from spilling over.

Humans base their norms on their peers' opinions, so LLMs, whose output can pass for those opinions, potentially normalize all sorts of horrors in discourse, probably including ones we haven't glimpsed yet.

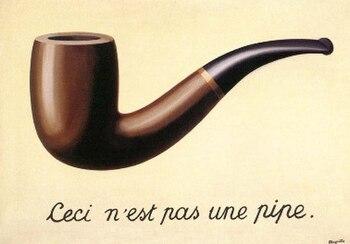

➖

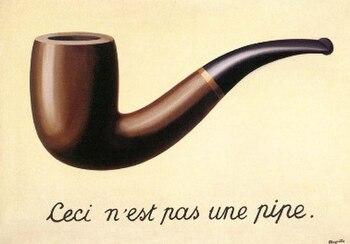

➖