You make the flawed assumption that AI tech will not advance.

That has not been the case so far.

Not sure why you might think this since there is no evidence for that.

@n_dimension @thomasfuchs You make the flawed assumption that "AI tech" will advance, despite all the best training data already having been used.

Sure, models may get cheaper to train, and there may be more guardrails to prevent the worst outcomes. But even for a modest goal of 100x fewer incorrect results there is simply no path.

You have a source for your claim "best training data has been used"?

Because I can link you a paper about synthetic data being used for frontier models.

There is one thing that I have seen that is stronger than AI being "confidently incorrect" and that is AI antagonists that refuse to learn AI and base their opinions on information that is often more than a year old.

I am constantly in awe of how sure AI antagonists are, based on their mutually reinforced echo chamber of social posts.

@n_dimension @glent @thomasfuchs Don't worry, with the escalation of AI's hunger for resources, both AI companies and their users are also going to escalate the fucking up of the climate, so before it gets this good, we won't be able to breathe.

Or rather, we the people won't, disgusting rich assholes will have their secure places and a few mercenaries protecting them.

@Johns_priv @glent @thomasfuchs

They all have bunkers.

We have breached 7 of the 9 human life support parameters.

Worse yet, they all embrace #transhumanism which is an evil, fascist ideology once you scrape off the lipstick.

But the "#AI uses huge amounts of power" claim is nonsense. By the numbers, in 2024 *ALL DATACENTERS* accounted for only about 0.2% of global carbon emissions. And 50% of those are your Facebooks, iClouds and Dropboxes.

All the articles by experts I have read so far are "percentage of a percentage", avoiding actual numbers, plus appeals to authority (experts).

I understand that you have to make it sound like an urgent problem, because otherwise there will be no action. Just don't look at the data, lest you get challenged. Also everyone (especially the uninformed) assumes #aienergyconsumption will grow. That's kinda reasonable, but the assumed slope of that consumption is not, given developments like model distillation. There are 240,000+ models on #huggingface, some billions of parameters large, that you can run on a super beefy home PC. Not a data centre.

The really progressive #AI counter movement ought to be on #personalai and #regulateAI

I can't see AI antagonists smashing jet planes and #petroAgriculture, which seems, in their style, hypocritical and poorly informed.

@n_dimension @glent @thomasfuchs Thanks for that.

Predicting whether AI will reach / exceed human level capabilities of writing code is hard. What's clear is

- there is a mad race to AGI, because the probability of getting there is >0, and the prize for reaching AGI first is enormous.

- We had many similar situations where many predicted the end of Moore's law, but ingenious engineers found ways to stay on track.

Given the incredible investment and successes I guess we will see AGI in a few years.

and b) the "AI key skill" because once you get to AI developers that outclass humans you get into a fast cycle of improvement.

@Mastokarl @glent @thomasfuchs

True.

Writing code with AI is effective because it either works or not...except you may have embedded logic errors...but as with biologicals' code...you would hope one does design and unit testing before prod.
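To make the "works but has an embedded logic error" point concrete, here is a minimal sketch in Python. The function names and test cases are hypothetical, picked only for illustration: a naive leap-year check runs without complaint, and only a unit-test-style comparison against expected results shows that it is wrong for century years.

```python
def is_leap_year_naive(year: int) -> bool:
    # Runs without error, but embeds a logic error: it ignores the century rule.
    return year % 4 == 0


def is_leap_year(year: int) -> bool:
    # Gregorian rule: divisible by 4, except centuries not divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def failing_cases(fn, cases):
    """Return the (input, expected) pairs that fn gets wrong."""
    return [(arg, expected) for arg, expected in cases if fn(arg) != expected]


# Hypothetical test table; 1900 is the case that exposes the naive version.
CASES = [(2024, True), (2023, False), (1900, False), (2000, True)]

if __name__ == "__main__":
    print("naive failures:", failing_cases(is_leap_year_naive, CASES))
    print("fixed failures:", failing_cases(is_leap_year, CASES))
```

Both versions "work" in the sense of running; only the check against known answers tells them apart, whoever (or whatever) wrote the code.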

@Mastokarl @glent @thomasfuchs

The race is not to #AGI...

...but to automated AGI researchers. Working at speeds up to 150,000 times that of biologicals, we may actually reach AGI in a singularity ...

...and extinction by Tuesday.

I don't fear #AI.

I fear humans who own AI, because they have shown historically that they don't give a fuck about other humans.

And one of them is a psycho fascist who thinks empathy is a weakness.

@n_dimension @glent @thomasfuchs Yes, exactly my thoughts. That's why I'm rooting for Open Source AIs - it is ridiculous to assume that of all people we should trust often sociopathic billionaires with this technology.

But of course, they will continue to argue "if we have state of the art open source AI, the world will end". Right.

An extraction scheme to pump money, power and knowledge away from workers and into the hands of capitalist grifters.

The system will mostly fail, with some good use cases, but will have bad outcomes for most workers. Seals will applaud the billionaires laughing their way to the bank.

You are 100% correct.

Training is theft. And under the laws we live by, the courts have so far endorsed this theft.

As is the impact on Labor.

In my view, it's super hypocritical to suddenly care about job losses now, when our jobs are on the chopping block, when for the last 80 years, we were totally fine with labourers losing theirs.

But I am annoyed by the ineffectual echo chamber "AI bad" posts, mainly by people who don't actually use #AI.

The way to fix this developing AI catastrophe is strong AI regulation.

Again I don't see any of it from AI antagonists.

Or just smash the data centres, #luddite style. But again, I don't see any of it.

Just poorly informed posts on social.

#regulateAI NOW

Otherwise I am convinced that AI is the next big scheme to pound workers into submission, by convincing us of the limited utility of our work. Appropriating and leveraging our past common achievements.

Nothing short of a revolution in workers actually owning the means of production will make AI actually work in our favour.

It's a very big ask for regulation to help when there's such inertia behind it.

UNITED

THE WORKERS

WILL NEVER BE DEFEATED!

The industrial revolution gave us trade unions.

Let's hope the #AI revolution will pull in the suits and white collar workers (looking specifically at you IT folk) most of whom did not think themselves part of the proletariat.

Machines will replace us all unless we do more than post screen caps on social of public engines being stupid.

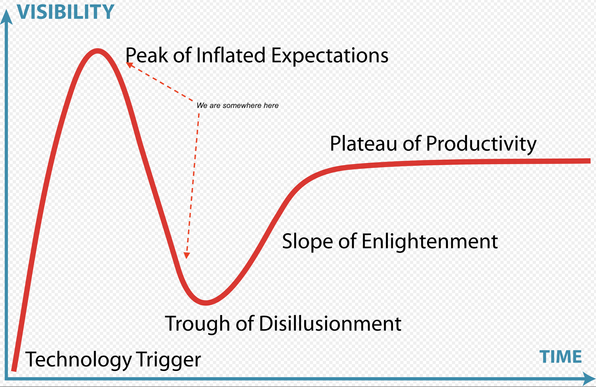

I partially agree with the statement, that AI *WILL* get better. But as the hype is going up it seems to be getting much worse. Up to now, the base paradigm about computers was that they are consistent even in their errors. With LLMs we get computers that make inconsistent errors that are much trickier to fix.

The LLMs are getting worse because (I have noticed it myself) ChatGPT especially is throttling down compute.

Most folks who use LLMs don't need deep think for planning a party or finding a word with three Js to WTFPWN at Scrabble.

As to the accuracy, it's one of those falsehoods that was true 1 year ago.

I verify most of the "Hahaha stupid AI" posts that come across my timeline, and most of them:

a) Don't show the actual prompt

b) Are from "Google Overview", which is not a true LLM but a search-LLM hybrid incorporating the worst of both worlds.

c) Are an example of someone who actually does not know how to prompt properly.

It amazes me how many #AI antagonists simultaneously think AI is dumb and also an omniscient mind reader.

About 10% is accurate though.

@n_dimension @glent @thomasfuchs

"As to the accuracy, it's one of those falsehoods that was true 1 year ago."

You're not the only person verifying those, but not every AI skeptic gets their information from memes. My experience is the opposite. Specific example: a year ago, I was able to paste my Google Scholar publications into ChatGPT to format them to match the rest of my CV. This year it started making up PMIDs even though I provided all the info, which it just had to reshuffle. Just one of many issues.

I wish more informed AI sceptics engaged with me.

Most just yell at me and block me.

Which is sad, because, like them, I started in a high state of AI anxiety.

I still think AI is going to end badly for us.

But my approach is reasoned engagement and regulations.

@n_dimension @glent @thomasfuchs

The issue with current LLMs is that they are increasingly shaped to resemble Silicon Valley CEOs, i.e., to be convincing liars. The past human/computer dynamic was about humans being erratic but flexible and machines being rigid but consistent. If this relationship flips, and ppl get tasked to error check unlimited AI output, we'll lose efficiency.

Also, AI's IQ-lowering impact may dwarf that of Pb. It's a dble edged sword and should be implemented w/ caution.

@glent @n_dimension @thomasfuchs precisely!

- There is a finite amount of "good quality code" to train on and there are finite ways to solve defined problems in each programming language unless we accept inefficiency just to circumvent optimal solutions...

"Generative" not "Duplicative"

A fascinating philosophical and mathematical problem.

Countable infinity and uncountable infinity.

I am sceptical about your claim "there are finite ways of solving a problem".

Intuitively, I feel that's wrong, as we work within various (artificial) constraints.

I won't argue it, as I am fundamentally ignorant of it.

But I will delve deeper into theory of algorithms. You piqued my interest, thanks.

@n_dimension @glent @thomasfuchs There are finite ways to solve a problem given a specific toolset and finite resources...

- IOW: There are only a few methods to, e.g., check whether values are identical or not that are reliable for any data type and especially across data types.

And that is a matter of fact with any programming language.

Feel free to read up, e.g., on strcmp and other functions, because there are few options at hand.
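As a rough illustration of how small that toolbox is, here is a sketch in Python rather than C. The helper names `strcmp_like` and `same_value` are made up for this example and are not library functions: one does a three-way byte comparison in the spirit of `strcmp`, the other a strict equality check that refuses to compare across types.

```python
def strcmp_like(a: bytes, b: bytes) -> int:
    """Three-way byte comparison in the spirit of C's strcmp.

    Returns a negative number, zero, or a positive number. Unlike the C
    function it relies on the known length instead of a NUL terminator.
    """
    for x, y in zip(a, b):
        if x != y:
            return x - y
    # All shared positions match, so the shorter input sorts first.
    return len(a) - len(b)


def same_value(a, b) -> bool:
    """Strict check: equal only if both the type and the value match."""
    return type(a) is type(b) and a == b


if __name__ == "__main__":
    print(strcmp_like(b"abc", b"abd"))  # negative: "abc" sorts before "abd"
    print(1 == 1.0)                     # loose equality across int and float
    print(same_value(1, 1.0))           # strict check rejects the type mismatch
```

Nearly every equality test ends up as some variant of these: compare element by element, or decide up front how much type coercion to tolerate.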

Thanks will do.